Evaluating a Planning Product for Active Cyberdefense and Cyberdeception

Justin J. Green

U.S. Naval Postgraduate School

Monterey, CA 93943 USA

Sasha K. Drew

U.S. Naval Postgraduate School

Monterey, CA 93943 USA

Charles W. Heinen

U.S. Naval Postgraduate School

Monterey, CA 93943 USA

Robert E. Bixler

SoarTech, Inc., 3600 Green Ct.

Ann Arbor, MI 48105 USA

Neil C. Rowe (contact)

U.S. Naval Postgraduate School

Monterey, CA 93943 USA

Armon C. Barton

U.S. Naval Postgraduate School

Monterey, CA 93943 USA

Abstract—Active defenses are increasingly used for cybersecurity, as they add lines of defense after access controls and system monitoring. This work tested CCAT, a commercial tool from Soar Technology Inc., for planning active defenses, including cyberdeception. The tool simulates a formal game between attacker and defender, offering options for both sides with associated costs and benefits. Both sides play a series of random games in advance to learn the best methods in a range of adversarial situations. Our experiments then tested the best active-defense responses to six new kinds of cyberattacks and showed that our defensive methods effectively impeded them. Overall, the product worked well for developing realistic attack scenarios, but it required considerable work to set up, and the attack plans were quite predictable.

This paper appeared in the Proceedings of the 22nd International Conference on Security and Management, Las Vegas, NV, US, July 2023.[1]

Keywords—cybersecurity, deception, wargaming, defense, games, optimality, planning, testing

Active defenses are increasingly being explored to strengthen cybersecurity. They include dynamic modification of systems to confuse cyberattacks, as well as automated tracking of and searching for attacks and attackers. They also include deliberate defensive deception, for which a wide range of methods is available (Rowe and Rrushi, 2016). Deception has the advantages of flexibility and unexpectedness. Most theories of ethics permit occasional deception to reduce harm, and damage to computer systems and theft of valuable data are often worse harms than occasional defensive deception. Deception is also considered acceptable in warfare, and nation-states can use cyberattacks as military tools.

In the last ten years, commercial products have become available for active defenses, especially products offering deception as an option. Some are not very good; one recent product we reviewed provided decoy processes on digital systems, but the decoys were easily detectable by their unusual traffic. Better products are available, but the question is how well they will fool attackers. Most cyberattacks are automated and pay little attention to what they attack, so they are hard to deceive. However, manual cyberattacks on high-value targets do occur, and the most important of these are “advanced persistent threats” or APTs. These are state-sponsored “information operations” that slowly probe a system to find its weaknesses, then steal data or do sabotage (Clark and Mitchell, 2019). Deception can be especially useful in defending against them.

Wargames help in analyzing and planning a broad range of military activities. Cybersecurity is like a war between attackers and defenders, an analogy that fits especially well when state-sponsored information-operations specialists are involved. Cybersecurity wargames can model operations using procedures, data, and rules at far lower cost than exercises or red-teaming, and they can handle situations with more options than humans can comprehend. Unexpected scenarios can occur in wargames just as in actual warfare, and they prepare users better for real conflict.

This work evaluated a wargaming product for planning of active defenses for local-area networks including cyberdeception. The product differs from most defensive cyberdeception products in that it is only a planner, which permits it some continuing value as software and attacks evolve.

Traditionally, computer systems and other devices within cyberspace provide very accurate information about themselves for operation and administration. This means that deception is unexpected and can be very effective for defenders in cyberspace. Its capabilities are increasingly useful because cyber defense is becoming more difficult due to the number of devices with Internet connectivity, the number of new vulnerabilities being discovered, and the increasing sophistication of attacks, so cyber defense must be increasingly automated. Automated deception can allow defenders to conceal high-value targets while encouraging attackers to seek low-value targets (Gartzke & Lindsay, 2015). It also gives defenders more time to identify attacks and figure out how to fight them, a strategic advantage (Park & Kim, 2019).

Commonly seen examples of defensive deception are honeypots and fakes (Rowe and Rrushi, 2016). Honeypots are decoy devices with no other purpose, which entice and trap attackers into revealing their methods; fake documents or devices let the defender mislead attackers. The research described here simulated both. A range of work on defensive deception has been done. For instance, one project applied deep learning to automate the evaluation of cyber-deceptive defenses (Matthew, 2020). Another project introduced moving decoys (Sun et al., 2019), in which front-end decoys constrain attackers and forward their malicious commands to decoy servers, giving attackers plausible-looking results from their attacks.

Deception games are imperfect-information stochastic games in which each agent tries to minimize its worst-case potential loss by considering the opponent’s response to its strategy, and selects the tactics that give the largest guaranteed payoff. Major et al. (2019) used games to plan a defense using lightweight decoys while hiding and defending real hosts. The defender and attacker played a game with resources consisting of real and decoy systems, possible actions for each player, and a method for defining and evaluating individual player strategies. The work used multiple game trees and an explicit representation of each player’s knowledge of the game structure and payoffs.
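To make the worst-case reasoning concrete, here is a minimal Python sketch that picks a pure defender tactic by maximin over a payoff table. The tactic names, attacker responses, and payoff numbers are hypothetical; real deception games like those above involve mixed strategies, hidden information, and chance, which this toy omits.

```python
from __future__ import annotations


def maximin_tactic(payoffs: dict[str, dict[str, float]]) -> tuple[str, float]:
    """Return the defender tactic whose worst-case payoff is largest.

    payoffs[tactic][response] is the defender's payoff when the attacker
    answers that tactic with that response (higher is better for the defender).
    """
    best_tactic, best_value = "", float("-inf")
    for tactic, row in payoffs.items():
        worst = min(row.values())  # the attacker's most damaging response
        if worst > best_value:
            best_tactic, best_value = tactic, worst
    return best_tactic, best_value


# Hypothetical defender payoffs, for illustration only.
PAYOFFS = {
    "no_deception": {"scan": -5.0, "phish": -8.0, "exploit": -6.0},
    "decoy_servers": {"scan": 2.0, "phish": -4.0, "exploit": -1.0},
    "rename_files": {"scan": -2.0, "phish": -3.0, "exploit": -7.0},
}

print(maximin_tactic(PAYOFFS))  # ('decoy_servers', -4.0)
```

Choosing the row with the best worst case is the pure-strategy form of the minimax idea; letting the defender randomize over tactics can guarantee a better worst case in some games.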

Modeling attacks enables simulation and testing of them, so much work has built attack models. One method of attack modeling focuses on conditions like trust and availability to define the effects of cyberattacks on human behavior (Cayirci and Ghergherehchi, 2011). Another approach uses attack graphs to represent cyberattacks visually, though this requires considerable manual labor by analysts (Liu et al., 2012). Research using cost- and incentive-based methods can infer this kind of information, such as attacker intent, objectives, and strategies (Liu, Zang, and Yu, 2005). Another project built attack models using deep learning, with numerical simulations to verify accuracy (Najada et al., 2018). Another generated an agent taxonomy using topological data analysis of simulation outputs (Swarup and Rezazadegan, 2019). A useful but necessarily limited resource is the Malicious Activity Simulation Tool, a scalable, flexible, and interoperable architecture for cybersecurity training (Swiatocha, 2018).

Attack modeling should include simulation of attacker movement through the network to establish footholds, or “lateral movement” (Bai et al., 2019). A defender can detect these movements and take countermeasures. Defenders can make decoys look like legitimate network assets to confuse the attacker (Amin et al., 2020). Previous indicators of compromise can help network defenders identify and prevent this activity. One way to reduce searching for lateral movement is to find shortest paths by biased random walks (Wilkens et al., 2019). Honeypots help detect lateral movement since any access to them is suspicious.
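The lateral-movement idea can be illustrated with a small Python sketch that finds the fewest-hop path from an attacker's foothold to a target and checks whether that path passes through a honeypot. It uses plain breadth-first search, not the biased random walks of Wilkens et al. (2019), and the topology and host names are hypothetical.

```python
from __future__ import annotations

from collections import deque
from typing import Optional

# Hypothetical adjacency list: which hosts an attacker can reach from which.
NETWORK = {
    "workstation": ["web_server", "honeypot"],
    "web_server": ["workstation", "app_server"],
    "honeypot": ["workstation", "file_server"],
    "app_server": ["web_server", "db_server"],
    "db_server": ["app_server", "file_server"],
    "file_server": ["honeypot", "db_server"],
}
HONEYPOTS = {"honeypot"}


def shortest_path(graph: dict, start: str, goal: str) -> Optional[list]:
    """Breadth-first search: return a fewest-hop path, or None if unreachable."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        if host == goal:
            path = []
            while host is not None:  # walk parent links back to the start
                path.append(host)
                host = parents[host]
            return path[::-1]
        for neighbor in graph.get(host, []):
            if neighbor not in parents:
                parents[neighbor] = host
                queue.append(neighbor)
    return None


def touches_honeypot(path: list) -> bool:
    """Any access to a honeypot is suspicious, so such a path would be noticed."""
    return any(host in HONEYPOTS for host in path)


if __name__ == "__main__":
    path = shortest_path(NETWORK, "workstation", "file_server")
    print(path, "detected" if touches_honeypot(path) else "undetected")
```

In this toy network the shortest route runs through the honeypot, which is the placement a defender would want: the quickest lateral move is also the one that reveals the attacker.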

Providing both real and fake data in network scans can confuse an attacker doing reconnaissance of a network (Jajodia et al., 2016). Game-theoretic analysis can evaluate the effectiveness of deceptions in a simulated environment (Wang and Lu, 2018) and help determine an optimal defender strategy against adversaries in a cyberdeception game (Fang et al., 2018). Game theory can also evaluate the concealment of decoys (Miah et al., 2020).

A weakness of all this previous work is that it tested research prototypes of limited scope and robustness. Our work here tested a commercial tool with a wide range of capabilities.

We used a proprietary product from Soar Technology Inc. (SoarTech), the Cyberspace Course of Action Tool (CCAT) (SoarTech, 2017). The CCAT simulates deception and active defenses within a computer network, and permits training of security measures in the form of a wargame that is run many times with random options to learn the best tactics for both attacker and defender. Our experiments with CCAT tested eight scenarios, including two controls. More details are in Green (2020) and Drew and Heinen (2022).

The CCAT runs a cyber wargame between attackers and defenders. Attacker and defender alternate in choosing actions, and can choose them based on the current situation. Our experiments were designed with network assets and nodes to simulate a real-life network. Empirical game-theoretic analysis was used with reinforcement learning to train agent behavior from the results of games. Training aimed to make each agent more likely in the future to choose actions that provided more benefit or reduced the cost of achieving its objectives.

Experiments ran a cyber wargame with attacker and defender agents. The primary attacker goals were to exfiltrate a file “PII.txt” of sensitive data, and to corrupt or destroy the “sysconfig.conf” file on the file server. The PII.txt file simulated employee personal data (social-security numbers, addresses, phone numbers, and blood type). The “sysconfig.conf” file simulated a system file used to run a critical service on the server. The file server simulated mission-critical servers found on critical-infrastructure networks. The defender’s goals were to delay the attacker as much as possible and increase their costs.

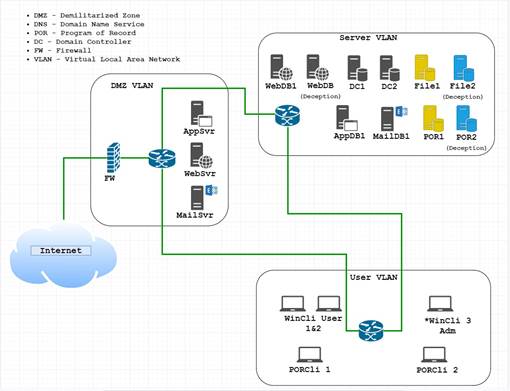

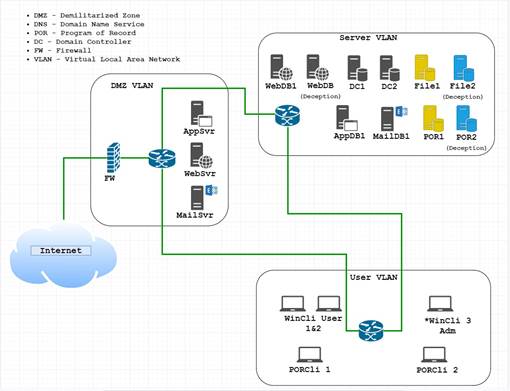

The network map of our simulated test network is in Figure 1; it simulates a local-area network with segmentation and security. It has three simulated local-area subnetworks containing servers, workstations, a firewall, and other commonly seen network devices. Front-end servers support Web, mail, and applications that access the backend servers for those specific tasks. The server network contains two simulated Program of Record (software) servers, two domain controllers, two Web database servers, two file servers, and one router with the application and mail backend servers.

Figure 1: Map of the simulated network used by the wargame.

Automated decision-making by the attacker and defender was based on empirical game-theoretic analysis, specifically a “double oracle” variant with deep reinforcement learning (Wright et al., 2019). Before testing, both attacker and defender agents did a million training runs for each experiment, trying different random actions and action sequences to determine the best tactics. The optimal strategy for an agent in this game is a Nash equilibrium, and the strategies closest to the Nash equilibrium for both attacker and defender agents were selected for testing. However, many strategies are available to both attacker and defender, and only a sample of them could be tested in the training period.
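One way to quantify “closest to the Nash equilibrium” in a two-player zero-sum game (the scoring described later makes the defender's score the negative of the attacker's) is exploitability: how much the two sides together could gain by switching to best responses. It is exactly zero at an equilibrium. The Python sketch below is a hypothetical helper over a small matrix game, not CCAT's actual selection criterion, which the text does not specify; rock-paper-scissors stands in for a real payoff table.

```python
from __future__ import annotations


def exploitability(payoff: list[list[float]], x: list[float], y: list[float]) -> float:
    """Sum of both players' best-response gains; 0 exactly at a Nash equilibrium.

    payoff[i][j] is the defender's payoff when the defender plays tactic i and
    the attacker plays tactic j; x and y are their mixed strategies.
    """
    rows, cols = len(payoff), len(payoff[0])
    # Best payoff the defender could get by deviating against the attacker's mix y.
    defender_best = max(sum(payoff[i][j] * y[j] for j in range(cols)) for i in range(rows))
    # Lowest defender payoff the attacker could force by deviating against mix x.
    attacker_best = min(sum(x[i] * payoff[i][j] for i in range(rows)) for j in range(cols))
    return defender_best - attacker_best


def closest_to_equilibrium(payoff, candidates):
    """Pick the (x, y) candidate profile with the lowest exploitability."""
    return min(candidates, key=lambda xy: exploitability(payoff, xy[0], xy[1]))


# Rock-paper-scissors as a stand-in payoff matrix (row player's payoffs).
RPS = [[0.0, -1.0, 1.0], [1.0, 0.0, -1.0], [-1.0, 1.0, 0.0]]
UNIFORM = [1 / 3, 1 / 3, 1 / 3]
PURE_FIRST = [1.0, 0.0, 0.0]

print(exploitability(RPS, UNIFORM, UNIFORM))        # 0.0
print(exploitability(RPS, PURE_FIRST, PURE_FIRST))  # 2.0
```

The uniform profile is the equilibrium of this game, so it scores zero, while both sides always playing the first option can be exploited by either player.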

Our experiments tested two control scenarios and six variant scenarios in which the attacker and defender agents competed against each other.

· Experiment 1 was a control experiment without any deception.

· Experiment 2 used two decoy servers and two decoy files.

· Experiment 3 camouflaged the PII.txt and sysconfig.conf files by renaming them.

· Experiment 4 combined the features of Experiments 2 and 3, and also allowed the defender to deploy additional decoy and camouflage tactics.

· Experiment 5 was another control experiment without deception, run because the simulation software had been improved after the first four experiments.

· Experiment 6 had the defender delay the attacker to test the attacker’s perceptiveness, using tricks such as disabling the links and attachments in a phishing email to make it ineffective.

· Experiment 7 involved the attacker doing a denial-of-service attack against one part of the network to distract defenders from attacks on local servers or crawling of the Web pages. The defender could blacklist the IP address, do denial-of-service mitigations, and install tools to detect further such attacks.

· Experiment 8 had an attacker spoof a trusted network address in packets. The defender had to recognize it and block traffic from that address.

Figure 2 is the core of the defender flowchart, showing the response options based on the attack experienced. It was created in collaboration with SoarTech from parts of the MITRE ATT&CK framework (MITRE, 2020). Actions available to the agents are indicated by squares. A security condition, indicated by a circle, becomes true when the corresponding action succeeds. Security conditions are status information about the network or about an asset on the network, such as a Web server. The probability that an action succeeds depends on its complexity: more complex actions have a lower chance of success. An element of randomness simulates the uncertainty of whether cyberspace attacks and defenses will operate as intended. Security conditions resulting from the attacker’s actions can be seen by both the attacker and defender agents in the game, but defender security conditions are hidden from the attacker.
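A minimal Python sketch of these flowchart mechanics follows. The action names come from the text, but the success rule (a base probability divided by an integer complexity), the base value 0.9, and the complexity values are assumptions for illustration, not CCAT's actual rules.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    name: str
    side: str        # "attacker" or "defender"
    complexity: int  # 1 = simple; larger values are harder to execute
    produces: str    # security condition that becomes true on success


def success_probability(action: Action, base: float = 0.9) -> float:
    """Assumed rule: success chance falls as complexity rises."""
    return base / action.complexity


def attempt(action: Action, conditions: dict[str, str],
            draw: Callable[[], float] = random.random) -> bool:
    """Roll for success; on success, record the condition and which side set it."""
    if draw() < success_probability(action):
        conditions[action.produces] = action.side
        return True
    return False


def visible_to(side: str, conditions: dict[str, str]) -> set[str]:
    """Attacker conditions are seen by both sides; defender ones only by the defender."""
    if side == "defender":
        return set(conditions)
    return {name for name, owner in conditions.items() if owner == "attacker"}


if __name__ == "__main__":
    draw = random.Random(7).random  # seeded so runs are repeatable
    state: dict[str, str] = {}
    phish = Action("Spear Phishing", "attacker", 2, "WinCli user")
    watch = Action("Monitor Network Traffic", "defender", 1, "Traffic monitored")
    attempt(phish, state, draw)
    attempt(watch, state, draw)
    print("attacker sees:", visible_to("attacker", state))
    print("defender sees:", visible_to("defender", state))
```

Passing the random draw as a parameter keeps the randomness explicit, so a seeded generator can reproduce a game exactly.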

The defender’s goals are to identify the actions taken against their network and prevent the attacker from accomplishing their own goals. At the start of the game, the defender can select from the options of file monitoring, process monitoring, “Audit Logon Events”, “Audit HIPS Logs”, “Check Firewall Alerts”, and “Monitor Network Traffic” to look for downloads and uploads. They enable the defender to identify suspicious events on the network. If they find any, they can try to stop an attack from progressing, stop the exfiltration of data, or prevent a server being destroyed. Tactics available include disabling an account, resetting a password, disabling email links, tightening access to local Web servers, and blocking connections from particular addresses. They also include deception options to create decoy servers, create decoy programs, change file names, and change host names. Last resorts are reimaging the asset and blocking outside traffic from a resource or the entire network. Other options are “Enforce Robots.txt” to impede the attacker’s Web crawling and “Block IP Addresses” to prevent internal and external traffic from reaching the attacker.

An attacker flowchart was also created to represent standard cyberattacks using tactics from the MITRE ATT&CK framework (MITRE, 2020). It is significantly more complex than the defender flowchart, so we do not have room to show it here. It includes actions done both inside and outside the defender network.

Attackers start the game with a set of preparations such as “Detecting Decoy” or “Maintain IP Address”. They then do something like “Map Network” and choose an attack based on the network map. Options include spear phishing, exploiting public-facing servers, and Web crawling to inventory the Web server. If any of these actions succeeds, a security condition (an indicator that an attacker goal has been achieved) appears for the local network. For example, if a spear-phishing action succeeds, a security condition for a WinCli user becomes available.

Next, the attacker can explore local networks, users, processes, and local data. They can collect useful information like user names, personally identifiable information, and network connections. Example actions are “Discover Local Network”, “Discover User” which collects data from users including administrators, and “Get Hashes” which accesses password hashes for possible cracking. Further options are privilege escalation and lateral movement, such as “Discover Processes”, “Process Injection”, “Search Local Data”, and “Determine Decoy”. The two possible final actions are “Exfiltrate Data” and “Destroy Server”.
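The attacker flow can be viewed as actions with preconditions and effects on security conditions. This Python sketch is hypothetical: the action names come from the text, but the preconditions, the effects, and the greedy first-enabled-action policy are assumptions, and every action is assumed to succeed. The 40-step cap mirrors the game's timestep limit. Its deterministic policy also shows how an attacker plan can become predictable.

```python
from __future__ import annotations

from typing import Optional

# action name -> (required security conditions, conditions gained on success)
ATTACKER_ACTIONS = {
    "Map Network": (set(), {"network mapped"}),
    "Spear Phishing": ({"network mapped"}, {"WinCli user"}),
    "Discover Local Network": ({"WinCli user"}, {"local network known"}),
    "Get Hashes": ({"WinCli user"}, {"password hashes"}),
    "Search Local Data": ({"local network known"}, {"PII located"}),
    "Exfiltrate Data": ({"PII located"}, {"data exfiltrated"}),
}


def enabled_actions(state: set[str]) -> list[str]:
    """Actions whose preconditions hold and that would add a new condition."""
    return [name for name, (pre, post) in ATTACKER_ACTIONS.items()
            if pre <= state and not post <= state]


def greedy_plan(goal: str, max_steps: int = 40) -> Optional[list[str]]:
    """Apply the first enabled action each turn until the goal holds."""
    state: set[str] = set()
    plan: list[str] = []
    while goal not in state and len(plan) < max_steps:
        options = enabled_actions(state)
        if not options:
            return None  # no action can make further progress
        plan.append(options[0])
        state |= ATTACKER_ACTIONS[options[0]][1]
    return plan if goal in state else None


if __name__ == "__main__":
    print(greedy_plan("data exfiltrated"))
```

The greedy policy takes the unnecessary “Get Hashes” step simply because it is enabled first, and it takes the same steps every time, which is the kind of predictability a defender can exploit.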

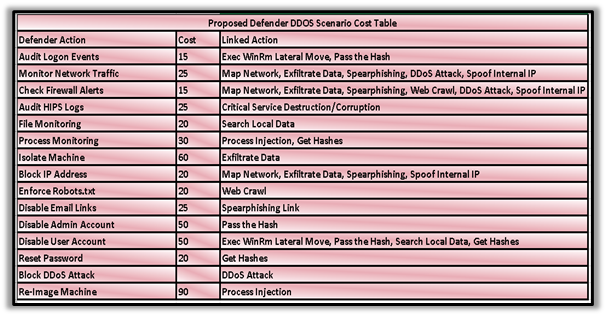

Costs and benefits are associated with each action by attacker and defender. They were carefully set while consulting SoarTech to give realism to the game. Costs are assigned to the number of steps (turns) required for an action and benefits are assigned to achievement of goals. The score for an attacker is their benefits achieved plus defender costs minus attacker costs minus defender benefits. The score for a defender is the negative of the attacker score.
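The scoring rule above can be written directly as code. This Python sketch follows the stated formula; the tally fields and the example numbers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Tally:
    """Hypothetical per-side totals accumulated over one game."""
    benefits: float = 0.0  # value of goals achieved
    costs: float = 0.0     # cost of the steps (turns) spent on actions


def attacker_score(attacker: Tally, defender: Tally) -> float:
    """Attacker benefits + defender costs - attacker costs - defender benefits."""
    return attacker.benefits + defender.costs - attacker.costs - defender.benefits


def defender_score(attacker: Tally, defender: Tally) -> float:
    """Zero-sum: the defender's score is the negative of the attacker's."""
    return -attacker_score(attacker, defender)


att = Tally(benefits=100.0, costs=30.0)
dfn = Tally(benefits=20.0, costs=40.0)
print(attacker_score(att, dfn), defender_score(att, dfn))  # 90.0 -90.0
```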

As an example, in the denial-of-service experiment 7, with actions shown in Table 1, the attacker gets a large benefit if they can gain critical-system access, but the defender’s benefit for detecting and mitigating the first attack is small. The longer the denial of service continues, the easier it is to figure out how to block it, so a small reward is assigned if the defender waits to block the attack. However, denial of service impedes the defender’s security activities during the denial, which adds to the defender’s cost.

Table 1: Example defender and attacker actions (with defender cost) in a denial-of-service camouflaging attack.

Both the attacker and defender agents were trained to be more effective, with separate training for each experiment. Training took around three weeks in parallel on a cloud service and comprised a million runs each for attacker and defender in each of the eight experiments, for 16 million training runs altogether. Training used a CentOS 7 server with an Intel Core i7-7820X processor (3.60 GHz, 8 cores), 128 gigabytes of random-access memory, and two Nvidia Tesla V100 graphics processing units with 32 gigabytes each. The tactics whose results were closest to the Nash equilibrium were chosen for final testing.

Table 2 shows average results over 100 runs of the game after training. Experiment 5 served as a separate control because experiments 5-8 were run a year later than experiments 1-4. The more negative the defender score, the better the attacker did. Compared with its control, deception increased the attacker’s costs in every experiment except the third; defender costs also increased, but not as much as attacker costs. We therefore judge the deceptions a success except in experiment 3.

The maximum number of timesteps allowed in a game was set at 40, since most cybersecurity policies would not allow an attacker to explore a system indefinitely. We did not see any significant effect of deception on the average number of timesteps, but deception required different and more complex steps for the attacker than for the defender. Most actions occurred with approximately the same frequency with deception; the exceptions were the decoy-related actions when decoys were present, and the “Check File” action.

The research reported here suggests that deceptive tactics in the cyberspace domain are a cost-effective defensive strategy. These tactics can increase the time needed to mount an attack and decrease its likelihood of success. Wargaming worked well as a technique to explore and rate deception options. As expected, however, the automated tactics found by both sides were predictable.

Table 2: Results; "avg" = average, "att" = attacker, "def" = defender, "exfil" = exfiltration, "corrupt." = corruption, “distrib” = distributed.

| Experiment | Avg. def. score | Avg. att. score | Avg. att. exfil. steps | Avg. att. corrupt. steps |
|---|---|---|---|---|
| 1, first control, no deception | -3850 | -1534 | 25.3 | 32.6 |
| 2, decoys | -1427 | -1861 | 26.3 | 33.5 |
| 3, camouflage | -4158 | -1658 | 25.1 | 32.8 |
| 4, decoys and camouflage | -1475 | -1803 | 25.4 | 32.9 |
| 5, second control, no deception | -3944 | -1551 | - | 25.7 |
| 6, delays | -3636 | -1682 | - | 26.0 |
| 7, distrib. denial of service | -3310 | -1740 | - | 29.3 |
| 8, spoofing | -3908 | -1668 | - | 25.2 |
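The comparison with the controls can be checked from Table 2 itself. This Python snippet subtracts each experiment's control average defender score from its own (experiment 1 is the control for experiments 2-4, experiment 5 for experiments 6-8). It assumes, as the conclusion about experiment 3 implies, that a less negative defender score means the defender did better.

```python
# Average defender scores copied from Table 2.
AVG_DEFENDER_SCORE = {1: -3850, 2: -1427, 3: -4158, 4: -1475,
                      5: -3944, 6: -3636, 7: -3310, 8: -3908}
# Which control each deception experiment is compared against.
CONTROL_FOR = {2: 1, 3: 1, 4: 1, 6: 5, 7: 5, 8: 5}


def change_vs_control(scores: dict, controls: dict) -> dict:
    """Positive values mean the defender scored better than in its control."""
    return {exp: scores[exp] - scores[ctrl] for exp, ctrl in controls.items()}


if __name__ == "__main__":
    for exp, delta in change_vs_control(AVG_DEFENDER_SCORE, CONTROL_FOR).items():
        print(f"Experiment {exp}: {delta:+d}")
```

Only experiment 3 comes out negative (-308), and the experiment 8 gain (+36) is small relative to the others.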

A weakness of the SoarTech approach is that it took significant work to train the agents, three weeks on experiments 5-8. This appeared necessary to ensure we had sufficiently explored the combinations of options. However, this may be overkill for both attacker and defender; usually the best options in cyber operations are well known in advance (Rowe & Rrushi, 2016). Furthermore, an important principle of deception planning is to avoid wasting effort in designing details that deceivees will not notice, and with so many options, an attacker cannot notice many of them.

Our current work is improving on this approach by writing our own program using reinforcement learning to guide the game playing. It incrementally improves choice probabilities based on the differences between outcomes and average outcomes and the degree of correlation of the action choice with the outcome. This does not require special training runs, and can give useful results with only small numbers of runs. It does not provide optimal solutions, but deception is a psychological effect that does not require high precision.
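One possible reading of this update rule is a REINFORCE-style step with a running-average baseline, sketched below in Python. It is not the authors' program: the softmax parameterization, the learning rate of 0.1, and the class and action names are assumptions. The (chosen - probability) term plays the role of the correlation between an action choice and the outcome.

```python
from __future__ import annotations

import math
import random


class ChoicePoint:
    """Hypothetical decision point holding one weight per action."""

    def __init__(self, actions: list[str], learning_rate: float = 0.1):
        self.weights = {a: 0.0 for a in actions}
        self.learning_rate = learning_rate
        self.average_outcome = 0.0
        self.games = 0

    def probabilities(self) -> dict[str, float]:
        """Softmax of the weights (shifted by the max for numerical stability)."""
        top = max(self.weights.values())
        exps = {a: math.exp(w - top) for a, w in self.weights.items()}
        total = sum(exps.values())
        return {a: e / total for a, e in exps.items()}

    def choose(self, rng: random.Random) -> str:
        probs = self.probabilities()
        return rng.choices(list(probs), weights=list(probs.values()))[0]

    def update(self, chosen: str, outcome: float) -> None:
        """Shift probability toward actions correlated with above-average outcomes."""
        advantage = outcome - self.average_outcome
        for action, p in self.probabilities().items():
            indicator = 1.0 if action == chosen else 0.0
            self.weights[action] += self.learning_rate * advantage * (indicator - p)
        # Incrementally update the running average outcome after using it.
        self.games += 1
        self.average_outcome += (outcome - self.average_outcome) / self.games


if __name__ == "__main__":
    rng = random.Random(0)
    point = ChoicePoint(["decoy", "rename"])  # hypothetical defender choices
    for _ in range(500):
        action = point.choose(rng)
        point.update(action, 1.0 if action == "decoy" else 0.0)
    print(point.probabilities())
```

Each update needs only the current game's outcome and a running average, so no separate training phase is required, in keeping with the small-number-of-runs goal described above.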

Amin, M. A. R. A., Shetty, S., Njilla, L. L., Tosh, D. K., & Kamhoua, C. A. (2020). Dynamic cyber deception using partially observable Monte‐Carlo planning framework. In Modeling and Design of Secure Internet of Things (pp. 331–355). IEEE. https://doi.org/10.1002/9781119593386.ch14

Bai, T., Bian, H., Daya, A. A., Salahuddin, M. A., Limam, N., & Boutaba, R. (2019). A machine learning approach for RDP-based lateral movement detection. 2019 IEEE 44th Conference on Local Computer Networks (LCN), 242–245. https://doi.org/10.1109/LCN44214.2019.8990853

Cayirci, E., & Ghergherehchi, R. (2011, December). Modeling cyber attacks and their effects on decision process. In Proceedings of the 2011 Winter Simulation Conference (WSC) (pp. 2627–2636). IEEE.

Clark, R., & Mitchell, W. (2019). Deception: Counterdeception and counterintelligence. Thousand Oaks, CA, US: Sage.

Drew, S., & Heinen, C. (2022, March). Testing deception with a commercial tool simulating cyberspace [M.S. thesis in Cyber Systems and Operations].

Gartzke, E., & Lindsay, J. R. (2015). Weaving tangled webs: Offense, defense, and deception in cyberspace. Security Studies, 24(2), 316–348. https://doi.org/10.1080/09636412.2015.1038188

Green, J. (2020). The fifth masquerade: An integration experiment of military deception theory and the emergent cyber domain [Master’s thesis, Naval Postgraduate School]. NPS Archive: Calhoun. http://hdl.handle.net/10945/66078

Jajodia, S., Subrahmanian, V., Swarup, V., & Wang, C. (2016). Cyber deception (Vol. 6). Springer.

Liu, Z., Li, S., He, J., Xie, D., & Deng, Z. (2012, December). Complex network security analysis based on attack graph model. In 2012 Second International Conference on Instrumentation, Measurement, Computer, Communication and Control (pp. 183–186). IEEE.

Liu, P., Zang, W., & Yu, M. (2005). Incentive-based modeling and inference of attacker intent, objectives, and strategies. ACM Transactions on Information and System Security (TISSEC), 8(1), 78–118.

Major, M., Fugate, S., Mauger, J., & Ferguson-Walter, K. (2019). Creating cyber deception games. 2019 IEEE First International Conference on Cognitive Machine Intelligence (CogMI), Los Angeles, CA, USA, 102–111. https://doi.org/10.1109/CogMI48466.2019.00023

Matthew, A. (2020). Automation in cyber-deception evaluation with deep learning. www.researchgate.net/publication/340061861_Automation_in_Cyber-deception_Evaluation_with_Deep_Learning

Miah, M. S., Gutierrez, M., Veliz, O., Thakoor, O., & Kiekintveld, C. (2020, January). Concealing cyber-decoys using two-sided feature deception games. Hawaii Intl. Conf. on Systems Sciences.

MITRE. (2020). MITRE ATT&CK. https://attack.mitre.org/

Najada, H. A., Mahgoub, I., & Mohammed, I. (2018). Cyber intrusion prediction and taxonomy system using deep learning and distributed big data processing. 2018 IEEE Symposium Series on Computational Intelligence (SSCI), 631–638. https://doi.org/10.1109/SSCI.2018.8628685

Park, Y., & Stolfo, S. J. (2012). Software decoys for insider threat. Proceedings of the 7th ACM Symposium on Information, Computer and Communications Security, 93–94.

Rowe, N. C., & Rrushi, J. (2016). Introduction to cyberdeception. Berlin: Springer International Publishing.

SoarTech Inc. (2017). https://soartech.com/cyberspace/.

Sun, J., Liu, S., & Sun, K. (2019). A scalable high fidelity decoy framework against sophisticated cyberattacks. Proceedings of the 6th ACM Workshop on Moving Target Defense, 37–46. https://doi.org/10.1145/3338468.3356826

Swarup, S., & Rezazadegan, R. (2019). Generating an agent taxonomy using topological data analysis. Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, 2204–2205.

Swiatocha, T. L. (2018). Attack graphs for modeling and simulating sophisticated cyberattacks [Thesis, Monterey, CA; Naval Postgraduate School]. https://calhoun.nps.edu/handle/10945/59599

Wang, C., & Lu, Z. (2018). Cyber deception: Overview and the road ahead. IEEE Security & Privacy, 16(2), 80–85.

Wilkens, F., Haas, S., Kaaser, D., Kling, P., & Fischer, M. (2019). Towards efficient reconstruction of attacker lateral movement. Proceedings of the 14th International Conference on Availability, Reliability and Security, 1–9.

Wright, M., Wang, Y., & Wellman, M. P. (2019, June). Iterated deep reinforcement learning in games: History-aware training for improved stability. In Proceedings of the 2019 ACM Conference on Economics and Computation (EC) (pp. 617–636).

[1] This work was supported by the U.S. Defense Intelligence Agency and the U.S. Department of Energy. Opinions expressed are those of the authors and not the U.S. Government.