REPORT DOCUMENTATION PAGE

Form Approved OMB No. 0704-0188

Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instruction, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188) Washington DC 20503.

1. AGENCY USE ONLY (Leave blank)

2. REPORT DATE

3. REPORT TYPE AND DATES COVERED: Master’s Thesis

4. TITLE AND SUBTITLE: Autonomous Agent-Based Simulation of a Model Simulating the Human Air-Threat Assessment Process

5. FUNDING NUMBERS

6. AUTHOR(S): Baris Egemen OZKAN

7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES): Naval Postgraduate School, Monterey, CA 93943-5000

8. PERFORMING ORGANIZATION REPORT NUMBER

9. SPONSORING/MONITORING AGENCY NAME(S) AND ADDRESS(ES): N/A

10. SPONSORING/MONITORING AGENCY REPORT NUMBER

11. SUPPLEMENTARY NOTES: The views expressed in this thesis are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. Government.

12a. DISTRIBUTION / AVAILABILITY STATEMENT: Approved for public release; distribution is unlimited

12b. DISTRIBUTION CODE

13. ABSTRACT (maximum 200 words)

The Air Defense Laboratory (ADL) Simulation is a software program that models the way an air-defense officer thinks in the threat-assessment process. The model uses multi-agent system (MAS) technology and is implemented in the Java programming language. This research is part of the Red Intent Project, whose ultimate goal is to implement a model that predicts the intent of any given track in the environment. For each air track in the simulation, two sets of agents are created: one controls the track’s actions, and the other predicts its identity and intent based on information received from the track, the geopolitical situation, and intelligence. The simulation can also identify coordinated actions between air tracks. We used three kinds of aircraft behavior in the simulation: civilian, friendly, and enemy. Predictor agents are constructed in a layered structure and use "conceptual blending" in their decision-making by way of mental spaces and integration networks. Mental spaces are connected to each other via connectors, and connectors trigger tickets. Connectors and Tickets were implemented using the Connector-based Multi-Agent System (CMAS) library. This thesis shows that advances in cognitive science and linguistics can be used to make software more cognitive. This simulation is one of the first to apply cognitive blending theory to a military problem. We demonstrated that, by using the CMAS library, agents can create an “integration network” composed of “mental spaces” and retrieve any mental-space data inside the network immediately, without traversing the entire network. Tests of the simulation showed that the ADL Simulation can serve as an assistant to human air-defense personnel, increasing accuracy and decreasing reaction time in naval air-threat assessment.

15. NUMBER OF PAGES: 120

16. PRICE CODE

17. SECURITY CLASSIFICATION OF REPORT: Unclassified

18. SECURITY CLASSIFICATION OF THIS PAGE: Unclassified

19. SECURITY CLASSIFICATION OF ABSTRACT: Unclassified

20. LIMITATION OF ABSTRACT: UL

NSN 7540-01-280-5500 Standard Form 298 (Rev. 2-89) Prescribed by ANSI Std. 239-18


Approved for public release; distribution is unlimited

AUTONOMOUS AGENT-BASED SIMULATION OF A MODEL SIMULATING THE HUMAN AIR-THREAT ASSESSMENT PROCESS

Baris E. OZKAN

Lieutenant Junior Grade, Turkish Navy

B.S., Turkish Naval Academy, 1998

Submitted in partial fulfillment of the

requirements for the degree of

MASTER OF SCIENCE IN COMPUTER SCIENCE

from the

NAVAL POSTGRADUATE SCHOOL

March 2004

Author: Baris Egemen OZKAN

Thesis Co-Advisor

Neil Rowe

Thesis Co-Advisor

Chris Darken

Second Reader

Peter J. Denning

Chairman, Department of Computer Science


ABSTRACT

The Air Defense Laboratory (ADL) Simulation is a software program that models the way an air-defense officer thinks in the threat-assessment process. The model uses multi-agent system (MAS) technology and is implemented in the Java programming language. This research is part of the Red Intent Project, whose ultimate goal is to implement a model that predicts the intent of any given track in the environment. For each air track in the simulation, two sets of agents are created: one controls the track’s actions, and the other predicts its identity and intent based on information received from the track, the geopolitical situation, and intelligence. The simulation can also identify coordinated actions between air tracks. We used three kinds of aircraft behavior in the simulation: civilian, friendly, and enemy. Predictor agents are constructed in a layered structure and use "conceptual blending" in their decision-making by way of mental spaces and integration networks. Mental spaces are connected to each other via connectors, and connectors trigger tickets. Connectors and Tickets were implemented using the Connector-based Multi-Agent System (CMAS) library. This simulation is one of the first to apply cognitive blending theory to a military problem. We demonstrated that, by using the CMAS library, agents can create an “integration network” composed of “mental spaces” and retrieve any mental-space data inside the network immediately, without traversing the entire network. Tests of the simulation showed that the ADL Simulation can serve as an assistant to human air-defense personnel, increasing accuracy and decreasing reaction time in naval air-threat assessment.


TABLE OF CONTENTS

I. introduction........................................................................................................ 1

a. THE air-defense laboratory Simulation............................... 1

b. motivation for the ADL Simulation............................................ 2

C. Multi-agent systems in ADL Simulation................................... 4

II. CONCEPTUAL BLENDING THEORY................................................................... 9

A. introduction to conceptual blending

theory................ 9

B. Forms............................................................................................................ 9

C. principles of blending................................................................... 10

D. networks OF SPACES in blending theory............................ 13

1. Simplex

Networks.......................................................................... 16

2. Mirror

Networks.............................................................................. 17

E. NETWORKS IN THE ADL Simulation................................................ 18

F. alternative ways to blending theory

and cmas library for adl simulation................................................................................................ 19

iII. RELATED WORK IN NAVAL AIR-THREAT

ASSESSMENT......................... 21

A. RELAted work

introduction........................................................ 21

B. adversarial plan recognItion for

airborne threats 21

C. NAVAL AIR-DEFENSE THREAT ASSESSMENT:

COGNITIVE FACTORS AND MODEL......................................................................................................................... 22

D. air-threat assessment: research, model

and display guidelines 24

E. Simulation of an Aegis Cruiser Combat

Information Center 25

F. multisensor data fusion............................................................... 27

g. multisensor data fusion............................................................... 28

H. COMParison WITH OTHER AIR-DEFENSE WORK....................... 29

IV. multi-agent systems and DESCRIPTION OF

AIR DEFENSE LABORATORY SIMULATion............................................................................................................ 31

A. program language and system

requirements for adl simulation 31

B. multi-agent systems and THE CMAS

LIBRARY...................... 31

1. Agents............................................................................................... 31

2. Connector

Based Multi-Agent Systems and CMAS Library 32

a. Connectors.......................................................................... 32

b. Tickets................................................................................... 34

c. Ticket & Connector Structures........................................ 35

d. The CMAS Library.............................................................. 36

C. MENU OPTIONS.......................................................................................... 36

D. Toolbar Options.................................................................................. 42

E. Output Panel......................................................................................... 44

F. java xml integration........................................................................ 44

V. design of the adl simulation program............................................. 47

A. Introduction.......................................................................................... 47

B. Real Track Agents............................................................................. 47

1. Civilian

Aircraft Track Agent........................................................ 48

2. Friendly

Aircraft Track Agent...................................................... 49

3. Enemy

Aircraft Track Agent......................................................... 50

4. Coordinated

Detachment Attack Track Agent........................ 52

5. Missile

Attack Track Agent........................................................... 53

6. Missile

Track Agent........................................................................ 54

7. User

derived Track Agent............................................................ 54

C. Reactive Agents.................................................................................. 54

1. Airlane

Reactive Agent.................................................................. 55

2. ESM

Reactive Agent...................................................................... 56

3. Heading-change

Reactive Agent............................................... 57

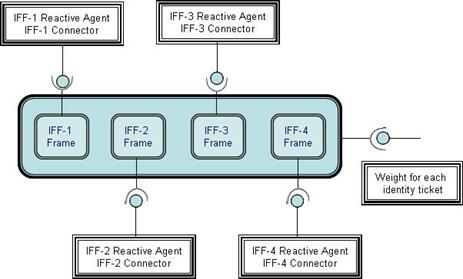

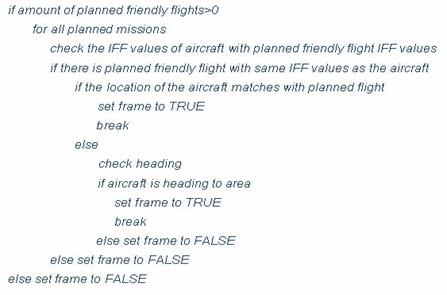

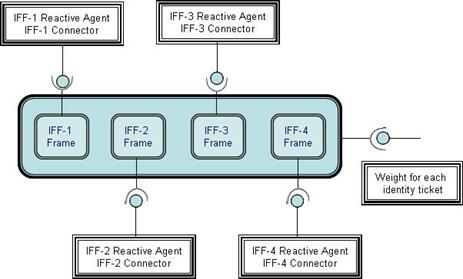

4. IFF

Reactive Agents....................................................................... 57

5. Max

Acceleration, Altitude, and Speed Reactive Agents..... 58

6. Origin

Reactive Agent................................................................... 60

7. Radar

Status Reactive Agent...................................................... 60

8 Random

Number Finder Reactive Agent................................. 60

9. Snooper

Detector Reactive Agent............................................. 62

D. Predictor Track Agents................................................................ 63

1. Predictor

Agent Connectors and Queries............................... 64

2. Predictor

Agent Competing Models.......................................... 66

3. Predictor

Agent Tickets................................................................ 66

4. Weighting

Procedure for the Predictor Track Agent............. 71

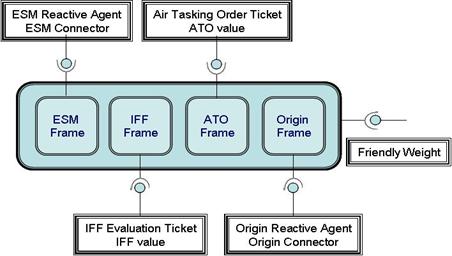

a. Weighting the Civilian Ticket............................................ 72

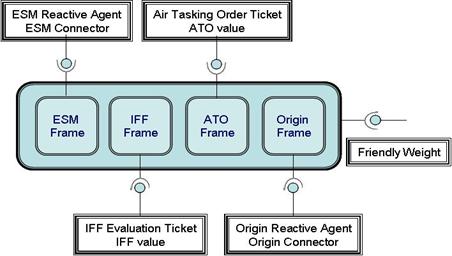

b. Weighting the Friendly Ticket.......................................... 72

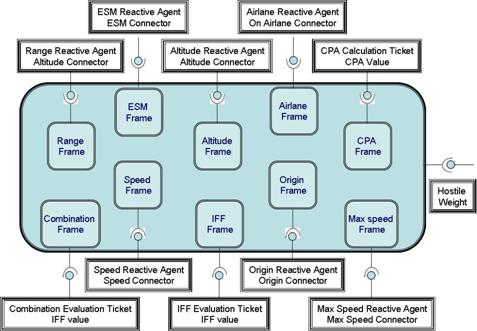

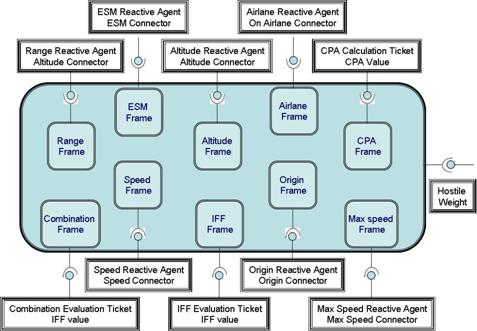

c. Weighting the Hostile Ticket............................................ 73

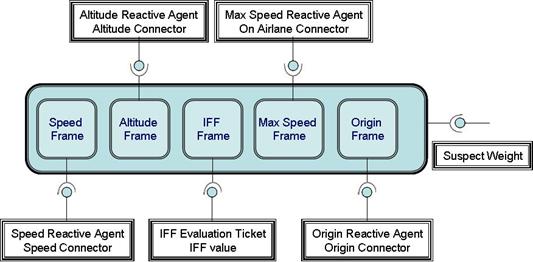

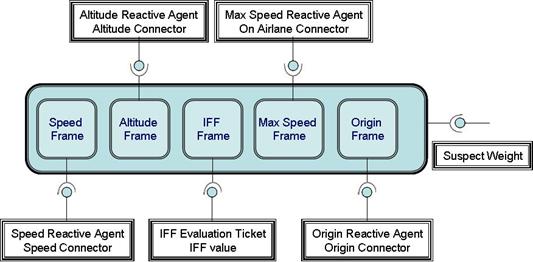

d. Weighting the Suspect Ticket.......................................... 75

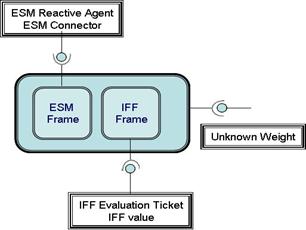

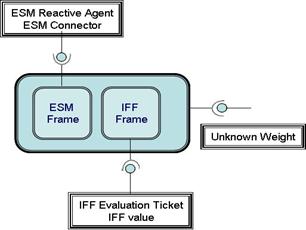

e. Weighting the Unknown Ticket....................................... 75

f. Execution of the ATO Ticket............................................ 75

g. Execution of IFF Evaluation Ticket................................ 76

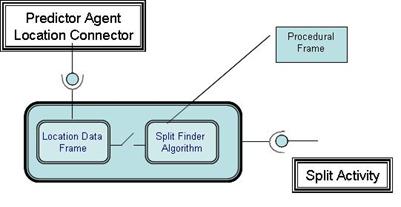

h. Execution of CPA Ticket................................................... 77

i. Split Activity Detection...................................................... 77

E. THE Regional Agent........................................................................... 78

f. Blending theory and the ADL Simulation............................ 81

VI. RESEARCH QUESTIONs RESULTS AND THE EVAULATION OF THE

SIMULATION 85

a. Research Questions......................................................................... 85

1. Overview........................................................................................... 85

1. General

Testing Methodology..................................................... 85

b. the level of reality of the ADL

simulation....................... 86

C. the accuracy of the decisions by the

adl simulation 87

D. the level of closeness of decisions

given by the model to the decisions given by the experts................................................. 90

VII. FUTURE WORK AND DEVELOPMENT OF THE AIR

DEFENSE LABoRATORY SIMULATOR..................................................................................................................................... 91

a. Future work introduction.......................................................... 91

b. Development of the environment........................................... 91

c. Integrated knowledge transfer........................................... 91

d. THE adl simulation as a training tool................................... 92

e. implementing THE adl simulation WITH

bayesian methodS 93

VIII. SUMMARY AND CONCLUSION.......................................................................... 95

list of references.................................................................................................... 97

INITIAL DISTRIBUTION LIST......................................................................................... 101


LIST OF FIGURES

Figure 1. ADL

Simulation Interface................................................................................ 2

Figure 2. ADL

Simulation MAS Layout......................................................................... 5

Figure 3. Conceptual

Blending (After [1]).................................................................... 11

Figure 4. Conceptual

Integration Network................................................................... 14

Figure 5. Simplex

Network (After [1]).......................................................................... 16

Figure 6. Mirror

Network (After [1]).............................................................................. 18

Figure 7. Cognitively-Based

Model of Threat Assessment (From [20]).................. 23

Figure 8. Threat

Assessment Model (From [22])....................................................... 24

Figure 9. Contact

Classification Artificial Neuron (From [24]).................................. 26

Figure 10. Connector

States.......................................................................................... 33

Figure 11. Visual

Design of the ADL Simulation.......................................................... 37

Figure 12. Tactical

Figure Control Panel...................................................................... 38

Figure 13. Track

Generator Panel................................................................................. 38

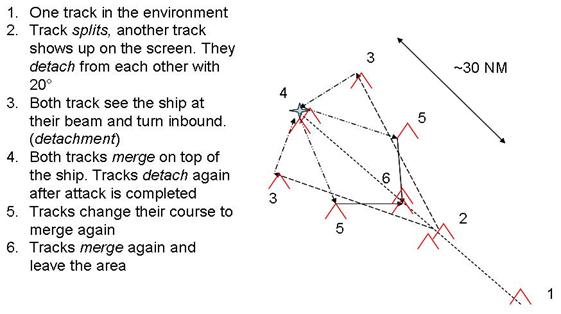

Figure 14. Coordinated

Detachment Attack................................................................. 39

Figure 15. Evaluation

of Model Panel............................................................................ 41

Figure 16. Track

Info pages............................................................................................ 42

Figure 17. Regional

Agent and Track Agent Connectors Frames............................. 43

Figure 18. Competing

Models Frame........................................................................... 43

Figure 19. Simulation

Output Panel............................................................................... 44

Figure 20. Coordinated

Detachment Attack Profile..................................................... 53

Figure 21. ADL

Simulation Layered Structure.............................................................. 55

Figure 22. ADL

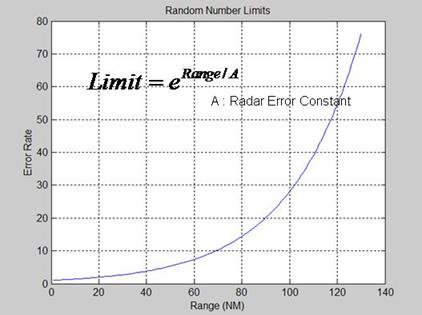

Random Number Finder Reactive Agent Equation.......................... 61

Figure 23. Snooper

Detector Reactive Agent Equation.............................................. 63

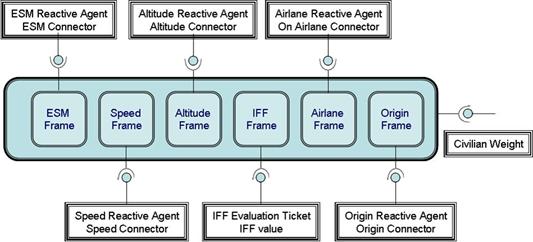

Figure 24. Civilian

Ticket and Frames.......................................................................... 67

Figure 25. A

Friendly Ticket and Frames...................................................................... 68

Figure 26. Hostile

Ticket and Frames........................................................................... 68

Figure 27. Suspect

Ticket and Frames......................................................................... 69

Figure 28. Unknown

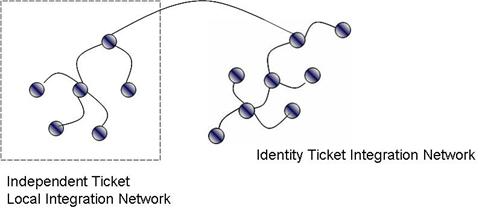

Ticket and Frames....................................................................... 69

Figure 29. Connecting

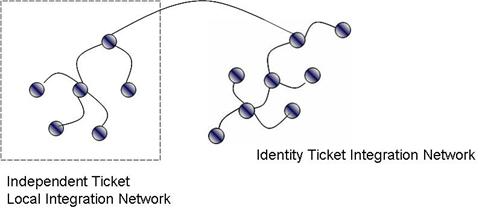

Local Independent Ticket Integration Network to Identity Integration Network 70

Figure 30. IFF

Evaluation Independent Ticket.............................................................. 71

Figure 31. Evaluation

of ATO Frame of Hostile Ticket............................................... 76

Figure 32. Predictor

Agent Split Activity Detector Ticket............................................ 77

Figure 33. Regional

Agent Snooper Detector Ticket.................................................. 80

Figure 34. Merge

Detector Blending Operation........................................................... 81


LIST OF TABLES

Table 1. Default Classification Threshold Values (From [24])

Table 2. Scoring (Weighted) Values for Various Input Cues (From [24])

Table 3. Civilian Aircraft Attributes and Behaviors

Table 4. Friendly Aircraft Missions [28]

Table 5. Friendly Aircraft Attributes and Behaviors

Table 6. Enemy Aircraft Missions [28]

Table 7. Enemy Aircraft Attributes and Behaviors

Table 8. ESM Reactive-agent Messages to Track Agent

Table 9. IFF System Modes and Concepts

Table 10. Sample Error Percentage Limits

Table 11. Predictor Track Agent Connectors and Queries

Table 12. Regional Agent Connectors and Queries

Table 13. Scenarios Used in the Simulation Test and Analysis

Table 14. Civilian Aircraft ID Results of Tests

Table 15. Friendly Aircraft ID Results of Tests

Table 16. Hostile Aircraft ID Results of Tests


ACKNOWLEDGMENTS

This thesis is the culmination of my military experience and education. As an Anti-Air Warfare Officer (AAWO) of TCG ORUCREIS (F-245) and a master’s degree student at the Naval Postgraduate School, I was able to blend theory with application from my personal experience in this thesis. I have combined my naval air-defense warfare experience with the computer science knowledge that I gained at the Naval Postgraduate School. I am grateful to everyone who helped me achieve my goals, especially my senior officers who taught me air warfare concepts, my air-defense team on the ship, and all of my instructors at the Naval Postgraduate School. Special thanks to Prof. John Hiles, Prof. Neil C. Rowe, and Prof. Chris Darken.

I would also like to thank my family for their endless support and for being in my life. I am so grateful to my mom for showing me how to be strong and face the struggles of life, as she did by beating two different cancers. I am very thankful to my father for inspiring me to one day be as great a father as he is. I am also thankful to Basar, my brother, for being the best brother imaginable.

I would also like to thank my girlfriend, Jennifer M. Courtney, for joining my life and sharing her life with me. Her help and support were key to the completion of my thesis.


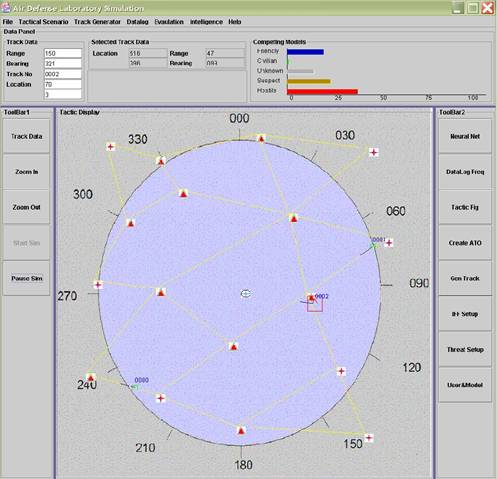
I. INTRODUCTION

A. THE AIR-DEFENSE LABORATORY SIMULATION

The Air Defense Laboratory (ADL) Simulation is a software program that simulates the threat-assessment process of an Anti-Air Warfare (AAW) Officer in the Combat Information Center (CIC) of a frigate performing air defense. ADL is a software cognitive model implemented in the Java programming language. It is user-interactive: users can manipulate input data and create realistic air-defense scenarios. The program simulates the mental processes an AAW Officer performs in the threat-assessment phase. It uses multi-agent systems technology and the Connector Based Multi-Agent Systems (CMAS) Library written by the Integrated Asymmetric Goal Organization (IAGO) team at the Naval Postgraduate School. The cognitive model implements Conceptual Blending Theory as proposed by Turner and Fauconnier [1].

The ADL Simulation has two goals, one short-term and one long-term. In the short term, the simulation aims to help air-defense teams gain insight into air-threat assessment and to support the team’s decision-making under stressful conditions. The air-defense crew can query the simulation about a specific air track and receive advice and predictions about the track’s possible intentions. Unlike most other approaches, which present only the final decision, the ADL Simulation also tells the user why each step toward a decision was taken, by traversing backward through each node of the "integration network" created in the model. In the long term, the ADL Simulation aims to improve the decision-making and air-threat assessment performed by the Anti-Air Warfare Officer. In current naval practice, unmanned sensor vehicles are used primarily for reconnaissance. In the future, when cognitive models like that of the ADL Simulation are embedded in these vehicles, the vehicles would be able to make decisions and take actions in the field. This would save considerable time, money, and personnel. It would also reduce the need to place humans in dangerous situations, along with the loss of life and other risks that accompany those decisions.
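To illustrate how backward traversal can produce an explanation, the following Java sketch models each node of an integration network as a mental space that records its conclusion and the input spaces it was blended from; walking from the final blend back to its inputs yields the chain of reasons. The class `MentalSpace`, its fields, and the example cues are hypothetical and do not reproduce the ADL Simulation’s or the CMAS library’s actual types.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical integration-network node: a mental space holding a conclusion
// and references to the input spaces it was blended from.
final class MentalSpace {
    final String conclusion;
    final List<MentalSpace> inputs;

    MentalSpace(String conclusion, MentalSpace... inputs) {
        this.conclusion = conclusion;
        this.inputs = List.of(inputs);
    }

    // Walks backward from this space through its inputs (depth-first) and
    // returns the reasons, most derived conclusion first, indented by depth.
    List<String> explain() {
        List<String> trace = new ArrayList<>();
        collect(this, 0, trace);
        return trace;
    }

    private static void collect(MentalSpace space, int depth, List<String> trace) {
        trace.add("  ".repeat(depth) + space.conclusion);
        for (MentalSpace input : space.inputs) {
            collect(input, depth + 1, trace);
        }
    }
}

class ExplanationDemo {
    public static void main(String[] args) {
        // Illustrative cues only; not the ADL Simulation's actual inputs.
        MentalSpace noIff = new MentalSpace("No IFF response");
        MentalSpace offAirlane = new MentalSpace("Track is outside civil airlanes");
        MentalSpace hostile = new MentalSpace("Assess as HOSTILE", noIff, offAirlane);
        hostile.explain().forEach(System.out::println);
    }
}
```

Running `ExplanationDemo` prints the hostile assessment first, followed by its two supporting spaces indented one level. A depth-first walk is used here only because it keeps the indentation of the trace aligned with the structure of the network.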

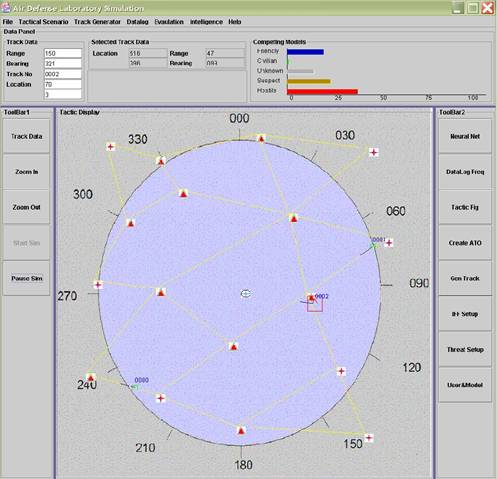

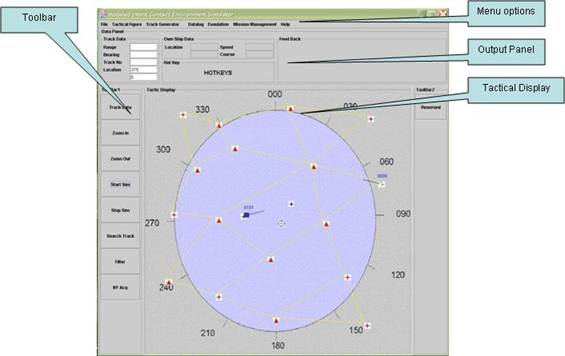

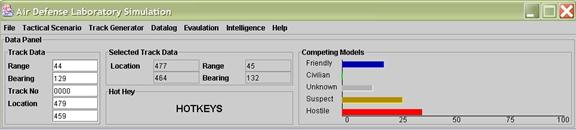

Figure 1. ADL Simulation Interface
B. MOTIVATION FOR THE ADL SIMULATION

Naval air warfare is the most rapid and intense form of traditional warfare, and if not properly executed it can result in severe destruction. The attacker has the advantages of speed and of flexibility in the axis, direction, and timing of the attack. In a well-coordinated attack, a ship’s self-defense systems may also be saturated with overwhelming data. A naval unit must therefore train its air-defense teams expertly and maximize their proficiency.

Currently there are two types of training for air-defense teams: training with actual aircraft at sea and training with simulated tracks. Even though simulators are well designed and represent the reality of air warfare, the air-defense team knows they are only simulations, so they do not provide a realistic atmosphere or the stress factors of real operations. Realistic training requires actual aircraft and other relevant training components, a large amount of resources, and pre-coordination between the air assets and the naval unit. A naval force without an aircraft carrier has to arrange all of this coordination itself. Most of the time, training with real air assets is short because of the aircraft’s limited flight time. This motivates the need for new simulators that better represent the real world and assist air-defense teams in naval air warfare.

Another way to increase success in defensive air warfare, and to compensate for the disadvantage of being on the defensive side, is to assist the air-defense team through the command-and-control systems in the CIC. These embedded systems support the air-defense crew in threat assessment by showing threat priorities, sorting threats by priority, and reminding the team of the Anti-Ship Missile Defense (ASMD) procedures. A great deal of work has been done to increase the efficiency of the supporting software embedded in command-and-control systems, especially after two high-profile incidents involving USS Stark and USS Vincennes. On May 17, 1987, an Iraqi Mirage F-1 fighter took off from Iraq's Shaibah military airbase. It was detected by both USS Stark and an Airborne Warning and Control System (AWACS) aircraft. The Mirage released its missiles and turned north, but neither USS Stark nor the AWACS detected the missiles. On July 3, 1988, just over a year later, USS Vincennes shot down an Iranian civilian airliner carrying 290 people after falsely identifying it as an attacking aircraft. The reports released after these two events clearly revealed the importance of the human factor in air defense. In both incidents, poor situational awareness and incorrect decisions about the situation had catastrophic results.

After these incidents, U.S. Navy research focused on assisting humans in air defense through artificial intelligence (AI) and display technologies. The ADL Simulation deals mostly with AI and aims to assist the air-defense crew. Post-Stark and post-Vincennes AI research supporting air-defense teams is discussed in Chapter III.

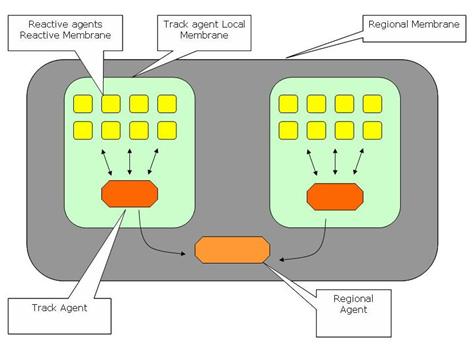

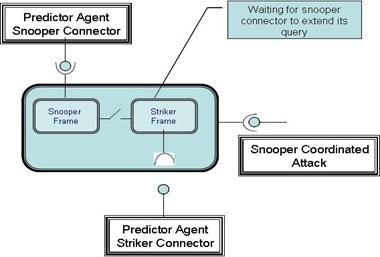
C. MULTI-AGENT SYSTEMS IN ADL SIMULATION

The ADL Simulation uses Multi-Agent System (MAS)

technology. “A basic Multi Agent System (MAS) is an electronic or computing

model made of artificial entities that communicate with each other and act in

an environment.” [2] Agents are autonomous software elements operating and

interacting with each other in that environment.

A MAS comprises six components: an environment (E), which is the space where agents operate; the objects (O) in the environment; actors (A); relations (R) between actors and the environment and between agents; operations (Ops) executed by actors; and the laws (Laws) of the environment.

MAS = {E, O, A, R, Ops, Laws}
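The six-component definition can be written directly as a data type. The Java record below is a minimal sketch under stated assumptions: components are represented as plain strings, and the laws are modeled as predicates that every operation must satisfy, an interpretation chosen here for illustration rather than taken from [2]. `MultiAgentSystem`, `Operation`, and `permits` are hypothetical names.

```java
import java.util.Set;
import java.util.function.Predicate;

// One operation executed by an actor, e.g. actor "track-1" performing "turn".
record Operation(String actor, String name) {}

// Illustrative encoding of MAS = {E, O, A, R, Ops, Laws}.
record MultiAgentSystem(
        String environment,             // E: the space where agents operate
        Set<String> objects,            // O: passive objects in E, e.g. sensors
        Set<String> actors,             // A: the agents that act
        Set<String> relations,          // R: links among actors, objects, and E
        Set<Operation> operations,      // Ops: operations executed by actors
        Set<Predicate<Operation>> laws  // Laws: constraints every operation must obey
) {
    // Assumption: an operation is permitted only if its actor belongs to A
    // and it satisfies every law of the environment.
    boolean permits(Operation op) {
        return actors.contains(op.actor())
                && laws.stream().allMatch(law -> law.test(op));
    }
}

class MasDemo {
    public static void main(String[] args) {
        // Hypothetical law: no physically impossible moves.
        Predicate<Operation> physicallyPossible = op -> !op.name().equals("teleport");
        MultiAgentSystem mas = new MultiAgentSystem(
                "airspace around the ship",
                Set.of("ship radar"),
                Set.of("track-1"),
                Set.of("ship radar observes track-1"),
                Set.of(new Operation("track-1", "turn")),
                Set.of(physicallyPossible));
        System.out.println(mas.permits(new Operation("track-1", "turn")));     // true
        System.out.println(mas.permits(new Operation("track-1", "teleport"))); // false
    }
}
```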

Agents in a MAS receive input from the environment, process it, and produce output, which they eventually release back into the environment. The environment may contain more than one agent and provides communication facilities to all of them. An agent’s architecture is usually formulated as a sense-process-act cycle.
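A sense-process-act cycle can be sketched in a few lines of Java. In this hypothetical example the environment is a shared message board: each agent reads the board (sense), computes an output (process), and posts it back (act). `Environment`, `Agent`, and `IffWatcher` are illustrative names only, not classes from the ADL Simulation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical shared environment: agents read percepts from it and post outputs to it.
final class Environment {
    private final List<String> board = new ArrayList<>();

    List<String> read() {
        return List.copyOf(board);
    }

    void post(String message) {
        board.add(message);
    }
}

// Every agent runs the same three-step cycle; subclasses supply only the processing.
abstract class Agent {
    final void step(Environment env) {
        List<String> percepts = env.read();   // sense
        String output = process(percepts);    // process
        if (output != null) {
            env.post(output);                 // act
        }
    }

    abstract String process(List<String> percepts);
}

// Example agent: raises an alert when it perceives a track with no IFF reply.
final class IffWatcher extends Agent {
    @Override
    String process(List<String> percepts) {
        return percepts.contains("track-7: no IFF reply") ? "alert: track-7 unidentified" : null;
    }
}

class SenseProcessActDemo {
    public static void main(String[] args) {
        Environment env = new Environment();
        env.post("track-7: no IFF reply");
        new IffWatcher().step(env);
        System.out.println(env.read());
        // prints [track-7: no IFF reply, alert: track-7 unidentified]
    }
}
```

Keeping `step` final and letting subclasses override only `process` fixes the sense-process-act order for every agent type.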

The environment in the ADL Simulation is the airspace containing air assets and an air-defense ship equipped with adequate air-defense sensors. One class of objects is the ship’s sensors, which provide input to the threat assessors. There are two main kinds of agents in the ADL Simulation: real-track agents (offensive) and predictor agents (defensive). Real-track agents control aircraft activities according to the type of aircraft. Predictor agents receive the sensory data produced by real-track agents and predict the identity and possible intent of the aircraft. Predictor agents are designed in a layered architecture. Reactive agents reside at the bottom layer; each is responsible for one factor in air-threat assessment, as discussed in detail in Chapters III and V. Track agents sit above the reactive agents and combine all the information the reactive agents provide. Regional agents sit above the track agents and identify coordinated activities between air tracks. Communication between agents is provided by connectors implemented with the CMAS library, as discussed in detail in Chapter V.
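The three prediction layers can be sketched as follows. This is a simplified illustration, not the thesis’s design: each hypothetical reactive agent scores one factor between 0 and 1, the track agent simply averages those scores (the ADL Simulation’s own weighting procedure is described in Chapter V), and the regional agent treats tracks whose headings agree within a tolerance as a possible coordinated group. The factor names, the averaging rule, and the heading test are all assumptions.

```java
import java.util.List;
import java.util.function.ToDoubleFunction;

// Hypothetical sensor picture of one air track.
record Track(String id, boolean iffReply, boolean inAirlane, double headingDeg) {}

// Bottom layer: a reactive agent scores one threat-assessment factor in [0, 1].
record ReactiveAgent(String factor, ToDoubleFunction<Track> score) {}

// Middle layer: a track agent combines the scores of its reactive agents.
final class TrackAgent {
    private final List<ReactiveAgent> reactiveAgents;

    TrackAgent(List<ReactiveAgent> reactiveAgents) {
        this.reactiveAgents = List.copyOf(reactiveAgents);
    }

    // Plain average of the factor scores (an assumption, not the ADL weighting).
    double threatScore(Track track) {
        return reactiveAgents.stream()
                .mapToDouble(agent -> agent.score().applyAsDouble(track))
                .average()
                .orElse(0.0);
    }
}

// Top layer: a regional agent looks across tracks for coordinated activity.
final class RegionalAgent {
    // Flags two or more tracks whose headings all lie within toleranceDeg of
    // the first track's heading (a deliberately crude coordination test).
    boolean coordinated(List<Track> tracks, double toleranceDeg) {
        if (tracks.size() < 2) {
            return false;
        }
        double reference = tracks.get(0).headingDeg();
        return tracks.stream()
                .allMatch(t -> angularGap(t.headingDeg(), reference) <= toleranceDeg);
    }

    // Smallest angle between two headings, handling the 359/1 degree wraparound.
    private static double angularGap(double a, double b) {
        double d = Math.abs(a - b) % 360.0;
        return Math.min(d, 360.0 - d);
    }
}

class LayeredDemo {
    public static void main(String[] args) {
        TrackAgent trackAgent = new TrackAgent(List.of(
                new ReactiveAgent("IFF", t -> t.iffReply() ? 0.0 : 1.0),
                new ReactiveAgent("Airlane", t -> t.inAirlane() ? 0.0 : 1.0)));
        Track t1 = new Track("T1", false, false, 355.0);
        Track t2 = new Track("T2", true, false, 5.0);
        System.out.println(trackAgent.threatScore(t1)); // 1.0
        System.out.println(trackAgent.threatScore(t2)); // 0.5
        System.out.println(new RegionalAgent().coordinated(List.of(t1, t2), 10.0)); // true
    }
}
```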

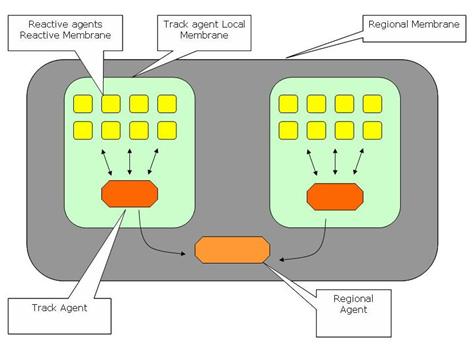

Figure 2. ADL Simulation MAS Layout

Predictor agents determine the identity and possible intentions of each aircraft. There are four kinds of aircraft in the simulation: civilian, military friendly, military hostile, and user-defined. Civilian and friendly aircraft take no hostile action and are generated randomly by the Track Manager. User-defined aircraft are generated through the user interface.

In air warfare, the time between the initial detection of an air track and the moment it becomes a threat to the ship is short compared with other kinds of warfare. This forces the air-defense team to evaluate all the sensory data, including aircraft kinematics, track history, the geopolitical situation, and intelligence, both quickly and carefully. The team must then synthesize this information, decide on the identity of the aircraft, and take appropriate action. Actions are constrained by the Rules of Engagement (ROE). Because the ROE are strict guidelines, actions are usually based on the identities of the contacts of interest. The main task is therefore to identify the air tracks and then consult the ROE to take the proper action. Wrong identification leads to wrong action, as in the USS Vincennes incident. Air warfare leaves limited time to make the right decision. Today’s technology has given us computers of ever-increasing speed that can help meet the time constraints of air warfare. Computers are also indifferent to stress, which would eliminate sources of human error such as fatigue, wrong decisions under stress, and lack of experience. In his master’s thesis at the Naval Postgraduate School, Calfee modeled the impact of fatigue, stress, and experience on humans in air defense using software decision-making processes. His results clearly show the impact of human-related deficiencies on air warfare. For these reasons, the ADL Simulation uses computers to help air-defense teams identify tracks and take correct actions.
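Because the ROE key actions to identity, the decision can be pictured as a two-stage pipeline: identify first, then look up the action. The Java sketch below makes this dependency explicit with a table lookup, which also shows why an identification error propagates directly into a wrong action. The five identities mirror the identity tickets listed for the predictor agent in Chapter V; the action strings are hypothetical placeholders and are not actual ROE.

```java
import java.util.EnumMap;
import java.util.Map;

// Identity categories, mirroring the predictor agent's identity tickets.
enum Identity { CIVILIAN, FRIENDLY, UNKNOWN, SUSPECT, HOSTILE }

// Hypothetical ROE table: the action depends only on the assigned identity.
final class RoeTable {
    private final Map<Identity, String> actions = new EnumMap<>(Identity.class);

    RoeTable() {
        // Placeholder actions for illustration; not actual rules of engagement.
        actions.put(Identity.CIVILIAN, "monitor");
        actions.put(Identity.FRIENDLY, "monitor");
        actions.put(Identity.UNKNOWN, "query and warn");
        actions.put(Identity.SUSPECT, "warn and prepare defenses");
        actions.put(Identity.HOSTILE, "engage as ROE permit");
    }

    // Stage two of the pipeline: once identity is fixed, the action is a lookup.
    String actionFor(Identity identity) {
        return actions.get(identity);
    }
}

class RoeDemo {
    public static void main(String[] args) {
        RoeTable roe = new RoeTable();
        // The same airliner, identified correctly and then incorrectly.
        System.out.println(roe.actionFor(Identity.CIVILIAN)); // monitor
        System.out.println(roe.actionFor(Identity.HOSTILE));  // engage as ROE permit
    }
}
```

In this sketch nothing about the track changes between the two calls except the assigned identity, yet the action changes completely, which is the failure mode the text attributes to wrong identification.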

The ADL Simulation differs from previous research in that it uses conceptual blending theory for its cognitive model. This theory explains how the human brain constructs meaning. It was developed primarily in linguistics, and all the examples provided by Turner and Fauconnier come from that field. The ADL Simulation is a software implementation of Blending Theory in a scientific field outside linguistics. The ADL Simulation benefits from using the CMAS library to simplify its modeling. Part of the library is support for Connectors and Tickets. Connectors are communication devices between agents in the environment; they let us map real-world scenarios into software and create the integration network of Blending Theory. Connectors in the CMAS library enable agents in the environment to communicate with each other without a direct relation or a global controller. Tickets are procedural instructions for agents and data-organizing systems. Connectors and Tickets are discussed in Chapter IV. By using these techniques to anticipate the intent of an air contact, the simulation comes close to the way human CIC personnel create meaning that integrates the intent and the possible threat of air contacts.
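The following Java sketch shows agents communicating through named connectors rather than through direct references. It assumes a simple publish-subscribe model. The classes `Connector` and `AgentEnvironment` and their methods are hypothetical and are not the CMAS library's actual API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical stand-in for a CMAS-style connector; this is NOT the CMAS API.
class Connector<T> {
    private final List<Consumer<T>> listeners = new ArrayList<>();
    private T value;

    // An agent registers interest without knowing which agent will write the value.
    synchronized void subscribe(Consumer<T> listener) {
        listeners.add(listener);
    }

    // Writing a value notifies every subscriber; the writer holds no reference to them.
    void set(T newValue) {
        List<Consumer<T>> snapshot;
        synchronized (this) {
            value = newValue;
            snapshot = new ArrayList<>(listeners);
        }
        for (Consumer<T> listener : snapshot) {
            listener.accept(newValue);
        }
    }

    synchronized T get() {
        return value;
    }
}

// Hypothetical environment: agents look connectors up by name. The registry only hands
// out connectors; it does not route messages, and no agent references another agent.
class AgentEnvironment {
    private final Map<String, Connector<?>> connectors = new HashMap<>();

    @SuppressWarnings("unchecked")
    synchronized <T> Connector<T> connector(String name) {
        return (Connector<T>) connectors.computeIfAbsent(name, n -> new Connector<Object>());
    }

    public static void main(String[] args) {
        AgentEnvironment env = new AgentEnvironment();
        // A predictor agent listens for speed reports on a named connector.
        env.<Double>connector("track1.speed")
           .subscribe(v -> System.out.println("predictor received speed " + v));
        // A reactive sensor agent writes to the same connector, knowing only its name.
        env.<Double>connector("track1.speed").set(450.0);
    }
}
```

The sensor agent writes only to the name `track1.speed`. Listening agents can therefore be added or removed without changing the writer, which is the kind of decoupling the paragraph above attributes to Connectors.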

The results of the ADL Simulation showed that the agents in the simulation created an integration network whose nodes are mental spaces containing instantaneous information. The agents made their decisions the way a human air-defense officer did, in a shorter time and with the same accuracy. The results opened a way to extend this project to its second goal. Using the CMAS library was key to developing the simulation and to extending it to the second goal.


Conceptual Blending Theory, proposed by Gilles Fauconnier and Mark Turner, is a theory of reasoning in the human brain [3]. How do we understand the things happening around us? How do we give meaning to events? How do we combine multiple actions? For many years scientists and non-scientists alike have been searching for the answers. The short explanation involves evolution, an ongoing process spanning millions or even billions of years. Most people use their reasoning ability to make sense of the events around them without wondering how they are able to do so. We take advantage of the fact that we can think rather than questioning why we can. Conceptual blending theory is one way of explaining how we think, give meaning to what is happening around us, integrate this information, learn, and eventually gain experience with age. Blending is the key to this theory. Humans are constantly blending as they talk, imagine, listen, and perform every other action imaginable. Blending is the integration of processes that takes place in our minds during all these activities.

Forms are the most common way to represent things around us. One of the most commonly used forms is language. People communicate with each other through complex systems of forms known as languages. These may be spoken languages such as English, German, or Spanish, or symbolic languages such as Morse code, flags, searchlights, ASL (American Sign Language), or even smoke signals. We construct sentences; sentences are composed of words, words consist of letters, and letters are nothing but small points and lines drawn in a particular shape with an associated phoneme. What makes everyone agree that “a” is “a” does not come from the nature of the letter itself, which is just a combination of points and lines. It is actually the “form” that we wrap around the letter. Since everyone who reads a language written in the Latin alphabet knows this form, “a” has the same meaning to everyone who recognizes the form. For people who do not know this particular form, the letter “a” does not make any sense; similarly, for people who do not know the searchlight language, it is just a blinking light, not an “SOS” signal.

Considering symbols as a method of communication, there are diverse forms that we use in daily life. Many of these symbols go unnoticed because they are universal. Forms do not carry meaning themselves. The human brain works to recognize the regularities in these forms, assign them meanings, and eventually store these meanings.

We associate form "wrappers" with real-world meanings, which prompts a similar meaning in our brains. On the other hand, two people may give entirely different meanings to the same sentence. What makes them think differently even though the input is the same? The answer brings us to Turner and Fauconnier’s “Mind’s Three I’s: Identity, Integration and Imagination”. The difference could come from any combination of three things: the two people could identify the input differently, integrate the inputs in different ways, or end up with dissimilar emergent structures in their brains because of their different background experience. For that reason, forms are useful but do not explain everything. There must be another way to explain how we make meaning. The answer comes from the cognitive scientists and linguists who developed Conceptual Blending Theory.

Conceptual Blending Theory is a complex theory that

explains how humans process the information coming from the environment. “Conceptual Blending is a set of operations for

combining cognitive models in a network of mental spaces.” [4]

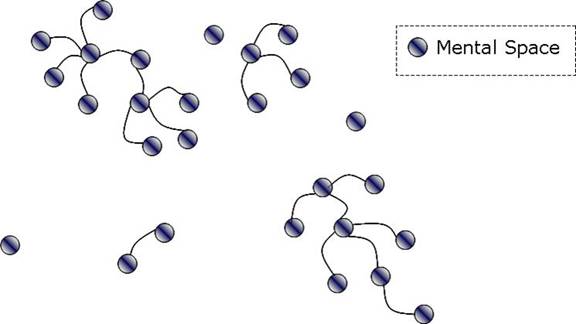

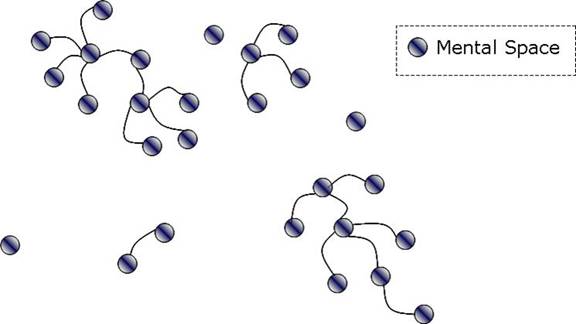

Mental spaces are the principal entities involved in conceptual blending. In the simplest blend operation, there are three types of mental space:

·

Input space

·

Generic space

·

Blend space [5]

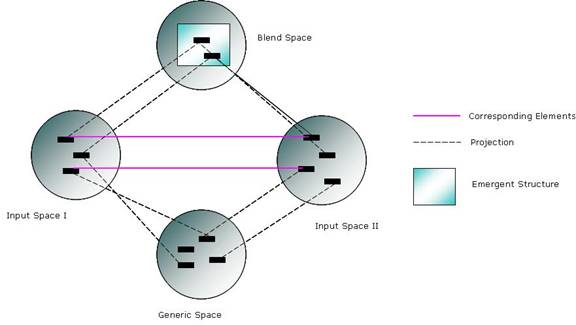

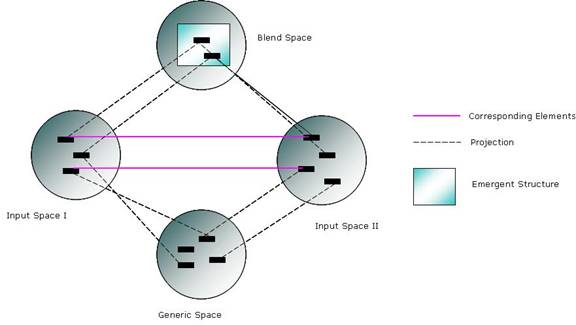

Figure 3.

Conceptual Blending (After [1])

Mental spaces are conceptual containers that appear to be built on the fly as we talk, listen, remember, imagine, and think [6]. Turner and Fauconnier call these containers “conceptual packets”. A mental space contains information about a particular domain. Its elements represent the entities involved in whatever we are thinking about or doing. They may be related to elements inside other spaces and may be selectively projected into a "blend space", as shown above. In Figure 3, mental spaces are represented by circles, black rectangles represent elements, lines between elements represent relations between elements, and dashed lines represent projections from one mental space to another.

Blending is an inference method operating on mental spaces. There may be more than two input spaces in a blend operation. The generic space contains the elements common to the input spaces, as well as the general rules and templates for the inputs. The elements of the generic space can be mapped onto the input spaces. The blend space is where the emergent structure occurs. The elements projected from each input space and from the generic space create an emergent structure in the blend space, possibly something not found in any input space. The structure in the blend space may in turn be an input to another blend operation, as controlled by an integration network. A new emergent structure may contain not only elements from the input spaces and the generic space but also new emergent elements that exist in none of them [7].

Blending involves three operations:

·

Composition

·

Completion

·

Elaboration

Composition involves relating an element of one input space to an element of another. These relations are called “vital relations”. This matching generally occurs under a "frame". Completion is pattern completion, in which the generic space is involved in the blending operation. If the elements from both input spaces match the information stored in the generic space, a more sophisticated type of inference can be made, a generalization of reasoning by analogy. This is where we use long-term memory and build up experience. Elaboration is the operation that creates an emergent structure in the blend space after composition and completion; it is also called running the blend [8].
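The three operations can be sketched in Java as follows. This is a hypothetical illustration, not the ADL Simulation's code. The sketch assumes that a mental space is a map from roles to numeric values. Composition pairs elements that fill the same role, standing in for a vital relation. Completion checks those pairs against the roles held in a generic space. Elaboration then applies a rule from the generic space to produce an emergent element, here "closing", that appears in neither input.

```java
import java.util.*;
import java.util.function.Function;

// Hypothetical model: a mental space is a named set of role -> value elements.
class MentalSpace {
    final String name;
    final Map<String, Double> elements = new LinkedHashMap<>();

    MentalSpace(String name) { this.name = name; }

    MentalSpace with(String role, double value) {
        elements.put(role, value);
        return this;
    }
}

// Hypothetical generic space: roles the frame requires plus a rule used to run the blend.
class GenericSpace {
    final Set<String> requiredRoles;
    final Function<Map<String, double[]>, String> rule;

    GenericSpace(Set<String> requiredRoles, Function<Map<String, double[]>, String> rule) {
        this.requiredRoles = requiredRoles;
        this.rule = rule;
    }
}

class Blender {
    // Composition: pair elements that fill the same role in both inputs
    // (role identity stands in for a vital relation here).
    static Map<String, double[]> compose(MentalSpace a, MentalSpace b) {
        Map<String, double[]> pairs = new LinkedHashMap<>();
        for (Map.Entry<String, Double> e : a.elements.entrySet()) {
            Double other = b.elements.get(e.getKey());
            if (other != null) {
                pairs.put(e.getKey(), new double[] {e.getValue(), other});
            }
        }
        return pairs;
    }

    // Completion: the composed structure must match the pattern held in the generic space.
    static boolean complete(Map<String, double[]> pairs, GenericSpace generic) {
        return pairs.keySet().containsAll(generic.requiredRoles);
    }

    // Elaboration ("running the blend"): derive an emergent element found in neither input.
    static Optional<String> blend(MentalSpace a, MentalSpace b, GenericSpace generic) {
        Map<String, double[]> pairs = compose(a, b);
        return complete(pairs, generic) ? Optional.of(generic.rule.apply(pairs)) : Optional.empty();
    }

    public static void main(String[] args) {
        MentalSpace earlier = new MentalSpace("t0").with("rangeNm", 40).with("altitudeFt", 20000);
        MentalSpace later = new MentalSpace("t1").with("rangeNm", 32).with("altitudeFt", 20000);
        GenericSpace closingFrame = new GenericSpace(
                new HashSet<>(Collections.singletonList("rangeNm")),
                pairs -> pairs.get("rangeNm")[1] < pairs.get("rangeNm")[0] ? "closing" : "not closing");
        System.out.println(blend(earlier, later, closingFrame).orElse("no blend")); // closing
    }
}
```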

“She was so sexy, but he’d heard she was a real cannibal”. [9]

In the sentence above, the word "cannibal" is metaphorical and has nothing to do with its literal meaning, “An animal that eats the flesh of other animals of the same kind” [10]. When we read this sentence, we can figure out that the woman referred to is probably interested in the man’s money rather than his flesh, and is most likely using her beauty for this purpose. Disentangling such metaphors and creating a newly emergent structure is achieved by the elaboration operation.

We may run blending operations many times on the same input spaces. Often we cannot reach a useful blend after a single blend operation; we repeat it subconsciously many times until we find the best result.

“There is always extensive unconscious work in meaning construction, and blending is no different. We may take many parallel attempts to find suitable projections, with only the accepted ones appearing in the final network…. Input formation, projection, completion, and elaboration all go on at the same time, and a lot of conceptual scaffolding goes up that we never see in the final result. Brains always do a lot of work that gets thrown away.” [11]

Not all elements of the input spaces are projected into the blend space. This is called “selective projection”, and it is vital for keeping things simple. Suppose we are looking at a radar scope, following air contacts. In that case there are two input spaces: one for the aircraft’s values and one for the air-defense concept. The aircraft input space contains the aircraft’s properties, including kinematics, mission, nationality, type, and color. The other input space contains the air-defense concept elements. We first pay attention to the aircraft’s kinematics and other relevant factors. We never think about the color of the aircraft, even though it is an element of the input space. Determining the aircraft’s identity involves the other elements of the input space, but not the color. Therefore the blend space, which represents the identity of the aircraft, does not include the color of the aircraft unless the context requires it. The elements projected into the blend space are selected carefully based on the context in which they are being viewed.
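Below is a minimal sketch of selective projection. It assumes each context is associated with a hypothetical set of relevant roles. Only input elements whose roles are relevant in the current context reach the blend, so `color` is dropped for identification but kept in a context where it matters. The context names and role sets are illustrative only.

```java
import java.util.*;

// Hypothetical sketch of selective projection; not the ADL Simulation's code.
class SelectiveProjection {
    // Context -> roles that matter in that context (assumed values, for illustration).
    static final Map<String, Set<String>> RELEVANT = new HashMap<>();
    static {
        RELEVANT.put("identification", new HashSet<>(Arrays.asList(
                "speed", "altitude", "heading", "iff", "nationality", "type")));
        RELEVANT.put("visualRecognition", new HashSet<>(Arrays.asList("type", "color")));
    }

    // Projects only the context-relevant elements of an input space into the blend.
    static Map<String, String> project(Map<String, String> input, String context) {
        Set<String> keep = RELEVANT.getOrDefault(context, Collections.<String>emptySet());
        Map<String, String> blend = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : input.entrySet()) {
            if (keep.contains(e.getKey())) {
                blend.put(e.getKey(), e.getValue());
            }
        }
        return blend;
    }

    public static void main(String[] args) {
        Map<String, String> aircraft = new LinkedHashMap<>();
        aircraft.put("speed", "480 kt");
        aircraft.put("heading", "090");
        aircraft.put("type", "fighter");
        aircraft.put("color", "grey");
        System.out.println(project(aircraft, "identification")); // {speed=480 kt, heading=090, type=fighter}
    }
}
```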

Figure 3 is an example of the simplest integration network, involving two input spaces, a generic space, and a blend space. The newly emergent structure may serve as an input space for a further blend operation and be linked to another network. A mental space that has been used earlier in the network may also be reused in subsequent blend operations. This gives the model a coherent structure and the ability to explain experience in network form.

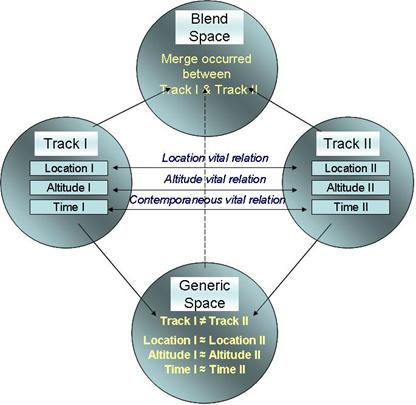

Figure 4.

Conceptual Integration Network

An integration network is the focal point of conceptual blending theory. This network consists of groups of mental spaces and constructs meaning in our minds by way of blending operations on those spaces. “An integration network is an array of mental spaces in which the processes of conceptual blending unfold”. [12] The network is constructed by finding mappings between elements in different spaces, projecting these relations from space to space, and finally creating an emergent structure that does not exist in any of the input spaces.

Finding the relations between spaces is the most important issue in constructing identifiable types of integration networks. At first glance, Figure 3 suggests that the blend space is the most important part of blending theory, which would make the blender its most important module. This is not completely true: the ability to find the relations between spaces is more important than modeling a blender. These relations are called vital relations in blending theory. They enable us to combine the two input spaces into one space, a process called compression. Turner and Fauconnier define two kinds of relations in blending theory [13]:

·

Inner-space relations: relations inside the blend space

·

Outer-space relations: vital relations between the input mental

spaces

The newly emergent structure is constructed in the blend space by compressing outer-space relations into inner-space relations. Turner and Fauconnier have listed the vital relations as follows [14]:

The vital relations listed above come mostly from linguistics. However, we can define our own vital relations for an application.

Conceptual Blending Theory defines four kinds of topology for its integration networks of mental spaces [15]:

·

Simplex Networks

·

Mirror Networks

·

Single-Scope Networks

·

Double-Scope Networks

The type of topology is primarily related to the

organizing frames. The similarity of the organizing frames in each input space

determines the type of the topology. Organizing frames may or may not be the

same for both input spaces. If they are not the same, clashes occur between

input spaces. One of the organizing frames or a hybrid of the two frames

determines the frame of the blend space.

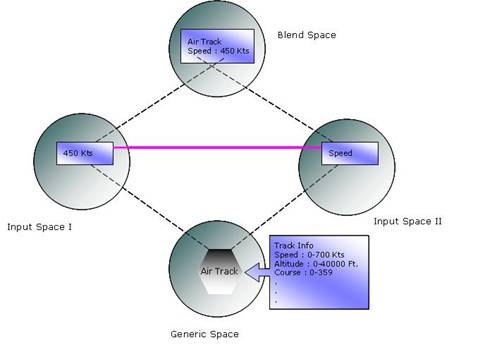

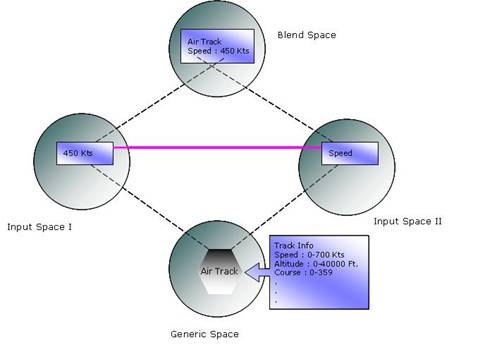

Simplex networks have an "organizing frame" in one input space and relevant data in the other input space. These networks are suited to variable-value relations. If we have a “track info” organizing frame in one input, the speed, heading, location, identity, and other variables are represented by the elements of that input space. The other input space holds a value for each element of the organizing frame. In Figure 5, input space I holds the data for the speed variable of input space II. This kind of network primarily uses role-value relations. Simplex networks essentially formalize first-order logic proofs as studied in artificial intelligence.

Figure 5.

Simplex Network (After [1])
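The role-value binding of a simplex network can be sketched as follows. The sketch assumes the organizing frame is a list of role names and the data input is a map of values. Both, and the class name `SimplexNetwork`, are illustrative only.

```java
import java.util.*;

// Hypothetical sketch of a simplex network; not the ADL Simulation's code.
class SimplexNetwork {
    // Input space II: the organizing frame ("track info"), i.e. roles without values.
    // Input space I: bare values keyed by role.
    // Blend: each frame role bound to its value; roles without data stay unbound.
    static Map<String, Double> bind(List<String> frameRoles, Map<String, Double> values) {
        Map<String, Double> blend = new LinkedHashMap<>();
        for (String role : frameRoles) {
            Double value = values.get(role);
            if (value != null) {
                blend.put(role, value);
            }
        }
        return blend;
    }

    public static void main(String[] args) {
        List<String> trackInfo = Arrays.asList("speed", "heading", "altitude");
        Map<String, Double> data = new HashMap<>();
        data.put("speed", 420.0);
        data.put("heading", 270.0);
        System.out.println(bind(trackInfo, data)); // {speed=420.0, heading=270.0}
    }
}
```

The `altitude` role stays unbound because no value was supplied. A missing value is left empty rather than guessed.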

In mirror networks, all of the mental spaces (the input spaces, the generic space, and the blend space) share the same organizing frame. Since both input spaces share the same organizing frame, finding the relations between the inputs is straightforward. Therefore there is no clash between mental spaces at the level of the organizing frame. However, there may be clashes between subframes of the organizing frames [16].

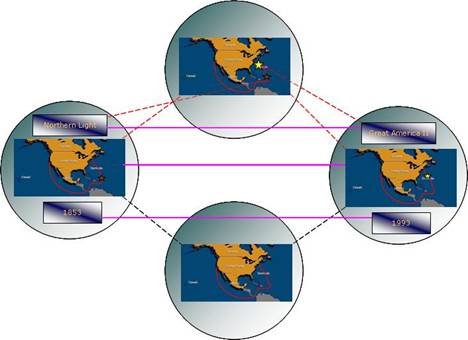

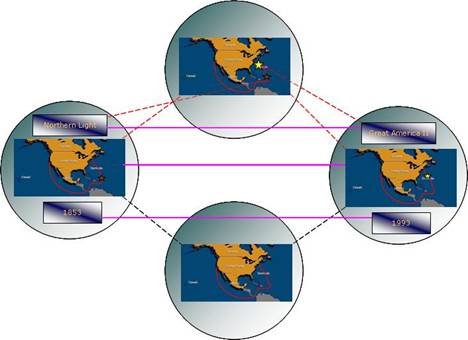

Turner and Fauconnier explain mirror networks by comparing the voyage times of two sailing vessels from San Francisco to Boston. In 1853 the clipper ship Northern Light made this voyage in 76 days 8 hours, a record time until the modern catamaran Great America II covered the distance in less time in 1993. A few days before the catamaran reached Boston, observers were able to say that Great America II was 4.5 days ahead of Northern Light [17].

This sentence describes two boats racing each other, one of them 4.5 days ahead of the other. However, the two boats are not competing with each other; they do not even exist in the same time period. When we read this sentence, we understand that Northern Light reached the analogous position in 1853, but 4.5 days later in its voyage than Great America II did. One input to this blend operation is Northern Light sailing in 1853, and the other is Great America II sailing in 1993. The organizing frame of the blend operation is sailing a boat from San Francisco to Boston. Only the boats, time periods, and positions on the course are projected into the blend space; weather conditions and the purpose of the voyage are not. The Time vital relation enables us to associate these two events in the same time domain by compressing time. Here, compression means evaluating two events 140 years apart in the same space and seeing them as if they were happening at the same time.
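Time compression can be illustrated by mapping each voyage onto a shared axis of elapsed time since departure. In this sketch the class names are hypothetical. The departure and checkpoint dates are invented to keep the arithmetic simple; they are not the historical voyage records.

```java
import java.time.Duration;
import java.time.LocalDateTime;

// Hypothetical sketch of time compression in a mirror network; not the ADL Simulation's code.
class Voyage {
    final String boat;
    final LocalDateTime departure;

    Voyage(String boat, LocalDateTime departure) {
        this.boat = boat;
        this.departure = departure;
    }

    // Compression: an absolute timestamp becomes elapsed days on the shared voyage axis.
    double elapsedDays(LocalDateTime reached) {
        return Duration.between(departure, reached).toMinutes() / (24.0 * 60.0);
    }
}

class MirrorRace {
    // How many days the second boat is ahead of the first at the same checkpoint
    // (positive = second boat reached it sooner in its own voyage).
    static double leadInDays(Voyage first, LocalDateTime firstAtCheckpoint,
                             Voyage second, LocalDateTime secondAtCheckpoint) {
        return first.elapsedDays(firstAtCheckpoint) - second.elapsedDays(secondAtCheckpoint);
    }

    public static void main(String[] args) {
        // Invented dates for illustration only; not the historical records.
        Voyage northernLight = new Voyage("Northern Light", LocalDateTime.of(1853, 1, 1, 0, 0));
        Voyage greatAmerica = new Voyage("Great America II", LocalDateTime.of(1993, 1, 1, 0, 0));
        double lead = leadInDays(northernLight, LocalDateTime.of(1853, 3, 1, 0, 0),
                                 greatAmerica, LocalDateTime.of(1993, 2, 24, 12, 0));
        System.out.println(lead); // 4.5
    }
}
```

The 140-year gap disappears once both timestamps are expressed as elapsed days: 59 days for one boat versus 54.5 days for the other.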

There is no clash between the organizing frames of these two events. There are, however, some clashes in the subframes: one input has a nineteenth-century cargo sailing ship, and the other has a twentieth-century racing catamaran. These subframes clash with each other, but the clashing properties are heuristically ignored in the blend [18].

Figure 6.

Mirror Network (After [1])

Besides simplex and mirror networks, blending theory also has single-scope and double-scope networks. In single-scope networks the two input spaces have different organizing frames, and only one of them shows up in the blend space. In double-scope networks the input spaces again have two different organizing frames, but this time a combination of these frames shows up in the blend space.

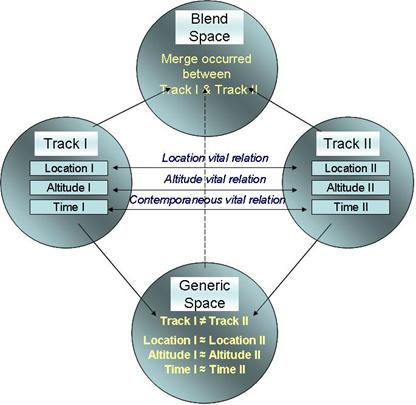

The ADL Simulation uses only simplex and mirror networks. Simplex networks are used to assign values to the variables of air tracks. At the reactive level, each reactive agent receives its information and triggers another action at the predictor track-agent level.

Mirror networks are used in various places in the ADL Simulation. They are used in the "regional" space to detect coordinated activities between tracks. In a coordinated attack scenario, two air tracks turn inbound at the same time. The organizing frames of both input spaces are the same: attacking a ship. We can compress these two input spaces with the place and time vital relations, recognize a coordinated attack profile, and then project this conclusion into the blend space.
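Below is a hypothetical sketch of this regional mirror blend. Each input space holds one track's inbound-turn event. The time relation compares when the turns happened. The place relation compares the tracks' ranges to own ship, so attacks from different bearings can still count as coordinated. The class names and both thresholds are assumptions for illustration.

```java
// Hypothetical sketch of the regional mirror blend; not the ADL Simulation's code.
class InboundTurn {
    final String trackId;
    final double timeSec; // time at which the track turned inbound
    final double xNm;     // position at the turn, relative to own ship
    final double yNm;

    InboundTurn(String trackId, double timeSec, double xNm, double yNm) {
        this.trackId = trackId;
        this.timeSec = timeSec;
        this.xNm = xNm;
        this.yNm = yNm;
    }

    double rangeNm() {
        return Math.hypot(xNm, yNm);
    }
}

class CoordinationDetector {
    // Assumed thresholds, for illustration only.
    static final double MAX_TIME_GAP_SEC = 30.0;
    static final double MAX_RANGE_DIFF_NM = 5.0;

    // Compresses two inbound-turn input spaces with the time and place relations.
    static boolean coordinated(InboundTurn a, InboundTurn b) {
        if (a.trackId.equals(b.trackId)) {
            return false; // a track cannot coordinate with itself
        }
        boolean sameTime = Math.abs(a.timeSec - b.timeSec) <= MAX_TIME_GAP_SEC;
        boolean samePlace = Math.abs(a.rangeNm() - b.rangeNm()) <= MAX_RANGE_DIFF_NM;
        return sameTime && samePlace;
    }

    public static void main(String[] args) {
        InboundTurn north = new InboundTurn("T1", 100, 0, 30);
        InboundTurn east = new InboundTurn("T2", 110, 32, 0);
        System.out.println(coordinated(north, east) ? "coordinated attack" : "independent tracks");
    }
}
```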

Mirror networks are also used in the ADL Simulation at the track-agent level. Consider an air track that is changing its course. We can detect the change in course by comparing two heading values at successive times. The two input spaces hold two different heading values at two different times. The organizing frames are the same, but the time elements of the input spaces differ.
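This track-agent comparison has one detail that is easy to get wrong: headings wrap around at 360 degrees. A turn from 350 to 010 is therefore a 20-degree turn, not a 340-degree one. A minimal sketch follows, with a hypothetical noise threshold.

```java
// Hypothetical sketch of the track-agent mirror blend: both inputs share the frame
// "track heading" and differ only in their time element. Not the ADL Simulation's code.
class CourseChange {
    // Assumed threshold in degrees below which a difference is treated as sensor noise.
    static final double THRESHOLD_DEG = 5.0;

    // Signed change from h1 (earlier) to h2 (later) in degrees, normalized to (-180, 180];
    // positive means a clockwise (starboard) turn.
    static double headingChange(double h1, double h2) {
        double d = (h2 - h1) % 360.0;
        if (d > 180.0) {
            d -= 360.0;
        }
        if (d <= -180.0) {
            d += 360.0;
        }
        return d;
    }

    static boolean isTurning(double h1, double h2) {
        return Math.abs(headingChange(h1, h2)) > THRESHOLD_DEG;
    }

    public static void main(String[] args) {
        System.out.println(headingChange(350, 10)); // 20.0
        System.out.println(isTurning(90, 92));      // false
    }
}
```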

F. Alternatives to Blending Theory and the CMAS Library for the ADL Simulation

Using the CMAS library is not the only way to implement coordination and communication in the ADL Simulation. An alternative is for agents to pass arguments to each other directly, with methods defined for each communication link. This may work well in an environment with few agents. In a mesh topology, in which each of n agents has a dedicated point-to-point link to every other agent, n(n-1)/2 links are needed. For a limited number of agents this may be acceptable, but in the ADL Simulation’s layered structure there are more than 15 agents for each track. In a multithreaded environment with several tracks, the number of dedicated links grows quadratically with the total number of agents. Avoiding this many links, and the coordination they require, is a considerable advantage of using the CMAS library. Therefore we used the CMAS library for coordination and communication between agents instead of a mesh topology with dedicated links between each pair of agents.
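The growth of the mesh can be checked with a few lines of Java. The figure of 15 agents per track comes from the text; the track counts in `main` are arbitrary.

```java
// Number of dedicated point-to-point links in a full mesh of n agents: n(n-1)/2.
class MeshLinks {
    static long links(long n) {
        return n * (n - 1) / 2;
    }

    public static void main(String[] args) {
        // Illustration only: 15 agents per track is the lower bound quoted in the text.
        for (int tracks = 1; tracks <= 5; tracks++) {
            long agents = 15L * tracks;
            System.out.println(tracks + " track(s), " + agents + " agents: " + links(agents) + " links");
        }
    }
}
```

A single track of 15 agents needs 105 links. Five tracks (75 agents) already need 2,775, which illustrates why dedicated links do not scale.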


III. RELATED WORK IN NAVAL AIR-THREAT ASSESSMENT

We reviewed a variety of previous work related to naval air defense, cognitive modeling, threat assessment, and how the human brain works. Many studies were conducted after the USS Vincennes and USS Stark incidents to understand the underlying causes and the factors affecting decision-making under stress. Most studies focused on increasing the accuracy of decisions made under stress and the performance of watchstanders in the Combat Information Center (CIC) on board ships. There has been little study of cognitive models of the human contribution to decision-making. The few studies of how humans perform identification and threat assessment suggest that humans take inputs from the environment, compare them with predefined templates, and then make a decision.

Richard Amori developed a plan recognition system for airborne threats called Plan Recognition for Airborne Threats (PRAT) [19]. PRAT performs three-dimensional spatial and temporal reasoning, combining a high volume of data with predefined patterns via two different kinds of agents. The PRAT system used the Falklands War between Argentina and Great Britain in its scenarios.

The most important module of the system is the Plan Recognizer, an intelligent subsystem. This module reasons from physical data and changes to this data, known air tactics and behaviors, and likely primary and secondary goals. It has two subcomponents: the Individual-Agent Manager and the Sets-of-Agents Manager. The former analyzes the data associated with each track, while the latter analyzes coordinated activity between air threats. The two subcomponents use each other’s inferences so that they can provide mutual support for more accurate reasoning. Each of them uses "rolling" data structures that evolve and are updated continuously. Each data structure has forward and backward components. A backward component is supplied with incoming data from the environment, while a forward component provides the reasoning about the track or tracks. “A backward component permits reasoning which is historical in nature, and the forward component permits reasoning which is hypothetical in nature”. [19]
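The backward/forward idea can be sketched as a bounded history with an extrapolating predictor. This illustrates the description above; it is not PRAT's implementation. Linear extrapolation, the window size, and the class names are assumptions.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical "rolling" track record in the spirit of PRAT's description; not PRAT code.
class RollingTrack {
    static final class Report {
        final double t; // time (s)
        final double x; // position (nm)
        final double y;

        Report(double t, double x, double y) {
            this.t = t;
            this.x = x;
            this.y = y;
        }
    }

    private final Deque<Report> history = new ArrayDeque<>();
    private final int capacity;

    RollingTrack(int capacity) {
        this.capacity = capacity;
    }

    // Backward component: incoming data from the environment; the oldest report rolls off.
    void update(Report r) {
        if (history.size() == capacity) {
            history.removeFirst();
        }
        history.addLast(r);
    }

    int size() {
        return history.size();
    }

    // Forward component: hypothetical position at time t, linearly extrapolated from the
    // oldest and newest reports in the window.
    double[] predict(double t) {
        if (history.size() < 2) {
            throw new IllegalStateException("need at least two reports");
        }
        Report a = history.peekFirst();
        Report b = history.peekLast();
        double dt = b.t - a.t;
        if (dt <= 0) {
            throw new IllegalStateException("reports must span a positive time interval");
        }
        double vx = (b.x - a.x) / dt;
        double vy = (b.y - a.y) / dt;
        return new double[] {b.x + vx * (t - b.t), b.y + vy * (t - b.t)};
    }
}
```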

In naval air warfare, a large amount of data needs to be

processed. In high-threat situations this becomes even more severe. The PRAT

system addresses this problem by dividing responsibilities into sets of agents.

Each module is also subdivided into several different mission reasoners.

The PRAT system is similar to the ADL Simulation in two ways. First, both use a layered agent architecture rather than a single type of agent. Second, both use some common factors, such as kinematic values, to determine track intention and identity. One difference is that the PRAT system uses only kinematic values, while the ADL Simulation uses additional factors. The ADL Simulation also uses special CMAS data structures. PRAT, by contrast, keeps data in a data structure and traverses it each time to find a match or to reason about existing history data.

Another investigation examined the cognitive aspects of naval air-threat assessment. Experienced U.S. Navy air-defense personnel took part in this research. The collected data revealed that participants assigned threat and priority levels to air tracks using a set of factors.

Factors are elements of data and information that are

used to assess air contacts. Traditionally, they are derived from kinematics,

tactical, and other data. Examples of such data include course, speed, IFF

modes, and type of radar emitter. [20]

The major factors were electromagnetic signal emissions, course, speed, altitude, point of origin, Identification Friend or Foe (IFF) values, intelligence, airlane, and distance from the detector. Participants used up to 22 factors, and each participant used different but overlapping factors. Participants used more factors when identifying tracks posing a greater threat than tracks posing a lesser threat. Participants applied the factors in a certain order during threat assessment, and each factor had a priority.

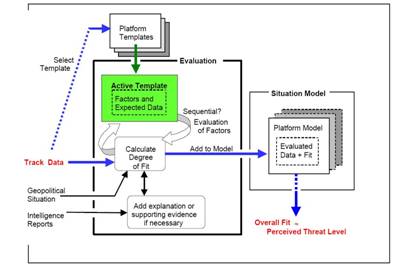

Each factor has an expected range of values. Research

showed that aircraft that matched these expectations were assigned lower threat

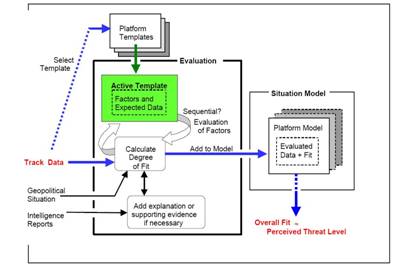

levels than aircraft that did not. Figure 7

shows a threat assessment model derived from the research.

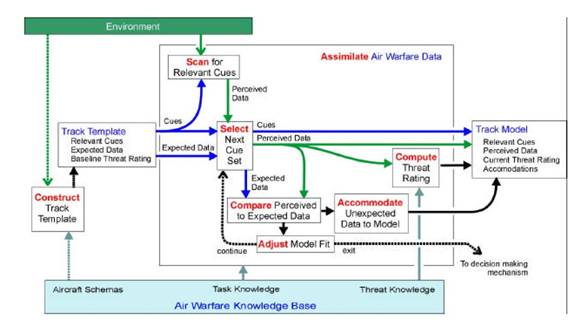

Figure 7.

Cognitively-Based Model of Threat Assessment (From [20])
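Below is a minimal sketch of the factor-based idea. It assumes each factor is a named numeric range and that factors are checked in priority order. The factor names, ranges, and the LOW/MEDIUM/HIGH mapping are hypothetical. The study reports only that tracks matching expectations received lower threat levels.

```java
import java.util.*;

// Hypothetical sketch of factor-based threat assessment; not the model from the study.
class Factor {
    final String name;
    final double min;
    final double max;

    Factor(String name, double min, double max) {
        this.name = name;
        this.min = min;
        this.max = max;
    }

    boolean isExpected(double value) {
        return value >= min && value <= max;
    }
}

class FactorAssessment {
    // Checks factors in priority order and returns the names of those whose observed value
    // falls outside the expected range; factors with no observation are skipped.
    static List<String> unexpected(List<Factor> byPriority, Map<String, Double> observed) {
        List<String> result = new ArrayList<>();
        for (Factor f : byPriority) {
            Double value = observed.get(f.name);
            if (value != null && !f.isExpected(value)) {
                result.add(f.name);
            }
        }
        return result;
    }

    // Assumed mapping: the more expectations a track violates, the higher its threat level.
    static String threatLevel(int violations) {
        if (violations == 0) {
            return "LOW";
        }
        return violations == 1 ? "MEDIUM" : "HIGH";
    }

    public static void main(String[] args) {
        List<Factor> factors = Arrays.asList(
                new Factor("speedKt", 250, 500),          // assumed airliner-like speed band
                new Factor("altitudeFt", 20000, 40000));  // assumed airlane altitude band
        Map<String, Double> track = new HashMap<>();
        track.put("speedKt", 550.0);
        track.put("altitudeFt", 500.0);
        List<String> misses = unexpected(factors, track);
        System.out.println(misses + " -> " + threatLevel(misses.size())); // [speedKt, altitudeFt] -> HIGH
    }
}
```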

The ADL Simulation uses the factors found in this

research in its reactive agents in the threat assessment process. The ADL

Simulation improved on this model by adding an integration network and the

ability to evaluate coordinated activities between two or more aircraft.

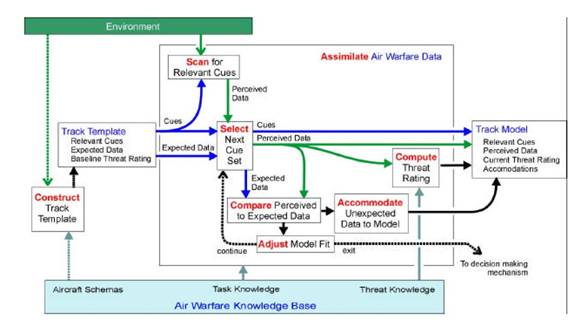

Figure 8.

Threat Assessment Model (From [22])

Another study refers to the factors of the previous model as cues. Each cue has a weight, and if the perceived data is unexpected, the value of the active model is reduced by the weight of the cue. Other studies discussed in that paper include Tactical Decision Making Under Stress (TADMUS), the Decision Support System (DSS), and the Basis For Assessment (BFA). These studies concern the development of more efficient tactical displays and human interfaces for air-defense personnel. They showed that presenting the most important data to the user more effectively increases threat-assessment accuracy.
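The cue-weighting rule described above can be written as a short loop. Each cue whose perceived value falls outside its expected range subtracts its weight from the active model's value. The cue names, ranges, weights, and the starting value of 1.0 are assumptions.

```java
import java.util.*;

// Hypothetical sketch of the cue-weighting rule; cue values and weights are invented.
class Cue {
    final String name;
    final double weight;
    final double min;
    final double max;

    Cue(String name, double weight, double min, double max) {
        this.name = name;
        this.weight = weight;
        this.min = min;
        this.max = max;
    }
}

class ActiveModel {
    // Starts from the active model's value and subtracts the weight of every cue whose
    // perceived data is unexpected (outside its range); unobserved cues are ignored.
    static double evaluate(double initialValue, List<Cue> cues, Map<String, Double> perceived) {
        double value = initialValue;
        for (Cue cue : cues) {
            Double v = perceived.get(cue.name);
            if (v != null && (v < cue.min || v > cue.max)) {
                value -= cue.weight;
            }
        }
        return value;
    }

    public static void main(String[] args) {
        // Assumed template for a slow, low-flying patrol aircraft.
        List<Cue> template = Arrays.asList(
                new Cue("speedKt", 0.3, 0, 300),
                new Cue("altitudeFt", 0.2, 0, 10000));
        Map<String, Double> perceived = new HashMap<>();
        perceived.put("speedKt", 450.0);
        perceived.put("altitudeFt", 5000.0);
        System.out.println(evaluate(1.0, template, perceived));
    }
}
```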

The following results were found in the research:

·

Users created templates to define which cues will be evaluated and the permissible range of data for each cue.

·

Cues were evaluated in a fairly consistent order, weighted, and processed in sets reflecting their weights.

·

Air-defense threat evaluators did not rely on all data, only the data associated with cues in their active template; did not change templates in the face of conflicting data; and were influenced by conflicting data in specific cues rather than in the overall pattern.

·

Perceived threat level was related to the degree of fit of observed data to expected data ranges in the evaluator’s active template, and was not related to the number of cues that were evaluated during threat assessment [23].

E. Simulation of an Aegis Cruiser Combat Information Center

Other previous work reports on a simulation that models the CIC of an Aegis cruiser for air defense. The research mainly explores the team’s performance in high-stress situations and tries to understand the interpersonal factors that affect the overall performance of the CIC team and watchstanders. Air-defense contact identification, threat assessment, and classification were modeled but were not the primary focus of the research. An artificial neuron is used to model the cognitive decision-making process.

Figure 9.

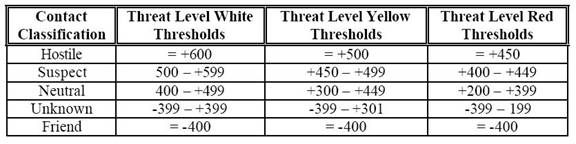

Contact Classification Artificial Neuron (From [24])

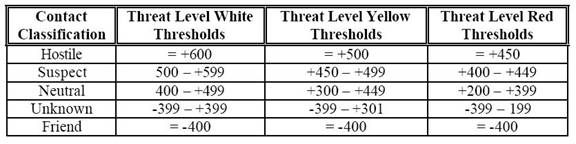

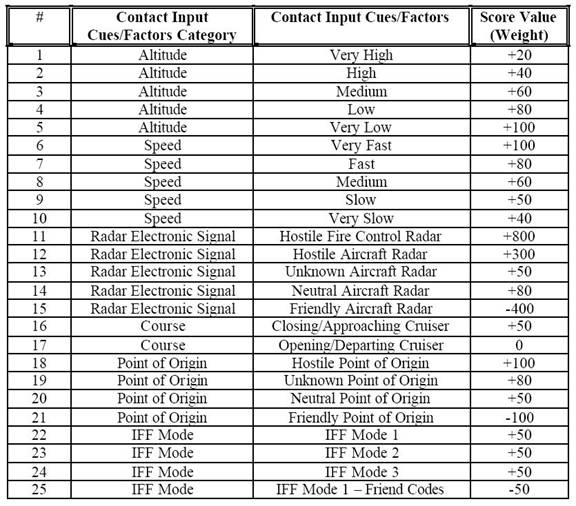

Each contact category has a threshold value for classification and threat level. The threat level may be White, Yellow, or Red. Each input value has a weight, and the weighted sum is compared to a threshold. The scoring values and thresholds were constructed in consultation with air-defense personnel from the ATRC detachment in San Diego, CA. The threshold values are displayed below:

Table 1.

Default Classification Threshold Values (From [24])

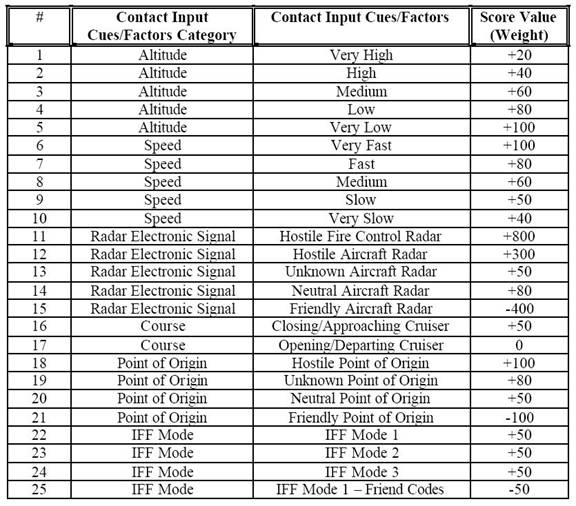

Table 2.

Scoring (Weighted) Values for Various Input Cues (From [24])
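The artificial neuron described above amounts to a weighted sum followed by threshold tests. In this sketch the weights and thresholds are placeholders. The actual values are those in Tables 1 and 2, which are not reproduced here.

```java
// Hypothetical sketch of a threshold neuron for contact threat level; weights and
// thresholds are placeholders, not the values from Tables 1 and 2.
class ContactNeuron {
    enum ThreatLevel { WHITE, YELLOW, RED }

    // Weighted sum of input cue scores.
    static double weightedSum(double[] inputs, double[] weights) {
        if (inputs.length != weights.length) {
            throw new IllegalArgumentException("inputs and weights must have the same length");
        }
        double sum = 0.0;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sum;
    }

    // Compares the weighted sum with the category thresholds (red takes precedence).
    static ThreatLevel classify(double sum, double yellowThreshold, double redThreshold) {
        if (sum >= redThreshold) {
            return ThreatLevel.RED;
        }
        if (sum >= yellowThreshold) {
            return ThreatLevel.YELLOW;
        }
        return ThreatLevel.WHITE;
    }

    public static void main(String[] args) {
        double[] cues = {1, 0, 1};    // e.g. hypothetical flags: no IFF, in airlane, closing
        double[] weights = {3, 2, 4}; // placeholder scoring weights
        System.out.println(classify(weightedSum(cues, weights), 5, 10)); // YELLOW
    }
}
```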

Another relevant study discusses data-fusion techniques,

collecting data from multiple sources and combining them to achieve more

accurate results than could be achieved from a single sensor alone. Data fusion

has military applications (e.g. finding track identity and establishing a

tactical picture) and non-military applications (e.g. robotics, automated

control of smart buildings, weather monitoring, and medical applications). Air-threat

assessment involves data fusion since air-defense personnel have to combine

information from multiple sensors and evaluate them.

To establish target identity, a transformation must be

made between observed target attributes and a labeled identity. Methods for

identity estimation involve pattern recognition techniques based on clustering

algorithms, neural networks, or decision-based methods such as Bayesian

inference, Dempster-Shafer’s method, or weighted decision techniques. Finally,

the interpretation of the target’s intent entails automated reasoning using

implicit information, via knowledge-based methods such as rule-based reasoning

systems [25].
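Of the identity-estimation methods listed, Bayesian inference is the simplest to show. Below is a minimal sketch. It assumes a discrete set of identity labels and invented likelihoods for a single observed attribute; the class name is hypothetical.

```java
import java.util.*;

// Hypothetical sketch of Bayesian identity estimation; probabilities are invented.
class BayesIdentity {
    // posterior(label) = prior(label) * likelihood(observation | label) / evidence
    static Map<String, Double> update(Map<String, Double> prior, Map<String, Double> likelihood) {
        Map<String, Double> posterior = new LinkedHashMap<>();
        double evidence = 0.0;
        for (Map.Entry<String, Double> e : prior.entrySet()) {
            double p = e.getValue() * likelihood.getOrDefault(e.getKey(), 0.0);
            posterior.put(e.getKey(), p);
            evidence += p;
        }
        if (evidence == 0.0) {
            throw new IllegalArgumentException("observation is impossible under every hypothesis");
        }
        for (Map.Entry<String, Double> e : posterior.entrySet()) {
            e.setValue(e.getValue() / evidence); // normalize so the posterior sums to 1
        }
        return posterior;
    }

    public static void main(String[] args) {
        Map<String, Double> prior = new LinkedHashMap<>();
        prior.put("hostile", 0.2);
        prior.put("neutral", 0.5);
        prior.put("friend", 0.3);
        // Invented likelihoods of observing "no IFF response" under each identity.
        Map<String, Double> noIffResponse = new HashMap<>();
        noIffResponse.put("hostile", 0.9);
        noIffResponse.put("neutral", 0.3);
        noIffResponse.put("friend", 0.05);
        System.out.println(update(prior, noIffResponse));
    }
}
```

Each new observation can be fed in by using the previous posterior as the next prior. This is a textbook Bayesian update, shown here only to make the named method concrete.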

Fusion in the ADL Simulation uses a combination of a neural network, in the form of an integration network, and an evidence-weighting algorithm. The integration network comes from Conceptual Blending Theory. The models that reside in the nodes of this network are weighted using Bayesian inference.

This study also suggests Blackboard Knowledge-Based Systems (KBS) as a good data-fusion method. These systems partition the problem into subproblems and use constrained interaction between the solutions of the subproblems to solve the whole problem. This is analogous to human experts gathering in front of a blackboard and brainstorming to reach a solution. A KBS must have three elements:

a. Knowledge representation schemas

b. An automated inference/evaluation process

c. Control schemas

The first and third requirements are provided by the generic space, while the second is fulfilled by the blender in Conceptual Blending Theory. Thus the method used in the ADL Simulation (Conceptual Blending Theory) fulfills the requirements of a KBS. At the same time, the ADL Simulation is a cognitive model of how humans accomplish these functions.

Another study of multi-sensor data fusion distinguishes three kinds of fusion: data-level, feature-level, and decision-level. In data-level fusion, raw data from each sensor is combined in a centralized manner. This approach is claimed to be the most accurate of the three. Its drawback is that all sensor values must be put in the same units. If the sensors are distributed in the real world, all the information from every sensor must be transmitted to the center, which requires a large bandwidth. In feature-level fusion, features are extracted at each sensor and transmitted to the center. This reduces the communication requirements, but the result is less accurate because information is lost when features are generated from raw data. Finally, in decision-level fusion, each sensor sends a decision about its input, and these decisions are fused. This option is the least accurate of the three because the sensor observations are compressed into decisions, but it requires the least bandwidth.
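Decision-level fusion can be sketched as a weighted vote over the sensors' individual decisions. The reliability weights and the alphabetical tie-break are assumptions that keep the example deterministic, and the class name is hypothetical.

```java
import java.util.*;

// Hypothetical sketch of decision-level fusion: each sensor sends only its own identity
// decision, and the center fuses the decisions by reliability-weighted voting.
class DecisionFusion {
    static String fuse(String[] decisions, double[] reliabilities) {
        if (decisions.length == 0 || decisions.length != reliabilities.length) {
            throw new IllegalArgumentException("need one reliability per decision");
        }
        // TreeMap iterates labels alphabetically, so the strict '>' below breaks ties
        // in favor of the alphabetically first label.
        Map<String, Double> votes = new TreeMap<>();
        for (int i = 0; i < decisions.length; i++) {
            votes.merge(decisions[i], reliabilities[i], Double::sum);
        }
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> e : votes.entrySet()) {
            if (e.getValue() > bestScore) {
                best = e.getKey();
                bestScore = e.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        String[] decisions = {"hostile", "neutral", "hostile"};
        double[] reliabilities = {0.5, 0.9, 0.5};
        System.out.println(fuse(decisions, reliabilities)); // hostile
    }
}
```

Only the labels cross the network, which is why this level needs the least bandwidth. It is also why the raw evidence behind each decision is unavailable to the fusion step.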

In the ADL Simulation, predictor track agents are like local sensors focused on individual aircraft tracks. They use feature-level fusion, in which the sensors are represented by reactive agents. Predictor agents thus infer the identity of the aircraft from the features sent by the reactive agents. At the same time, regional agents identify coordinated activities between aircraft and collect data from track agents, performing something like decision-level fusion. Thus, different levels of data fusion are used in the ADL Simulation.

In summary, the ADL Simulation is unique for the

following reasons:

·

It uses Conceptual Blending Theory to imitate a human brain.

·

It uses a Connector-Based Multi-Agent System to create an integration network.

·

The ADL Simulation allows a user to set up an arbitrary

geographical area to test.

·

It is structured with a layered design.

·

The ADL Simulation can use both analog and digital approaches to inference

to permit studying the precision losses that come with digitization.

·

Its results are stored in an XML file, which makes the data easy to study.

·

It allows a user to follow the decision-making process step by step and to see the reasons for decisions. A user may see all decision-making processes by sending queries to a track.

The ADL Simulation is written in the Java programming language. It was developed using the JBuilder 9 Application Development Environment, and Java Development Kit 1.4.1_02 was used for the implementation. The program was run on a 2.4 GHz Pentium 4 machine with 512 megabytes (MB) of RAM. The requirements to run the program are as follows:

·

Pentium 3 or equivalent processor, or higher

·

Minimum 512 MB of RAM

·

Java Development Kit 1.4.1 or higher

·

Screen display of 1280x1024 pixels or higher

The program is multi-threaded: more than 100 threads run in a five-track scenario. Therefore, a fast processor and a large amount of RAM are required for the program to run smoothly. XML files are used to store data-logging information, and the SPY XML Editor was used to monitor this information and the XSLT transformations.
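As an illustration of XML data logging, the following Java sketch serializes one track decision with the standard JAXP DOM and transformation APIs. The element and attribute names (log, decision, track, identity) and the class XmlLogSketch are assumptions; the thesis does not give its log schema.

```java
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Hypothetical sketch: serialize one track decision as an XML log fragment.
// The element and attribute names are assumptions, not the ADL log format.
class XmlLogSketch {
    static String logDecision(String trackId, String identity, String reason) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
        Element root = doc.createElement("log");
        doc.appendChild(root);

        Element entry = doc.createElement("decision");
        entry.setAttribute("track", trackId);
        entry.setAttribute("identity", identity);
        entry.setTextContent(reason);   // the reason recorded for the decision
        root.appendChild(entry);

        // An identity transformer (no stylesheet) just writes the DOM out as text.
        Transformer transformer = TransformerFactory.newInstance().newTransformer();
        transformer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
        StringWriter out = new StringWriter();
        transformer.transform(new DOMSource(doc), new StreamResult(out));
        return out.toString();
    }
}
```

The same Transformer class can also apply an XSLT stylesheet to such a log when it is created with TransformerFactory.newTransformer(Source).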

A multi-agent system (MAS) is a computing model made of entities that communicate with each other and act in an environment [2]. Agents are autonomous software elements operating in that environment. A multi-agent system has six components: an environment (E), which is the space where agents operate; the objects (O) in the environment; the actors (A); the relations (R) between actors and the environment and among agents; the operations (Ops) executed by actors; and the laws of the environment.
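Compactly, the six components listed above can be written as a tuple. The symbol Laws for the laws of the environment is our own label, since the text names no symbol for it.

$$\mathit{MAS} = \langle E,\ O,\ A,\ R,\ \mathit{Ops},\ \mathit{Laws} \rangle$$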

Agents in a multi-agent system receive input from the environment, process this input, and produce an output, which they then release back into the environment. This kind of architecture can be summarized as sense-process-act.
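The sense-process-act cycle can be expressed as a small Java skeleton. This is a sketch under assumed names (Environment, SpaAgent, ReportAgent), not the ADL Simulation's class hierarchy; the environment is reduced to an inbox of observations and an outbox of results.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical shared environment: an inbox of observations and an outbox of results.
class Environment {
    final List<String> inbox = new ArrayList<>();
    final List<String> outbox = new ArrayList<>();
}

// Minimal sense-process-act skeleton (an illustrative sketch, not the ADL classes).
abstract class SpaAgent {
    abstract String sense(Environment env);    // read input from the environment
    abstract String process(String input);     // turn the input into an output

    void act(String output, Environment env) { // release the output back to the environment
        env.outbox.add(output);
    }

    final void step(Environment env) {
        act(process(sense(env)), env);
    }
}

// Example agent: consumes the oldest observation and reports it.
class ReportAgent extends SpaAgent {
    @Override
    String sense(Environment env) {
        return env.inbox.isEmpty() ? null : env.inbox.remove(0);
    }

    @Override
    String process(String input) {
        return input == null ? "NO CONTACT" : "CONTACT " + input;
    }
}
```

Keeping step() final fixes the order of the three phases, while subclasses supply only the sensing and processing behavior.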

According to the Integrated Asymmetric Goal Organization (IAGO) team at NPS, there are three kinds of agents: reactive, cognitive, and composite. The actions taken by reactive agents rely only on the current input data; these agents use neither memory nor experience, so they have no learning capability. They are suited to basic implementations such as thermostats or alarms.
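A reactive agent such as the thermostat mentioned above can be sketched in a few lines of Java. The class name and the setpoint rule are illustrative assumptions; the point is that the output depends only on the current reading and no state is updated between calls.

```java
// Reactive agent sketch: the action depends only on the current input.
// The setpoint is fixed configuration; the agent keeps no memory of past inputs.
class ThermostatAgent {
    private final double setpointCelsius;

    ThermostatAgent(double setpointCelsius) {
        this.setpointCelsius = setpointCelsius;
    }

    // Maps the current reading directly to an action (a condition-action rule).
    String react(double currentCelsius) {
        return currentCelsius < setpointCelsius ? "HEATER_ON" : "HEATER_OFF";
    }
}
```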

Cognitive agents maintain state information and knowledge, which permits them to act on the memories and experience gained so far. Composite agents are composed of both reactive and cognitive agents, typically arranged in a hierarchy. Such agents communicate with the inner environment of the host agent as well as with the outer environment. The inner agents maintain an insight model and internal states for the host agent.
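The difference between reactive, cognitive, and composite agents can be sketched in Java as follows. All class names and the altitude rules are hypothetical: AltitudeSensor is reactive (current input only), DescentTracker is cognitive (it keeps a history of past inputs), and CompositeTrackAgent is a host that combines both inner agents in a simple two-level hierarchy.

```java
import java.util.ArrayList;
import java.util.List;

// Common interface for the hypothetical agents in this sketch.
interface SimpleAgent {
    String handle(double input);
}

// Reactive inner agent: classifies the current input only.
class AltitudeSensor implements SimpleAgent {
    public String handle(double altitudeFeet) {
        return altitudeFeet < 1000 ? "LOW" : "HIGH";
    }
}

// Cognitive inner agent: keeps a memory of past inputs and uses it when deciding.
class DescentTracker implements SimpleAgent {
    private final List<Double> history = new ArrayList<>();

    public String handle(double altitudeFeet) {
        history.add(altitudeFeet);
        int n = history.size();
        // The decision depends on experience: compare with the previous observation.
        if (n >= 2 && history.get(n - 1) < history.get(n - 2)) {
            return "DESCENDING";
        }
        return "STEADY_OR_CLIMBING";
    }
}

// Composite agent: a host whose inner agents (reactive and cognitive) form a hierarchy.
class CompositeTrackAgent implements SimpleAgent {
    private final SimpleAgent reactive = new AltitudeSensor();
    private final SimpleAgent cognitive = new DescentTracker();

    public String handle(double altitudeFeet) {
        // The host combines its inner agents' results into its own assessment.
        return reactive.handle(altitudeFeet) + "/" + cognitive.handle(altitudeFeet);
    }
}
```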

An alternative taxonomy gives four kinds of agents: simple reflex, environment-tracking, goal-based, and utility-based. Simple reflex agents correspond to reactive agents. Agents that keep track of the environment maintain some state information but are not fully cognitive. Goal-based agents work toward particular goals. Utility-based agents additionally try to maximize how well off ("happy") the agent is on the way to the goal: whereas a goal-based agent simply follows a path that reaches the goal, a utility-based agent chooses the most effective path.
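The distinction between goal-based and utility-based agents can be illustrated with a path choice. In this hypothetical Java sketch, the goal-based chooser accepts the first path that reaches the goal, while the utility-based chooser ranks goal-reaching paths by an assumed utility function (negative cost); the class names and cost values are illustrative.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical candidate path with an assumed cost (e.g., flight time in minutes).
class CandidatePath {
    final String name;
    final boolean reachesGoal;
    final double cost;

    CandidatePath(String name, boolean reachesGoal, double cost) {
        this.name = name;
        this.reachesGoal = reachesGoal;
        this.cost = cost;
    }
}

class PathChooser {
    // Goal-based: the goal test is binary, so the first path that reaches the goal is taken.
    static Optional<CandidatePath> goalBased(List<CandidatePath> candidates) {
        return candidates.stream().filter(p -> p.reachesGoal).findFirst();
    }

    // Utility-based: among paths that reach the goal, take the one with the highest utility.
    static Optional<CandidatePath> utilityBased(List<CandidatePath> candidates) {
        return candidates.stream()
                .filter(p -> p.reachesGoal)
                .max(Comparator.comparingDouble(PathChooser::utility));
    }

    // Assumed utility function: cheaper paths are more desirable.
    static double utility(CandidatePath p) {
        return -p.cost;
    }
}
```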

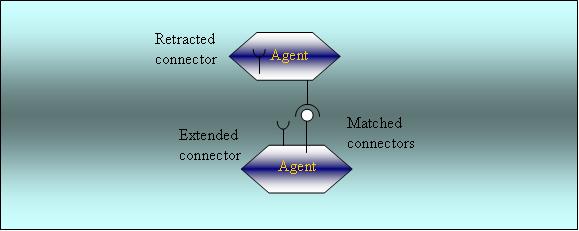

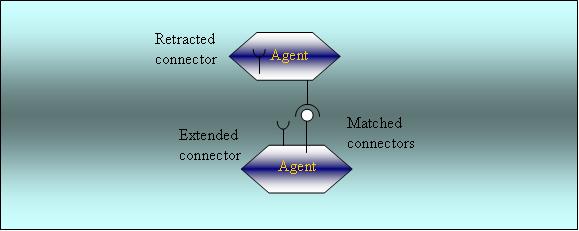

Connectors are one way to implement communication and coordination between agents; they are a particular kind of message-passing system [26][27]. Only agents in the same namespace as the source agent may receive data through a connector, so naming connectors with a namespace provides an addressing facility for communication between agents. A connector can be in one of three states: retracted, extended, or matched.

Retracted connectors are those that are not broadcast by agents; they cannot be matched to another agent. A connector may be retracted because it could not be matched within a certain time, because the conditions that created it have changed, or because the conditions for extending it have not yet been met. When a connector is extended, its inner state information is made available to the outside environment so that another agent with the same kind of connector can match it. Matching connectors may fire an action or change the state of a data structure in an agent.

Figure 10. Connector States
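The three connector states and the namespace rule can be modeled as a small state machine. This is a hypothetical Java sketch of the behavior described above, not the CMAS library's API: the class Connector, the enum ConnectorState, and the methods extend, retract, matchWith, and onMatch are our own names.

```java
// The three connector states described in the text.
enum ConnectorState { RETRACTED, EXTENDED, MATCHED }

// Hypothetical connector illustrating the state model; this is not the CMAS API.
class Connector<T> {
    final String namespace;
    private ConnectorState state = ConnectorState.RETRACTED;
    private T value;
    private Runnable onMatch = () -> { };

    Connector(String namespace) {
        this.namespace = namespace;
    }

    ConnectorState state() { return state; }

    T value() { return value; }

    // Action fired when this connector is matched (e.g., update an agent's data).
    void onMatch(Runnable action) { this.onMatch = action; }

    // Publish the value so that connectors in the same namespace can match it.
    void extend(T newValue) {
        value = newValue;
        state = ConnectorState.EXTENDED;
    }

    // Withdraw the connector, e.g. after a match timeout or a change of conditions.
    void retract() {
        value = null;
        state = ConnectorState.RETRACTED;
    }

    // Matching requires both connectors to be extended and to share a namespace.
    boolean matchWith(Connector<T> other) {
        if (state != ConnectorState.EXTENDED || other.state != ConnectorState.EXTENDED
                || !namespace.equals(other.namespace)) {
            return false;
        }
        state = ConnectorState.MATCHED;
        other.state = ConnectorState.MATCHED;
        onMatch.run();
        other.onMatch.run();
        return true;
    }
}
```

In this model, an extended connector whose match has not arrived within some time limit would simply call retract(); the timeout policy itself is left out.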

One might object that many other ways have been proposed for agents to communicate; general message passing, for instance, could be used. Before we received the CMAS library, we did use a general message-passing method, but as the project grew, so did the amount of code. After we received the CMAS library, we used connectors to communicate and coordinate the agents inside the simulation, which decreased the amount of code needed for the coordination and communication of agents. This enabled us to focus on the model rather than on the coordination of agents. With respect to our second goal, the CMAS library also makes it easier to extend the project further in the future.

Tickets are procedural instructions for agents and systems for organizing their data. There are basically two kinds of tickets: data and procedural. Data tickets organize the data structures inside an agent. These tickets have different kinds of frames, which may include names, types, and type-value pairs. The status of a ticket is determined by the status of each frame inside it. Data tickets may be used as triggers that fire an action when a set of data matches predefined criteria. Procedural tickets have methods to be executed: when certain conditions are met, the methods in the ticket's frames are executed.

Both data tickets and procedural tickets have two states: completed and incomplete. A data ticket is completed when either all of its frames are “set” or a predefined subset of its frames is “set”. Likewise, a procedural ticket is completed when either all of its frames or a predefined subset of them have been executed. The frames that must be set or executed to make a ticket complete are called primary frames.
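The completion rule for data tickets can be sketched in Java. The class DataTicket and its methods are hypothetical (the CMAS library leaves concrete tickets to the user, and its interfaces are not reproduced here); a frame counts as set when it holds a non-null value, and the ticket is complete once all primary frames are set.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical data ticket (not the CMAS interfaces): named frames that can be "set",
// plus the subset of primary frames that must be set for the ticket to be complete.
class DataTicket {
    private final Map<String, Object> frames = new HashMap<>(); // null value = not set
    private final Set<String> primaryFrames;

    DataTicket(Set<String> frameNames, Set<String> primaryFrames) {
        if (!frameNames.containsAll(primaryFrames)) {
            throw new IllegalArgumentException("primary frames must belong to the ticket");
        }
        for (String name : frameNames) {
            frames.put(name, null);
        }
        this.primaryFrames = Set.copyOf(primaryFrames);
    }

    void set(String frame, Object value) {
        if (!frames.containsKey(frame)) {
            throw new IllegalArgumentException("unknown frame: " + frame);
        }
        frames.put(frame, value);
    }

    // Complete once every primary frame is set; passing all frame names as primary
    // frames gives the "all frames must be set" variant.
    boolean isComplete() {
        for (String name : primaryFrames) {
            if (frames.get(name) == null) {
                return false;
            }
        }
        return true;
    }
}
```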

A ticket may also be sequential or non-sequential. The frames of a sequential ticket must be completed in order, while the frames of a non-sequential ticket may be set or executed in any order. In a sequential ticket, each frame may trigger the next frame to be set or executed. Tickets in which all frames must be set or executed without interruption are called synchronous, or must-complete, tickets. Tickets whose frames may be set or executed at any time are called asynchronous tickets.
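Sequential, non-sequential, and must-complete execution can be sketched with a hypothetical ProceduralTicket class in Java, where each frame is a Runnable. This is an illustration under assumed names, not the CMAS interfaces; the must-complete behavior is approximated by running all frames while holding the ticket's lock.

```java
import java.util.List;

// Hypothetical procedural ticket (not the CMAS interfaces): each frame holds a method.
class ProceduralTicket {
    private final List<Runnable> frames;
    private final boolean[] executed;
    private final boolean sequential;

    ProceduralTicket(List<Runnable> frames, boolean sequential) {
        this.frames = List.copyOf(frames);
        this.executed = new boolean[this.frames.size()];
        this.sequential = sequential;
    }

    // Executes frame i. A sequential ticket refuses a frame whose predecessor has not run;
    // a non-sequential ticket accepts frames in any order.
    synchronized void execute(int i) {
        if (sequential && i > 0 && !executed[i - 1]) {
            throw new IllegalStateException("frame " + i + " must wait for frame " + (i - 1));
        }
        frames.get(i).run();
        executed[i] = true;
    }

    // Must-complete style: runs every frame in order while holding the ticket's lock,
    // so no other thread can execute this ticket's frames part-way through.
    synchronized void executeAll() {
        for (int i = 0; i < frames.size(); i++) {
            execute(i);
        }
    }

    // Completion here requires every frame; a primary-frame subset could be checked instead.
    synchronized boolean isComplete() {
        for (boolean done : executed) {
            if (!done) {
                return false;
            }
        }
        return true;
    }
}
```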

There are other ways to associate procedural and logical information with an agent, such as plans, rules, and scripts. The difference is that tickets are more abstract and therefore more general: a ticket can hold the plans, scripts, rules, and data structures of an agent. Most of the ticket implementation in the CMAS library consists of interfaces rather than classes, so the concrete tickets are left to the user to implement for the given context.

Tickets and connectors may be used together to achieve various design options and complete coordination between agents. Connectors may be used to activate a ticket, in which case the connector acts as a precondition for the ticket: once certain conditions are met, the ticket is activated and its frames are executed.

Connectors may also be used as triggers for methods implemented in procedural tickets or to set data structures in data tickets; in this case, connectors are gates to individual frames inside the ticket. Once a connector is matched with another one, the methods in a ticket's frames may be fired, so connector matching acts like a function call. An action taken by a frame inside one ticket may in turn trigger a frame inside another ticket, so that the output released by a frame of one ticket feeds a frame of another ticket.
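The idea of connectors as gates to frames, and of one ticket's output feeding another ticket's frame, can be sketched as follows. The FrameGate class, the connector names, and the use of Consumer<Object> for frames are hypothetical, not part of the CMAS library.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical wiring (not the CMAS API): connector names act as gates to ticket frames.
class FrameGate {
    private final Map<String, Consumer<Object>> gates = new HashMap<>();

    // Bind a frame so that it fires when the connector with this name is matched.
    void bind(String connectorName, Consumer<Object> frame) {
        gates.put(connectorName, frame);
    }

    // Called on a connector match: like a function call, the matched value is
    // passed as the argument to the bound frame. Returns false if nothing is bound.
    boolean onMatch(String connectorName, Object value) {
        Consumer<Object> frame = gates.get(connectorName);
        if (frame == null) {
            return false;
        }
        frame.accept(value);
        return true;
    }
}
```

For example, a frame bound to a hypothetical track/speed connector could compute a threat level and pass it through a second gate, threat/level, to a frame of another ticket; chaining gates this way is how the output of one frame becomes the input of another.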

Many MASs are nested agent systems. At the very bottom level, reactive agents are the working units. Above them is another agent system with more cognitive agents, and so forth. Each agent system makes its own decisions in its local area, called a "membrane" or context. Relations with upper- and lower-level membranes are provided by connectors, which enable the systems to be generalized. With generalization, agent systems can not only affect their local environment but also affect outside environments and receive information from the outside world. This is called feedback.

The CMAS library was written by Neal Elzanga for the IAGO team at the Naval Postgraduate School. The library enables users to define five types of connectors: String, Integer, Double, Float, and Boolean. Each connector must be given a namespace to enable matches in the CMAS library; these namespaces stand for membranes. Once connectors are registered with the CMAS library, any query with a registered namespace anywhere in the software can reach the value of a connector whose status is extended. This lets agents communicate with each other without implementing an external connection inside the software. The CMAS library also enables the user to define tickets; both data and procedural tickets are implemented in it. The library and the IAGO project are still in progress at the Naval Postgraduate School.
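The registration-and-query behavior described above can be mimicked with a small registry. The class ConnectorRegistry and its methods are hypothetical and do not reproduce the CMAS library's real API; the sketch only illustrates that values are restricted to the five connector types and that a query succeeds only while the connector for a registered namespace is extended.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical registry mimicking the behavior described for the CMAS library.
// The class and method names are assumptions, not the library's real API.
class ConnectorRegistry {
    private static final class Slot {
        Object value;
        boolean extended;
    }

    private final Map<String, Slot> byNamespace = new HashMap<>();

    void register(String namespace) {
        byNamespace.putIfAbsent(namespace, new Slot());
    }

    // Only the five connector value types named in the text are accepted.
    void extend(String namespace, Object value) {
        if (!(value instanceof String || value instanceof Integer || value instanceof Double
                || value instanceof Float || value instanceof Boolean)) {
            throw new IllegalArgumentException("unsupported connector type: " + value);
        }
        Slot slot = byNamespace.get(namespace);
        if (slot == null) {
            throw new IllegalStateException("namespace not registered: " + namespace);
        }
        slot.value = value;
        slot.extended = true;
    }

    void retract(String namespace) {
        Slot slot = byNamespace.get(namespace);
        if (slot != null) {
            slot.extended = false;
        }
    }

    // Any code holding a registered namespace can read the value, but only while extended.
    <T> Optional<T> query(String namespace, Class<T> type) {
        Slot slot = byNamespace.get(namespace);
        if (slot == null || !slot.extended || !type.isInstance(slot.value)) {
            return Optional.empty();
        }
        return Optional.of(type.cast(slot.value));
    }
}
```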

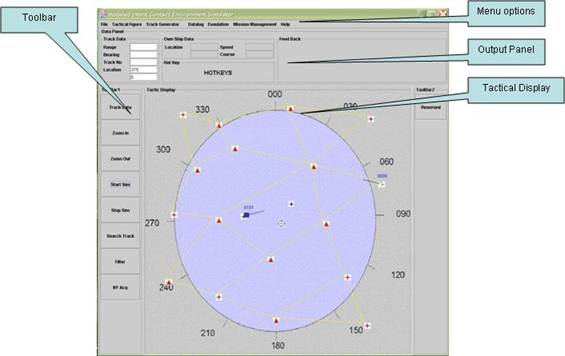

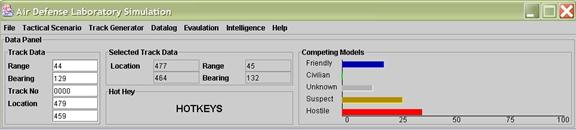

The ADL Simulation user interface has four main

components as shown in Figure 11: menu options, output panel, toolbar, and

tactical display.

The tactical figure has four drop-down menus. These menu options allow a user to specify the environment; create and delete airbases, air routes, and joint points; create user-defined aircraft; load a pre-prepared scenario; save a prepared scenario; and create an Air Tasking Order (ATO) message for friendly activity in the environment. ATO messages are prepared on a daily basis by the Air Force or Naval Force that holds the air assets, and they inform all friendly forces of the friendly air activity in the area, with its time frame, task area, IFF values, and mission specifications.

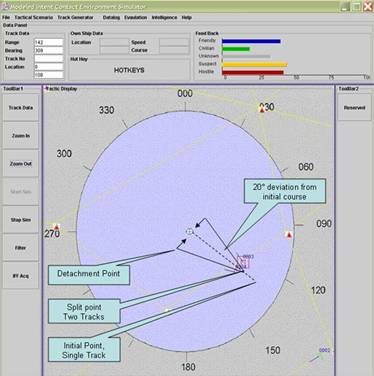

Figure 11. Visual Design of the ADL Simulation

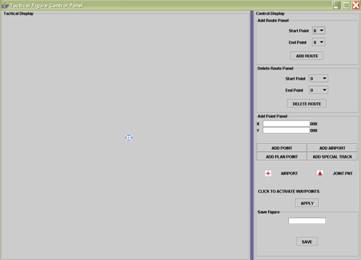

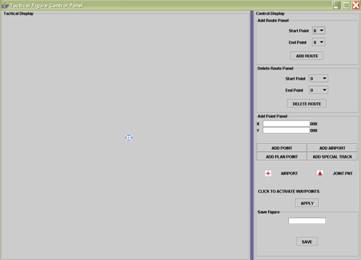

One submenu opens a new window in which the user defines the tactical scenario for the simulation. The user can create an environment by adding airports, joint points, and air routes between these points (Figure 12). The simulation finds the shortest path from each airport to every other reachable airport and stores these paths as waypoints. The method used to find the shortest route is a combination of A* and Depth-First Search (DFS).
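The shortest-route search can be illustrated with a plain A* implementation over a graph of airports and joint points. The thesis combines A* with Depth-First Search, and that combination is not reproduced here; the RouteGraph class, the planar coordinates, and the use of straight-line route lengths as costs are assumptions.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical route graph: airports and joint points with planar coordinates,
// connected by undirected air routes whose cost is their straight-line length.
class RouteGraph {
    private final Map<String, double[]> position = new HashMap<>();
    private final Map<String, List<String>> neighbors = new HashMap<>();

    void addPoint(String id, double x, double y) {
        position.put(id, new double[] {x, y});
        neighbors.putIfAbsent(id, new ArrayList<>());
    }

    void addRoute(String a, String b) {
        neighbors.get(a).add(b);
        neighbors.get(b).add(a);
    }

    private double distance(String a, String b) {
        double[] p = position.get(a);
        double[] q = position.get(b);
        return Math.hypot(p[0] - q[0], p[1] - q[1]);
    }

    // Plain A*: the straight-line distance to the goal is an admissible, consistent
    // heuristic, so the first time the goal leaves the queue its path is shortest.
    // Stale queue entries are simply re-expanded with the best known cost.
    List<String> shortestPath(String start, String goal) {
        Map<String, Double> costSoFar = new HashMap<>();
        Map<String, String> cameFrom = new HashMap<>();
        PriorityQueue<Map.Entry<String, Double>> open =
                new PriorityQueue<>(Map.Entry.comparingByValue());
        costSoFar.put(start, 0.0);
        open.add(Map.entry(start, distance(start, goal)));

        while (!open.isEmpty()) {
            String current = open.poll().getKey();
            if (current.equals(goal)) {
                LinkedList<String> path = new LinkedList<>();
                for (String node = goal; node != null; node = cameFrom.get(node)) {
                    path.addFirst(node);
                }
                return path;
            }
            for (String next : neighbors.get(current)) {
                double tentative = costSoFar.get(current) + distance(current, next);
                if (tentative < costSoFar.getOrDefault(next, Double.POSITIVE_INFINITY)) {
                    costSoFar.put(next, tentative);
                    cameFrom.put(next, current);
                    open.add(Map.entry(next, tentative + distance(next, goal)));
                }
            }
        }
        return Collections.emptyList(); // the goal cannot be reached
    }
}
```

Calling shortestPath for every pair of airports would yield the kind of airport-to-airport waypoint table described above.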

The user can also create a track by specifying its waypoints on this panel, together with the altitude, speed, IFF transponder status, IFF values, radar status, and radar emission. Agents controlling the aircraft adjust its altitude and speed based on the values at the waypoints. Changes of IFF and radar status at the waypoints are based on the geographic location of the aircraft.
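One simple way an agent could adjust altitude and speed toward waypoint values is a rate-limited approach, sketched below in Java. The class FlightControlAgent, the per-tick rate limits, and the units are assumptions for illustration; the thesis does not specify how the adjustment is computed.

```java
// Hypothetical flight-control agent: each simulation tick it moves the aircraft's
// altitude and speed toward the values stored on the current waypoint, limited by
// assumed maximum change rates.
class FlightControlAgent {
    double altitudeFt;
    double speedKts;
    private final double maxClimbFtPerTick;
    private final double maxAccelKtsPerTick;

    FlightControlAgent(double altitudeFt, double speedKts,
                       double maxClimbFtPerTick, double maxAccelKtsPerTick) {
        this.altitudeFt = altitudeFt;
        this.speedKts = speedKts;
        this.maxClimbFtPerTick = maxClimbFtPerTick;
        this.maxAccelKtsPerTick = maxAccelKtsPerTick;
    }

    // Moves value toward target by at most maxStep, landing exactly on the target.
    static double approach(double value, double target, double maxStep) {
        double delta = target - value;
        if (Math.abs(delta) <= maxStep) {
            return target;
        }
        return value + Math.signum(delta) * maxStep;
    }

    // One simulation tick toward the current waypoint's altitude and speed.
    void tick(double waypointAltitudeFt, double waypointSpeedKts) {
        altitudeFt = approach(altitudeFt, waypointAltitudeFt, maxClimbFtPerTick);
        speedKts = approach(speedKts, waypointSpeedKts, maxAccelKtsPerTick);
    }
}
```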

Another menu option loads the default scenario from the hard disk. This scenario includes the locations of the airports and joint points and the routes connecting them, which enables the user to test the same scenario multiple times without recreating the environment. The user can also create an ATO message.

Figure 12.

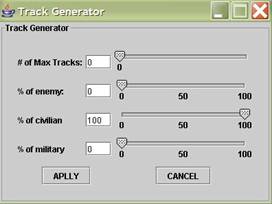

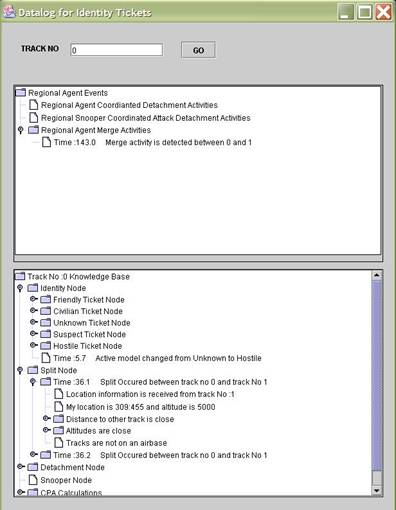

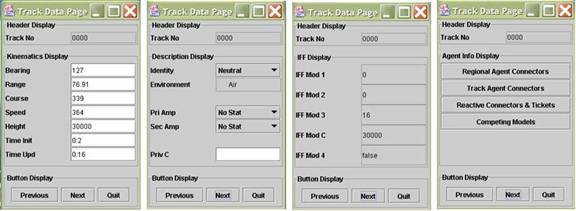

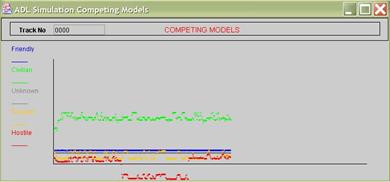

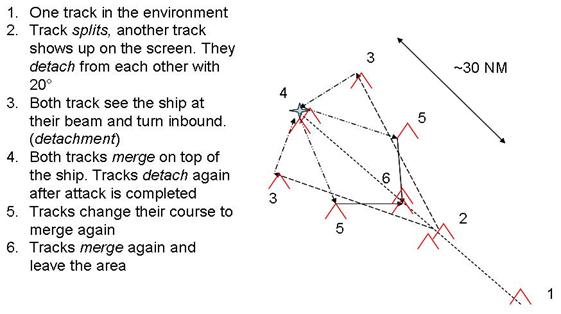

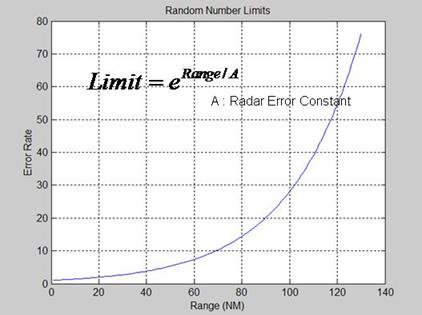

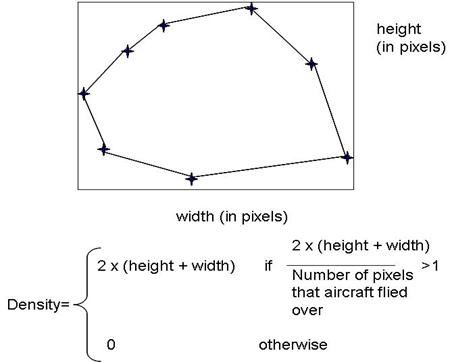

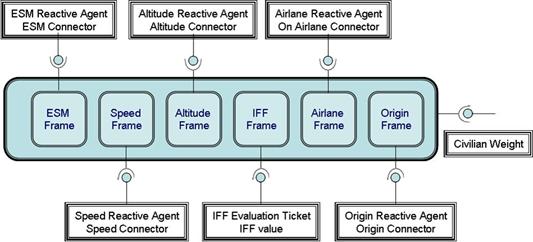

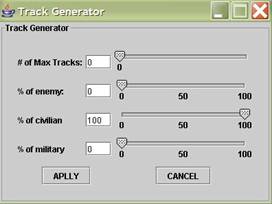

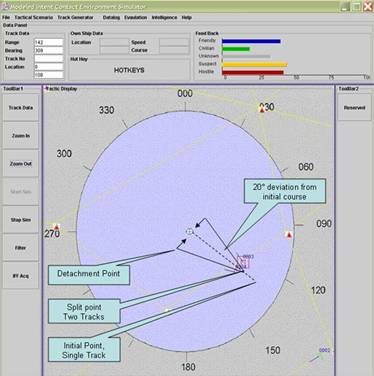

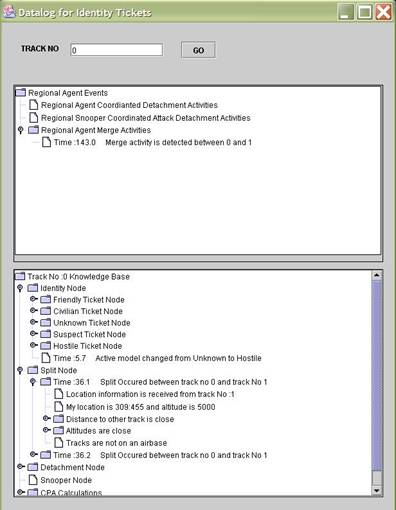

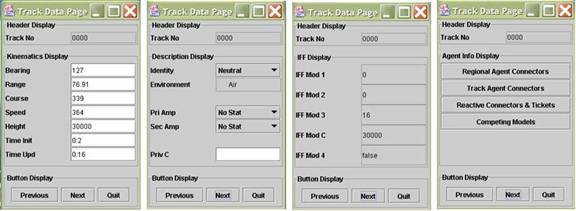

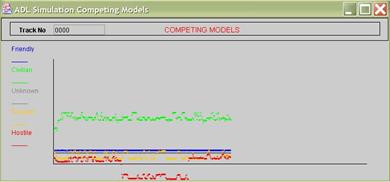

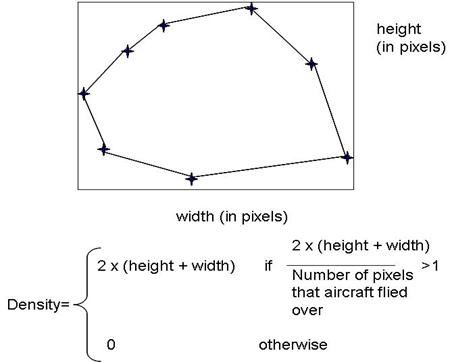

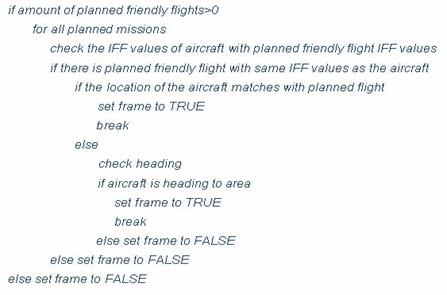

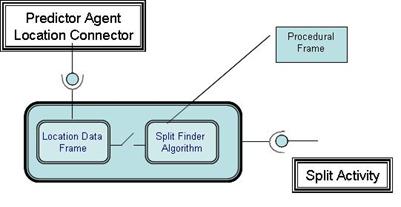

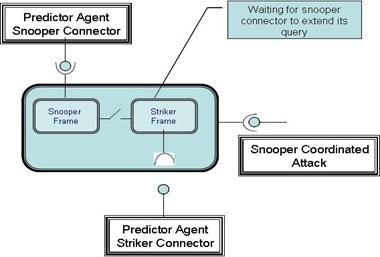

Tactical Figure Control Panel