REPORT DOCUMENTATION PAGE

Form Approved OMB No. 0704-0188

Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instruction, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188) Washington DC 20503.

1. AGENCY USE ONLY (Leave blank)

2. REPORT DATE: December 2006

3. REPORT TYPE AND DATES COVERED: Master’s Thesis

4. TITLE AND SUBTITLE: Assessing the Effects of Honeypots on Cyber-Attackers

5. FUNDING NUMBERS

6. AUTHOR(S): Sze Li Harry, Lim

7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES): Naval Postgraduate School, Monterey, CA 93943-5000

8. PERFORMING ORGANIZATION REPORT NUMBER

9. SPONSORING / MONITORING AGENCY NAME(S) AND ADDRESS(ES): N/A

10. SPONSORING / MONITORING AGENCY REPORT NUMBER

11. SUPPLEMENTARY NOTES: The views expressed in this thesis are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. Government.

12a. DISTRIBUTION / AVAILABILITY STATEMENT: Approved for public release; distribution is unlimited.

12b. DISTRIBUTION CODE

13. ABSTRACT (maximum 200 words)

A honeypot is a non-production system designed to interact with cyber-attackers in order to collect intelligence on attack techniques and behaviors. While the security community is reaping the fruits of this collection tool, the hacker community has become increasingly aware of it and has responded by developing anti-honeypot technology to detect and avoid honeypots. Until newer intelligence-collection tools are developed, we need to maintain the relevance of honeypots. Since the development of anti-honeypot technology validates the deterrent effect of honeypots, we can capitalize on this effect to develop fake honeypots. A fake honeypot is a real production system that exhibits the deterrent characteristics of real honeypots and thereby induces avoidance behavior in cyber-attackers. When deployed in a hostile information environment, fake honeypots will provide operators with workable production systems disguised as deterrent honeypots. Deployed in the midst of real honeynets, fake honeypots will confuse and delay cyber-attackers. To understand the effects of honeypots on cyber-attackers, and thus design more effective fake honeypots, we exposed a tightly secured, self-contained virtual honeypot to the Internet over a period of 28 days. We conclude that it withstood the full period of exposure without compromise. Metrics on the sizes of the last packets received suggested that cyber-attackers departed during reconnaissance.

14. SUBJECT TERMS: Fake Honeypots, Deception, Delay, Deterrence

15. NUMBER OF PAGES: 79

16. PRICE CODE

17. SECURITY CLASSIFICATION OF REPORT: Unclassified

18. SECURITY CLASSIFICATION OF THIS PAGE: Unclassified

19. SECURITY CLASSIFICATION OF ABSTRACT: Unclassified

20. LIMITATION OF ABSTRACT: UL

NSN 7540-01-280-5500 Standard Form 298 (Rev. 2-89) Prescribed by ANSI Std. 239-18


Approved for public release; distribution is unlimited.

ASSESSING THE EFFECTS OF HONEYPOTS ON CYBER-ATTACKERS

Sze Li Harry, Lim

Major, Singapore Army

B.Eng., National University of Singapore, 1998

M.Sc., National University of Singapore, 2002

Submitted in partial fulfillment of the requirements for the degree of

MASTER OF SCIENCE IN COMPUTER SCIENCE

from the

NAVAL POSTGRADUATE SCHOOL

December 2006

Author: Sze Li Harry, Lim

Approved by: Neil C. Rowe, Ph.D.

Thesis Advisor

John D. Fulp

Second Reader

Approved by: Peter J. Denning, Ph.D.

Chairman, Department of Computer Science


ABSTRACT

A honeypot is a non-production system designed to interact with cyber-attackers in order to collect intelligence on attack techniques and behaviors. While the security community is reaping the fruits of this collection tool, the hacker community has become increasingly aware of it and has responded by developing anti-honeypot technology to detect and avoid honeypots. Until newer intelligence-collection tools are developed, we need to maintain the relevance of honeypots. Since the development of anti-honeypot technology validates the deterrent effect of honeypots, we can capitalize on this effect to develop fake honeypots. A fake honeypot is a real production system that exhibits the deterrent characteristics of real honeypots and thereby induces avoidance behavior in cyber-attackers. When deployed in a hostile information environment, fake honeypots will provide operators with workable production systems disguised as deterrent honeypots. Deployed in the midst of real honeynets, fake honeypots will confuse and delay cyber-attackers. To understand the effects of honeypots on cyber-attackers, and thus design more effective fake honeypots, we exposed a tightly secured, self-contained virtual honeypot to the Internet over a period of 28 days. We conclude that it withstood the full period of exposure without compromise. Metrics on the sizes of the last packets received suggested that cyber-attackers departed during reconnaissance.


TABLE OF CONTENTS

I. INTRODUCTION

II. BACKGROUND

A. OBSERVATION, ORIENTATION, DECISION & ACTION (OODA) LOOP

B. THREAT MODELING IN COMPUTER SECURITY

C. HONEYPOTS (The Honeynet Project, 2004)

1. Know Your Enemy

2. Definition of Honeypot

3. Variations of Honeypots

4. Uses of Honeypots

5. Honeynets

6. Virtual Honeynets

D. ANTI-HONEYPOT TECHNOLOGY

E. FAKE HONEYNETS

F. INTRUSION PREVENTION SYSTEMS

G. SYSTEM INTEGRITY

H. DATA COLLECTION AND ANALYSIS

III. PROBLEM DEFINITION AND ASSUMPTIONS

A. PROBLEM DEFINITION

B. ASSUMPTIONS

1. Threat Model

a. Ignorant Cyber-Attackers

b. Honeypot-Aware Cyber-Attackers

c. Advanced Cyber-Attackers

C. GOAL

IV. EXPERIMENTAL SETUP

A. EXPERIMENT SPECIFICATION

1. Hardware Specification

2. Software Specification

B. DESIGN OF THE EXPERIMENT

V. ANALYSIS OF RESULTS

A. SECURITY OF FAKE HONEYNET

B. TRAFFIC VOLUME OF HONEYNET

C. BELIEVABILITY OF FAKE HONEYNET

D. SESSION ANALYSIS

E. TIME DOMAIN ANALYSIS

F. PACKET SIZE ANALYSIS

G. LAST PACKET RECEIVED ANALYSIS

VI. CONCLUSIONS

A. CONCLUSIONS

B. APPLICATIONS

C. FUTURE WORK

APPENDIX A: RESULT PLOTS

RESULTS FROM SESSION ANALYSIS

RESULTS FROM TIME DOMAIN ANALYSIS

APPENDIX B: SOURCE CODES

LIST OF REFERENCES

INITIAL DISTRIBUTION LIST

LIST OF FIGURES

Figure 1. High-level

Process of Threat Modeling................................................................. 4

Figure 2. Hardware

Setup................................................................................................ 15

Figure 3. Fake

Honeynet Setup........................................................................................ 16

Figure 4. Algorithm

of tcpdumpAnalysisConnectionLastPacket class................................. 20

Figure 5. Algorithm

of tcpdumpAnalysisConnectionSocketPairSizeCountTime class.......... 20

Figure 6. Plot

of TCP Session Count for Fake Honeypot Across Weeks........................... 22

Figure 7. Plot

of TCP Session Counts Across Weeks....................................................... 24

Figure 8. Plot

of Ratio of Received to Sent Bytes for Windows Server (Week 2).............. 25

Figure 9. Plot

of Ratio of Received to Sent Bytes for Windows Server (Week 3).............. 26

Figure 10. Plot

of Ratio of Received to Sent Bytes for Windows Server (Week 4).............. 26

Figure 11. Plot

of Ratio of Actual to Estimated Size against Time for Linux Host Operating

System (Week 2). 28

Figure 12. Plot

of Ratio of Actual to Estimated Size against Time for Windows 2000 Advanced

Server Operating System (Week 3).............................................................................................. 29

Figure 13. Histogram

of Size of Packets Received by Fake Honeynet (Week 2).................. 30

Figure 14. Histogram

of Size of Packets Received by Fake Honeynet (Week 3).................. 30

Figure 15. Histogram

of Size of Packets Received by Fake Honeynet (Week 4).................. 31

Figure 16. Histogram

of Size of Last Received Packet by Fake Honeynet (Week 2)............ 32

Figure 17. Histogram

of Size of Last Received Packet by Fake Honeynets (Week 3).......... 32

Figure 18. Histogram

of Size of Last Received Packet by Fake Honeynets (Week 4).......... 33

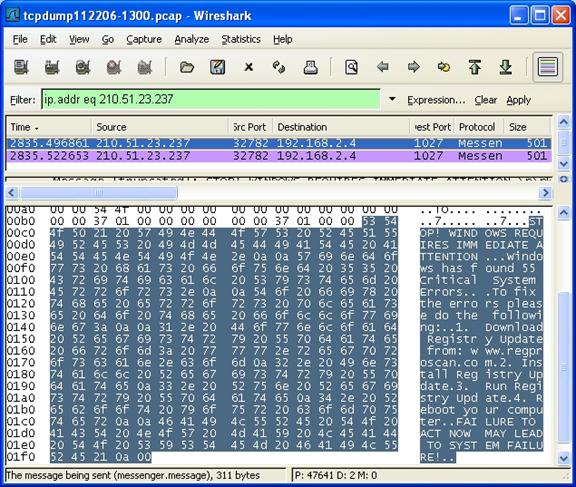

Figure 19. Screen

Capture of Packet Inspection for Packet from IP Address 210.51.23.237 (Packet

Size = 501). 40

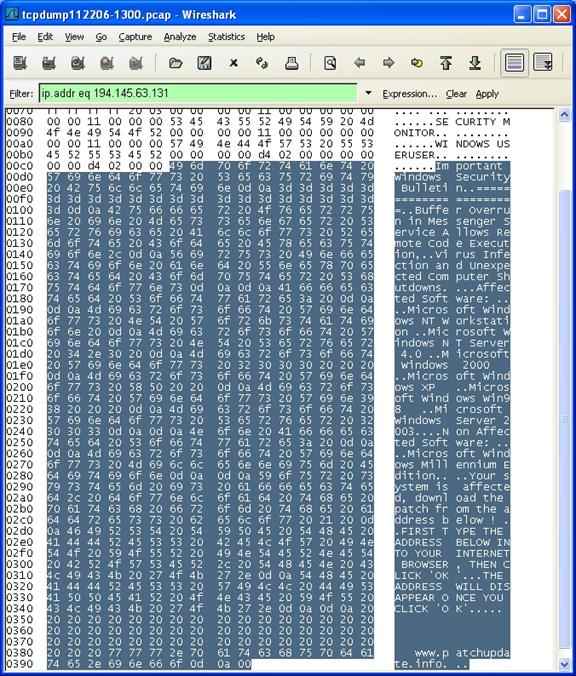

Figure 20. Screen

Capture of Packet Inspection for Packet from IP Address 194.145.63.131 (Packet

Size = 922).......................................................................................................................... 41

Figure 21. Frequency

Plot of Malicious Packet Sizes.......................................................... 42

Figure 22. Plot

of Ratio of Received to Sent Bytes for Host Linux (Week 2)...................... 45

Figure 23. Plot

of Ratio of Received to Sent Bytes for Windows XP (Week 2).................. 46

Figure 24. Plot

of Ratio of Received to Sent Bytes for Linux (Week 3)............................... 46

Figure 25. Plot

of Ratio of Received to Sent Bytes for Windows XP (Week 3).................. 47

Figure 26. Plot

of Ratio of Received to Sent Bytes for Linux (Week 4)............................... 47

Figure 27. Plot

of Ratio of Received to Sent Bytes for Windows XP (Week 4)................... 48

Figure 28. Plot

of Ratio of Actual to Estimated Size against Time for Windows 2000 Advanced

Server Operating System (Week 2).............................................................................................. 49

Figure 29. Plot

of Ratio of Actual to Estimated Size against Time for Linux Host Operating

System (Week 3). 49

Figure 30. Plot

of Ratio of Actual to Estimated Size against Time for Windows XP Operating

System (Week 3). 50

Figure 31. Plot

of Ratio of Actual to Estimated Size against Time for Linux Host Operating

System (Week 4). 50

Figure 32. Plot

of Ratio of Actual to Estimated Size against Time for Windows 2000 Advanced

Server Operating System (Week 4).............................................................................................. 51

Figure 33. Plot

of Ratio of Actual to Estimated Size against Time for Windows XP Operating

System (Week 4). 51

LIST OF TABLES

Table 1. Detecting Anti-Honeypot/Honeynet Technology

Table 2. Experimental Hardware Specification

Table 3. Experiment Software Specification

Table 4. Field Values of Packets Obtained from Wireshark

Table 5. Percentage of Traffic with Packet Size Between 50 and 100 Bytes

Table 6. Proportion of Last Packets to All Packets Received by Honeypots

Table 7. Distribution of Protocol and Flags for Last Packets (Week 2)

Table 8. Distribution of Protocol and Flags for Last Packets (Week 3)

Table 9. Distribution of Protocol and Flags for Last Packets (Week 4)

Table 10. Summary of Distribution of Protocol and Flags for All Packets in 24 hours

Table 11. Summary of Distribution of Protocol and Flags For Each Week

Table 12. Organization Name and Location of Source IP Addresses for Week 4


ACKNOWLEDGMENTS

I wish to express my gratitude to my thesis advisor, Professor Neil C. Rowe, for stimulating my interest in honeypots, and to JD Fulp for my inaugural lesson in network security.

I appreciate Han Chong, Goh, my fellow “bee” in the honeynet research, for bringing me on deck for this thesis. In addition, I would like to thank Yu Loon, Ng, and Chin Chin, Ng, for their timely morale-boosting discussions and support.

Lastly, I would like to extend my appreciation to my wife, Doris, for her unwavering love and patience during my absence while I completed this thesis.


I. INTRODUCTION

All warfare is based on deception. Hence, when able to attack, we must seem unable; when using our forces, we must seem inactive; when we are near, we must make the enemy believe we are far away; when far away, we must make him believe we are near. Hold out baits to entice the enemy. Feign disorder, and crush him.

– Sun Tzu, The Art of War

The advent of Information Technology (IT) has revolutionized the conduct of commerce, education, socio-politics, and military operations. While the technology has been assimilated into our daily lives to increase our competence, capacity, and convenience, it has also obscured the operational details of these processes. This lack of detailed information offers cyber-attackers opportunities for exploitation. Information on these exploits is usually restricted to hacker communities, which makes it very difficult for the security community to understand the threats and defend its systems.

The Honeynet Project started in June 2000 with the mandate to collect intelligence on exploitations and raise awareness of cyber-threats and vulnerabilities (The Honeynet Project, 2004). The project uses and provides tools and techniques, predominantly in the form of honeypots, in its research. Honeypots are systems that are not intended for any production or authorized activity; hence any activity other than that generated by the honeypots' administrators is deemed unauthorized or illicit. The value of honeypots lies in the collection of these activities (The Honeynet Project, 2004). Honeynets extend the concept of honeypots to networks of honeypots, thereby increasing the value that we can derive beyond that of the individual honeypot. However, cyber-attackers soon became aware of such intelligence-collection tools. This suggests that cyber-attackers could detect honeypots, which would reduce their value.

Rowe (2006) suggested an “artifice” that capitalizes on the intent of cyber-attackers to avoid honeypots: fake honeypots, proposed as a defensive technique to deter and delay cyber-attackers.

The objective of this thesis is to learn more, through experiments, about the decision cycle of cyber-attackers. The goal is to provide appropriate countermeasures by breaking the attacker’s decision cycle. We aim to establish the noticeable features of honeypots from the cyber-attacker’s perspective. Capitalizing on the deterrent effect of honeypots, we can design fake honeypots to deter and delay exploitations. Fake honeypots look and behave like real honeypots from the cyber-attacker’s perspective. These fake honeypots, however, can be production systems and do not need to record exploitation techniques. Because cyber-attackers fear detection by the honeypot administrator and assume that honeypots are of little use to ordinary cyber-attackers, fake honeypots deter them from further reconnaissance and exploitation. A random population of fake honeypots amid real honeypots in enterprise networks could create uncertainty that confuses and delays the decisions of cyber-attackers once they detect potential honeypots.

Our experimental setup included the installation and configuration of a honeypot. We collected and compared results from existing real honeypots and fake honeypots, and analyzed the data for evidence of deterrence, or “fear,” among cyber-attackers. Based on this evidence, we discuss the usefulness of fake honeypots for information-security defense.

Chapter II presents the key concepts of the thesis, such as honeypots, along with a survey of related work. Chapter III presents the problem statement, assumptions, and intent. Chapter IV details the test-bed setup, configuration, and rationale of the honeypot, including hardware, software, and network details. Chapter V presents the data analysis. Chapter VI provides conclusions and suggestions for application. Appendix A includes additional results generated from the honeynet. Appendix B includes the source code for the two Java classes.

II. BACKGROUND

This chapter provides background related to our study. The first and second sections present the decision cycle and a process for modeling threats. The third to fifth sections provide the history and an overview of honeypots, anti-honeypot/anti-honeynet technology, and fake honeynets. The sixth section highlights the differences between intrusion detection and prevention systems and their relevance to honeypot implementation. The seventh section examines the importance of system integrity in implementing a honeypot. The last section elaborates on data collection techniques and their associated tools.

A. OBSERVATION, ORIENTATION, DECISION & ACTION (OODA) LOOP

The key to military victory is to create situations in which one can make appropriate decisions and translate them into action more quickly than one's adversaries. Boyd (1976) hypothesized that all intelligent organisms undergo a continuous cycle of interaction with their environment. Boyd breaks this cycle down into four interrelated and overlapping continuous processes: observation (collection of data), orientation (analysis and synthesis of data to form a current mental perspective), decision (determination of a course of action based on the current mental perspective), and action (physical execution of decisions). This decision cycle is known as the OODA loop.

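Analysis of cyber-attacks reveals the same decision cycle: an attacker observes a target through reconnaissance, orients by interpreting what was found, decides whether and how to exploit the target, and acts on that decision. Penetrating and understanding the cyber-attacker’s OODA loop provides us with a framework for devising an effective security plan.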

B. THREAT MODELING IN COMPUTER SECURITY

To devise a cost-effective security plan, we must understand the threats we face vis-à-vis the value of the assets to be protected. Understanding the adversary’s view of the system is a critical step in the threat modeling process. Swiderski (2004) suggested the threat modeling process shown in Figure 1, which we follow here.

Figure 1. High-level Process of Threat Modeling.

C. HONEYPOTS (The Honeynet Project, 2004)

1. Know Your Enemy

If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.

- Sun Tzu, the Art of War

The concept of warfare in cyberspace is similar to that of conventional warfare. Understanding our capabilities and vulnerabilities, vis-à-vis those of our adversaries, allows us to devise defensive and offensive plans. Prior to October 1999, there was very little information about cyber-attacker threats, motives, and techniques. The Honeynet Project was officially incorporated in July 2001 as a nonprofit organization to collect and analyze cyber-attack intelligence in support of awareness. Because exploit motives and techniques were known only within cyber-attacker communities and were otherwise not widely known or understood, the Honeynet Project had to devise and employ creative attack-data collection tools such as honeypots and honeynets.

2. Definition of Honeypot

“A honeypot is an information system resource whose value lies in unauthorized or illicit use of that resource” (The Honeynet Project, 2004). A honeypot is not designated as a production-oriented component of an information infrastructure. As such, no one should use or interact with a honeypot except by accident; for example, a user who receives an incorrect Domain Name System (DNS) response may have his or her browser erroneously directed to a honeypot. Since a honeypot provides no useful public service, any transaction or interaction with it is a likely indicator of suspicious or malicious behavior.
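The definition implies a simple triage rule for honeypot traffic: anything addressed to the honeypot that its administrators did not generate is suspect. The following Java sketch illustrates that rule under stated assumptions. The class `HoneypotTrafficTriage`, its `Packet` record, and all addresses are hypothetical, and only inbound traffic is considered; outbound traffic from a honeypot would deserve even closer scrutiny.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

/** Hypothetical sketch: flag inbound packets to a honeypot that do not come from its administrators. */
final class HoneypotTrafficTriage {

    /** Minimal packet summary; real input would be decoded from a Tcpdump capture. */
    record Packet(String srcIp, String dstIp, int length) {}

    private final Set<String> honeypotIps;
    private final Set<String> adminIps;

    HoneypotTrafficTriage(Set<String> honeypotIps, Set<String> adminIps) {
        this.honeypotIps = Set.copyOf(honeypotIps);
        this.adminIps = Set.copyOf(adminIps);
    }

    /** A honeypot has no production role, so any inbound contact from a non-administrator is suspect. */
    boolean isSuspicious(Packet p) {
        return honeypotIps.contains(p.dstIp()) && !adminIps.contains(p.srcIp());
    }

    /** Returns the suspicious packets of a capture, in capture order. */
    List<Packet> suspicious(List<Packet> capture) {
        return capture.stream().filter(this::isSuspicious).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        HoneypotTrafficTriage triage = new HoneypotTrafficTriage(Set.of("10.0.0.5"), Set.of("10.0.0.1"));
        List<Packet> capture = List.of(
                new Packet("10.0.0.1", "10.0.0.5", 60),     // administrator maintenance traffic
                new Packet("203.0.113.7", "10.0.0.5", 48),  // unsolicited probe of the honeypot
                new Packet("203.0.113.7", "10.0.0.9", 48)); // not addressed to the honeypot
        System.out.println(triage.suspicious(capture).size()); // prints 1
    }
}
```

Because a honeypot has no legitimate traffic to filter out, the rule needs no signatures or thresholds. This is the property that makes honeypot data comparatively easy to analyze.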

3. Variations of Honeypots

There are two categories of honeypots: low-interaction and high-interaction. Low-interaction honeypots are passive; cyber-attackers are limited to emulated services rather than actual operating systems. They are generally easier to deploy and pose minimal risk to administrators. Examples are Honeyd, Specter, and KFSensor. High-interaction honeypots provide entire operating systems and applications for attackers to interact with. They are more complex and serve as better intelligence-collection tools. However, they pose a higher level of risk to the administrator because cyber-attackers may compromise them, for instance by using a compromised honeypot to propagate attacks to non-honeypot enterprise servers and services.

4. Uses of Honeypots

Honeypots can be deployed as production or research systems. When deployed as production systems, honeypots can serve to prevent, detect, and respond to attacks. When deployed as research systems, they serve to collect information on threats for analysis and security enhancement.

5. Honeynets

Just as deploying a high-interaction rather than a low-interaction honeypot enhances intelligence-gathering capability, the value of honeypots can be further extended by networking several of them together. Putting honeypots into networks provides cyber-attackers with a realistic network of systems to interact with and permits better analysis of distributed attacks.

6. Virtual Honeynets

Virtual honeynets (The Honeynet Project, 2004) use virtualization software such as VMware to emulate multiple systems with different operating systems on a single platform. The virtual guest machines reduce hardware requirements for administrators while giving cyber-attackers the illusion of independent systems on the network. This reduces cost and management effort for both production and research purposes. There are, however, disadvantages to deploying virtual honeynets. The guest systems that can be deployed are limited by the hardware virtualization software and the host operating system. Secure management of the host operating system and the virtualization software has to be thoroughly planned and executed, since a compromise of either may allow cyber-attackers to seize control of the entire honeynet. A virtual honeynet is also easier to identify than a honeynet deployed on real hardware, because of the presence of the virtualization software and the signatures of the virtual hardware that it emulates. Cyber-attackers may identify these signatures and thus avoid these machines, thereby defeating the purpose of deploying the honeynet.
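One example of such a signature is the network adapter's MAC address. Its first three octets, the Organizationally Unique Identifier (OUI), identify the vendor, and VMware's virtual adapters use VMware-registered OUIs. The Java sketch below checks a MAC address against the OUIs 00:05:69, 00:0C:29, 00:1C:14, and 00:50:56, which are registered to VMware. The class name is hypothetical. The check is only a heuristic: an administrator can override the MAC address, and an attacker sees it only from inside the guest or from the same network segment.

```java
import java.util.Locale;
import java.util.Set;

/** Hypothetical sketch: flag MAC addresses whose vendor prefix (OUI) is registered to VMware. */
final class VirtualNicCheck {

    // OUIs registered to VMware, Inc.; other hypervisors use their own prefixes.
    private static final Set<String> VMWARE_OUIS = Set.of("00:05:69", "00:0C:29", "00:1C:14", "00:50:56");

    /** Accepts "00-0c-29-..." or "00:0c:29:..." forms and tests the first three octets. */
    static boolean looksLikeVmware(String mac) {
        String normalized = mac.trim().replace('-', ':').toUpperCase(Locale.ROOT);
        if (!normalized.matches("([0-9A-F]{2}:){5}[0-9A-F]{2}")) {
            throw new IllegalArgumentException("not a MAC address: " + mac);
        }
        return VMWARE_OUIS.contains(normalized.substring(0, 8)); // "XX:XX:XX" is 8 characters
    }

    public static void main(String[] args) {
        System.out.println(looksLikeVmware("00:0c:29:3e:5f:01")); // prints true
        System.out.println(looksLikeVmware("00-1A-2B-3C-4D-5E")); // prints false
    }
}
```

Defenders can run the same check against their own honeynet to see what an attacker with a foothold would see.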

D. ANTI-HONEYPOT TECHNOLOGY

As security professionals began to include honeypots and honeynets in their arsenal for information defense, cyber-attackers reacted with anti-honeypot technology. Krawetz (2004) opined that the emergence of these honeypot detection tools suggested that honeypots were indeed affecting the operations of cyber-attackers. This technology can probably be extended to detect a wider variety of honeypots. Holz and Raynal (2005) introduced several techniques and tools that cyber-attackers can use to detect suspicious environments (e.g., virtual machines and the presence of debuggers). They demonstrated the detection of Sebek, a kernel module that captures attacker activity by intercepting the read() system call, by measuring the execution time of read(). Table 1 lists several honeypot and honeynet implementations, their typical characteristics, and the methods cyber-attackers may use to detect them.

| Honeypot/Honeynet | Typical Characteristics | Methods for Detecting the Honeypot |
|---|---|---|
| BackOfficer Friendly | Restricted emulated services and responses | Send different requests and verify the consistency of responses for different services. |
| Honeyd | Signature-based responses | Send a mixture of legitimate and illegitimate traffic/payload, with common signatures recognized by targeted honeypots. |
| Symantec Decoy Server or Virtual Honeynet | Virtualization | Detect virtual hardware, for instance Media Access Control (MAC) addresses. |
| Snort_inline | Modification actions | Send different packets and verify the existence and integrity of response packets. |
| Virtual Honeynet | System files | Probe for existence of VMware. |
| Active Tcpdump session or Sebek | Logging processes | Scan for active logging processes or increased round-trip time (for instance, due to read(1) in Sebek-based honeypots). |

Table 1. Detecting Anti-Honeypot/Honeynet Technology.
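The last row of Table 1 relies on timing. Following the idea in Holz and Raynal (2005), the decision step can be sketched as a comparison between read() latencies measured on a suspect host and latencies from a reference host. The sketch uses medians to damp scheduling jitter. The class name, the sample values, and the threshold factor of 1.5 are assumptions for illustration. Collecting accurate samples would require timing the system call from native code, which the sketch does not attempt.

```java
import java.util.Arrays;

/** Hypothetical sketch of the decision step in a timing test for a hooked read() system call. */
final class ReadLatencyHeuristic {

    /** Median of the samples; works on a copy so the caller's array is not reordered. */
    static double median(long[] samples) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        long[] s = samples.clone();
        Arrays.sort(s);
        int mid = s.length / 2;
        return (s.length % 2 == 1) ? s[mid] : (s[mid - 1] + s[mid]) / 2.0;
    }

    /**
     * True if the suspect host's median read() latency exceeds the reference median by more than
     * the given factor. The factor is an assumed tuning parameter, not a published value.
     */
    static boolean suggestsHookedRead(long[] referenceNanos, long[] suspectNanos, double factor) {
        return median(suspectNanos) > factor * median(referenceNanos);
    }

    public static void main(String[] args) {
        long[] reference = {410, 395, 402, 420, 399}; // hypothetical timings (ns) on a clean host
        long[] suspect = {905, 880, 930, 915, 899};   // hypothetical timings (ns) on the host under test
        System.out.println(suggestsHookedRead(reference, suspect, 1.5)); // prints true
    }
}
```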

E. FAKE HONEYNETS

Capitalizing on the advent of anti-honeypot/honeynet technology, Rowe (2006) suggested the use of fake honeypots to deter and delay cyber-attacks. These fake honeypots are real production systems that exhibit the signatures and/or behaviors of honeypots in order to deter cyber-attacks. The metrics that Rowe (2006) suggested to guide the design of good honeypots may also be used by cyber-attackers to detect and avoid potential honeypots. Using anti-honeypot/honeynet technology during reconnaissance, a cyber-attacker may conclude that a system with a poor metric score (a low probability of being a “real” system) is a honeypot, and thus cease any further exploitation of it. This defensive approach capitalizes on cyber-attackers' dislike of honeynets. Given the computing and memory capabilities of current off-the-shelf computer systems, a fake honeynet is easily implemented on a real machine as a self-contained virtual honeynet.
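The attacker-side decision that a fake honeypot exploits can be sketched as a score. The score starts at 1 (certainly a real system) and drops for each honeypot indicator observed. Below a threshold, a cautious attacker abandons the target. The indicator names, weights, and threshold in this Java sketch are hypothetical and are not the metrics proposed by Rowe (2006).

```java
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch: an attacker-side "realness" score built from observed honeypot indicators. */
final class RealnessScore {

    /** Each observed indicator lowers the score by its weight; the result is clamped to [0, 1]. */
    static double score(Map<String, Double> weights, Map<String, Boolean> observed) {
        double s = 1.0;
        for (Map.Entry<String, Double> w : weights.entrySet()) {
            if (observed.getOrDefault(w.getKey(), false)) {
                s -= w.getValue();
            }
        }
        return Math.max(0.0, Math.min(1.0, s));
    }

    /** A cautious attacker abandons the target when the score falls below the threshold. */
    static boolean attackerAbandons(double score, double threshold) {
        return score < threshold;
    }

    public static void main(String[] args) {
        Map<String, Double> weights = Map.of(
                "vmwareMac", 0.3,        // hypothetical: virtual NIC vendor prefix observed
                "slowRead", 0.4,         // hypothetical: read() latency suggests a logging hook
                "sparseUserFiles", 0.2); // hypothetical: few user documents, unlike a production host
        // A fake honeypot deliberately exposes two cheap indicators while remaining a production system.
        Map<String, Boolean> fakeHoneypot = Map.of("vmwareMac", true, "slowRead", true);
        double s = score(weights, fakeHoneypot);
        System.out.printf(Locale.ROOT, "score=%.2f abandons=%b%n", s, attackerAbandons(s, 0.5));
        // prints score=0.30 abandons=true
    }
}
```

A fake honeypot designer works the same calculation in reverse. The designer chooses which cheap indicators to expose so that a production system's score falls below the attacker's threshold.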

F. INTRUSION PREVENTION SYSTEMS

As part of the defense-in-depth approach to information security, Intrusion Detection Systems (IDS) are commonly deployed to detect potential incoming threats based on signature sets or anomalies. However, such systems are passive, and they often overwhelm administrators with alerts instead of actively responding to detected attacks. Intrusion Prevention Systems (IPS) were introduced to close this response-capability gap. They extend the detection capability of an IDS with automated responses to cyber-attacks, for instance blocking or modifying packets (Rash, Orebaugh, Clark, Pinkard and Babbin, 2005). This capability, however, comes at a significant cost in the performance of the protected networks or systems. Snort, a well-known IDS, is relevant to the post-collection analysis of Tcpdump data for detecting cyber-attacks against honeypots. Snort_inline builds on the detection capability of Snort: it works with iptables to drop, reject, or modify packets in accordance with its rule set. This will be relevant in future investigations, as it enables administrators to channel suspected cyber-attackers to a path or system of their choosing.
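The difference between passive detection and inline prevention is that an inline device returns a verdict for every packet. The toy Java model below illustrates this; it is not Snort_inline's engine or rule syntax. It applies the first matching rule and passes, drops, rejects, or rewrites the packet. All class names and rules are hypothetical. The rewrite of `/bin/sh` to `/ben/sh` is an illustrative way to disable a shell-spawning payload without changing the packet length.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical toy model of inline (IPS-style) verdicts; not Snort_inline's engine or rule syntax. */
final class InlineVerdicts {

    enum Action { PASS, DROP, REJECT, REPLACE }

    record Packet(int dstPort, String payload) {}

    /** Matches a destination port and a payload substring; REPLACE rewrites the matched content. */
    record Rule(int dstPort, String content, Action action, String replacement) {
        boolean matches(Packet p) {
            return p.dstPort() == dstPort && p.payload().contains(content);
        }
    }

    /** The verdict, plus the packet to forward (empty if the packet is not forwarded). */
    record Verdict(Action action, Optional<Packet> forwarded) {}

    /** First matching rule wins; packets matching no rule are passed unchanged. */
    static Verdict inspect(List<Rule> rules, Packet p) {
        for (Rule r : rules) {
            if (!r.matches(p)) {
                continue;
            }
            switch (r.action()) {
                case PASS:
                    return new Verdict(Action.PASS, Optional.of(p));
                case REPLACE:
                    // Equal-length substitution keeps the packet size unchanged.
                    if (r.replacement().length() != r.content().length()) {
                        throw new IllegalArgumentException("replacement must match content length");
                    }
                    String rewritten = p.payload().replace(r.content(), r.replacement());
                    return new Verdict(Action.REPLACE, Optional.of(new Packet(p.dstPort(), rewritten)));
                default:
                    // DROP discards silently; a real REJECT would also notify the sender (e.g., TCP reset).
                    return new Verdict(r.action(), Optional.empty());
            }
        }
        return new Verdict(Action.PASS, Optional.of(p));
    }

    public static void main(String[] args) {
        List<Rule> rules = List.of(
                new Rule(80, "/bin/sh", Action.REPLACE, "/ben/sh"),
                new Rule(445, "", Action.DROP, null)); // empty content matches any payload
        System.out.println(inspect(rules, new Packet(80, "GET /bin/sh")).forwarded().get().payload()); // prints GET /ben/sh
        System.out.println(inspect(rules, new Packet(445, "SMB")).action()); // prints DROP
    }
}
```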

To implement a self-contained virtual honeynet, we must try to ensure that the host operating system will not be compromised, and if it is compromised, we must be able to isolate the compromise. The typical approach to maintaining system integrity is to establish baseline system fingerprints (i.e., hash “checksums”) before any operation that may result in compromise, such as connecting to the Internet, and then periodically check the computer files against these fingerprints. The fingerprints can be encrypted and stored offline to protect them from compromise. If any of these files is modified, the administrator can investigate whether the modification is legitimate and update the offline fingerprint database accordingly. There are many ways to implement such system integrity features. Tripwire, Intact, and Veracity are high-end commercial integrity-checking solutions. Osiris is an open-source host-integrity monitoring system that keeps the administrator apprised of possible compromises or changes to the file system, resident kernel modules, or user and group lists. Alternatively, scripts or programs can be written to automate the hashing of system files.
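As an illustration of the last option, the sketch below hashes every regular file under a directory to build a baseline of fingerprints. It then compares a later baseline with the stored one and reports files that were added, removed, or modified. This is a minimal sketch, not Osiris and not the MD5sum script used in this work. The class name FileFingerprints and its methods are hypothetical. MD5 is used only to mirror the MD5sum approach; a stronger digest such as SHA-256 can be substituted through MessageDigest.getInstance.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: baseline fingerprints for the files under a directory tree.
class FileFingerprints {

    // Hex-encoded MD5 digest of one file, read in 8 KB chunks.
    static String md5Hex(Path file) throws IOException, NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        try (InputStream in = Files.newInputStream(file)) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                md.update(buf, 0, n);
            }
        }
        StringBuilder hex = new StringBuilder();
        for (byte b : md.digest()) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    // Maps each regular file (path relative to root) to its digest, sorted by path.
    static Map<String, String> baseline(Path root) throws IOException, NoSuchAlgorithmException {
        List<Path> files;
        try (Stream<Path> walk = Files.walk(root)) {
            files = walk.filter(Files::isRegularFile).collect(Collectors.toList());
        }
        Map<String, String> digests = new TreeMap<>();
        for (Path p : files) {
            digests.put(root.relativize(p).toString(), md5Hex(p));
        }
        return digests;
    }

    // Compares a stored baseline with a fresh one and lists what changed.
    static List<String> changes(Map<String, String> before, Map<String, String> after) {
        List<String> report = new ArrayList<>();
        for (Map.Entry<String, String> e : before.entrySet()) {
            String now = after.get(e.getKey());
            if (now == null) {
                report.add("REMOVED " + e.getKey());
            } else if (!now.equals(e.getValue())) {
                report.add("MODIFIED " + e.getKey());
            }
        }
        for (String path : after.keySet()) {
            if (!before.containsKey(path)) {
                report.add("ADDED " + path);
            }
        }
        return report;
    }

    public static void main(String[] args) throws Exception {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        Map<String, String> base = baseline(root);
        System.out.println("Fingerprinted " + base.size() + " files under " + root);
    }
}
```

In practice, the baseline map would be written out, encrypted, and kept offline, as described above. Walking system directories typically requires root privileges.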

Jones (2006) categorized Network-Based Evidence (NBE) for analysis into four types: full content data, session data, alert data, and statistical data. Full content data consists of the actual packets, typically including the headers and application information, as seen on the transmission media. It provides complete information but consumes significant disk space. Wireshark (formerly known as Ethereal) is a memory-intensive application that captures full content data from network connections and offers a good user interface for analyzing it. Tcpdump is a lightweight tool used for similar collection. With these tools, analysts can easily be overwhelmed with too much information. Richer analysis is normally conducted by examining the session data of particularly interesting sessions with tools like Scanmap3d; however, the challenge is to identify the sessions of interest. Alert data generated by tools such as Snort, Shoki, and Bro provides intrusion detection alerts based on signatures and rules, and it usually amounts to much less data than session data. Statistical data on system parameters is often useful for performance monitoring. Such monitoring can trigger new alerts that may not be obvious at lower (more detailed) levels. Tcpstat and Tcpdstat are common open-source statistical tools.

Tcpdump data was the main source of data collected for the research reported here. Wireshark provided most of the common processing tools required to analyze the Tcpdump data, but its processed output did not fully meet our analysis requirements. As such, simple programs had to be developed to extract and cluster the raw Tcpdump data.


III. PROBLEM DEFINITION AND ASSUMPTIONS

A. PROBLEM DEFINITION

Without constant innovation and improvement in the deployment of honeypots, their effectiveness will decrease as cyber-attackers become increasingly aware of them. Signatures for detecting honeypots could become available to cyber-attackers, enabling them to avoid honeypots much as attack signatures enable defenders to recognize attacks. Until newer cyber-attack intelligence collection tools arrive, we need to maintain the effectiveness and relevance of honeypots. Cyber-attackers have devised mechanisms to evade some of our detection and protection systems. We can likewise deploy real and fake honeynets to create confusion and obfuscation, and perhaps evade their reconnaissance and attacks.

Honeypots have been deployed to prevent, detect, and respond to attacks; however, they are usually restricted to conventional passive deployment at the enterprise level. The deployment of an active self-contained virtual honeynet as a preventive security measure for the individual host terminal, rather than as a data collection tool, has not been tested. This thesis explores the use of a self-contained virtual honeynet as one layer in a defense-in-depth approach. This is particularly relevant in rapid-response deployment scenarios where operators may be constrained to a small logistics footprint.

B. ASSUMPTIONS

1. Threat Model

For the purposes of this thesis, we categorize cyber-attackers into the following groups.

a. Ignorant Cyber-Attackers

These cyber-attackers are assumed to be ignorant of the existence of honeypot technology and not to understand its nature. They consist of “script kiddies” or amateurs with little experience in offensive information operations. Their tools are often downloaded from the Internet or distributed by fellow cyber-attackers. They are motivated by thrill, challenge, pleasure, recognition, and occasionally profit (in terms of “owning” machines as bots). Most of their attacks can be prevented by standard information security best practices.

b. Honeypot-Aware Cyber-Attackers

These cyber-attackers are aware of honeypot technology and use a combination of automated and “manual” hacking tools, including tools equipped with honeypot detection signatures. They may be experienced in offensive information operations. They assess risk and are cautious in their navigation and attacks. Generally, they will be “scared” off by the existence (or perceived existence) of honeypots, since there are so many easier targets. They may be motivated by knowledge and mission requirements. This is the ideal group to target with fake honeypots.

c. Advanced Cyber-Attackers

These cyber-attackers have detailed knowledge of honeypots. They are usually goal-specific in nature and able to stealthily probe potential honeypots to distinguish real ones from fake ones. Fake honeypots are not designed to deceive this group. However, if they are deployed amidst real honeypots, the fakes are expected to obscure the real ones and hence slow the decision cycle of these cyber-attackers. In addition, detection of honeypots (fake or real) may frustrate these cyber-attackers, leading to emotional rather than logical reactions (Rowe, 2006).

The goal of this thesis is to establish that cyber-attackers alter their behavior in a measurable way when they encounter honeypots. These behavioral changes can then be exploited when deploying fake honeypots to deter honeypot-aware cyber-attackers. We also explore the feasibility of using honeypots to delay advanced cyber-attackers through obfuscation and confusion.

This chapter details the hardware, software, and topological layout of the honeynet used in our experiments. We report the problems encountered in setting up the experiment. We also discuss the motivation behind the Java programs used to aid the analysis of the Tcpdump data.

The hardware setup prepared by Duong (2006) for her M.S. thesis was maintained as a control setup of a real honeynet. It consisted of four machines: a router, a machine hosting a real honeynet, a data capture machine, and a machine hosting a fake honeynet. Instead of a dedicated router, a Belkin router was used to perform network address translation (NAT). Table 2 lists the hardware specification, and Figure 2 illustrates the setup.

| Machine | Component | Specification |
|---|---|---|
| Router (Dell Dimension XPS B933) | Processor | Intel Pentium III, 933 MHz |
| | Storage | Maxtor (Ultra ATA), 20 GB |
| | Memory | 512 MB |
| | NIC | Davicom Semiconductor 21x4x DEC Tulip-compatible 10/100 Mbps; 3Com 3C905C-TX Fast Etherlink 10/100 Mbps; 3Com 3C905C-TX Fast Etherlink 10/100 Mbps |
| | Drives | DVD-ROM, CD-RW, Zip, Floppy |
| Honeynet (Dell Optiplex GX520) | Processor | Intel Pentium 4, 2.80 GHz |
| | Storage | Serial ATA, 40 GB |
| | Memory | 1024 MB |
| | NIC | Dell NetXtreme BCM5751 Gigabit Ethernet PCI Express (integrated) |
| | Drives | CD-RW, Floppy |
| Data Capture (Gateway) | Processor | Intel Pentium 4, 1.80 GHz |
| | Storage | Western Digital (Ultra ATA), 40 GB |
| | Memory | 256 MB |
| | NIC | EtherExpress Pro/100 VE (integrated) |
| | Drives | CD-RW, Floppy |
| Fake Self-contained Honeynet (Dell Inspiron 6000) | Processor | Intel Pentium 4, 1.86 GHz |
| | Storage | Seagate (Ultra ATA), 80 GB |
| | Memory | 1.25 GB |
| | NIC | Broadcom 440x 10/100 Integrated Controller; Dell Wireless WLAN Card |
| | Drives | NEC ND-6650A 8x DVD+/-RW |
| Router | | Belkin Wireless G Router |

Table 2. Experimental Hardware Specification.

Figure 2. Hardware Setup.

The software specification from Duong’s honeynet setup was maintained. In addition, SUSE Linux 10 was installed on the fake honeynet machine. The intent was to replicate the software configuration of the real honeynet to provide a common basis for comparison.

Scanners that detect VMware are increasingly popular as a means of identifying honeynets. For this reason, VMware Workstation 5.5 was set up to host Microsoft Windows XP Professional with Service Pack 2 and Microsoft Windows 2000 Advanced Server with Service Pack 4, so that the fake honeynet would present this sign of a honeynet to scanning attackers. Figure 3 shows the fake honeynet setup.

Figure 3. Fake Honeynet Setup.

As the two guest operating systems were to simulate honeypots, they were not fully equipped with all common applications and services. Since it would be unusual for a user to work on machines without common applications like Microsoft Office, this served as another attempt to suggest to attackers that a honeynet was present. Lastly, the Snort intrusion-detection system and the Tcpdump packet collector were installed, though only Tcpdump was running as an active process. Tcpdump was selected for its simplicity and its ability to capture full content data. This served to create the impression of the active logging characteristic of a honeynet. All software was fully patched with the latest updates. The baseline fingerprints for the host Linux machines were obtained using the host integrity monitoring system Osiris 4.2.2 prior to connecting to the Internet. Unlike the real honeypot, the integrity of the guest operating systems (OS) on the fake honeynet, namely Windows XP Professional and Windows 2000 Advanced Server, is of lesser concern, as they are not part of the production systems. The only concern is that they may be “owned” or compromised and used as a launch pad for other attacks. This risk can be significantly reduced by frequently remounting a fresh guest operating system and activating automatic updates.

The analysis machines were not included in the experiment

setup. They were used to test for network connectivity and perform

troubleshooting. Wireshark was installed on a separate machine to facilitate

analysis of full content data.

| Machine | Primary Goal | Software |
|---|---|---|
| Router (SUSE Linux 10) | Sniff traffic, send captured data to Data Capture | Snort 2.4.3 (intrusion detection system); Tcpdump (packet capture) |
| Honeynet (SUSE Linux 10) | Solicit attacks | Tcpdump (packet capture); VMware Workstation 5.5 hosting Windows 2000 Advanced Server with SP4 and Windows XP Professional with SP2 |
| Data Capture (SUSE Linux 10) | Store Snort data | PostgreSQL 8.1.1 |
| Fake Self-contained Honeynet (SUSE Linux 10) | Solicit/deter attacks | Osiris (Unix integrity checker); Tcpdump (packet capture); VMware Workstation 5.5 hosting Windows 2000 Advanced Server with SP4 and Windows XP Professional with SP2 |

Table 3. Experiment Software Specification.

Full-content Tcpdump data was collected at the Ethernet interface of the fake honeynet over a period of 28 days. The command “tcpdump -s 0 -i eth0 -nn -w outputfile.pcap” (run as root) was used to capture entire packets from network interface eth0. The flags capture packets without truncation (-s 0), skip host and port name resolution (-nn), and write the packets to a Pcap file (-w). The Pcap dump file was collected weekly for analysis. Similar data was collected for the real honeynet over a period of 21 days.

Wireshark was used to process the Pcap file into readable text. We were particularly interested in the TCP sessions, which can be obtained using the Statistics|Sessions command. To facilitate subsequent analyses, the name resolution box was unchecked so that unresolved (numeric) addresses were displayed instead of the default resolved names.

In addition to the session information, we wanted similar information for each pair of Internet Protocol (IP) addresses, which we termed an IP connection. However, the last packet of each connection, which was of particular interest to us, was not available through Wireshark's standard built-in utilities. Selected display fields from Wireshark were therefore exported for further analysis. They included:

| Field | Packet Description |
|---|---|
| Serial Number | Serial number of the packet |
| Time | Time elapsed since the collection command was issued |
| SrcIP | Source Internet Protocol address |
| SrcPort | Source port |
| DestIP | Destination Internet Protocol address |
| DestPort | Destination port |
| Size | Size of packet in bytes |

Table 4. Field Values of Packets Obtained from Wireshark.

Two simple Java classes were developed to digest these exported files. The first, tcpdumpAnalysisConnectionLastPacket, read the exported files from Wireshark and extracted the last packet of each connection between two machines (based on their IP addresses). It then generated an output file containing the meta-data of the last packet of each connection. The second, tcpdumpAnalysisConnectionSocketPairSizeCountTime, read the IP address of interest and the exported files from Wireshark. It generated the cumulative size and count of each socket pair across time, where each socket pair included in the report had the IP address of interest as either the source or destination IP. It produced an output file containing the meta-data of the last packet of each socket pair of interest. The procedures followed by these programs are illustrated in Figure 4 and Figure 5.
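The core step of the first class, keeping only the last packet of each IP connection, can be sketched as follows. This is a minimal illustration under stated assumptions, not the thesis's tcpdumpAnalysisConnectionLastPacket code. It assumes that the Wireshark export is a comma-separated file whose rows hold the Table 4 fields in the order serial,time,srcIP,srcPort,destIP,destPort,size. The names LastPacketPerConnection, Packet, and lastPackets are hypothetical. An optional receiver address restricts the result to packets received by a given honeypot.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LastPacketPerConnection {

    // One exported row with the fields of Table 4 (hypothetical CSV layout).
    static final class Packet {
        final long serial;
        final double time;
        final String srcIp;
        final int srcPort;
        final String destIp;
        final int destPort;
        final int size;

        Packet(long serial, double time, String srcIp, int srcPort,
               String destIp, int destPort, int size) {
            this.serial = serial;
            this.time = time;
            this.srcIp = srcIp;
            this.srcPort = srcPort;
            this.destIp = destIp;
            this.destPort = destPort;
            this.size = size;
        }

        // Parses "serial,time,srcIP,srcPort,destIP,destPort,size".
        static Packet parse(String line) {
            String[] f = line.split(",");
            return new Packet(Long.parseLong(f[0].trim()), Double.parseDouble(f[1].trim()),
                    f[2].trim(), Integer.parseInt(f[3].trim()),
                    f[4].trim(), Integer.parseInt(f[5].trim()), Integer.parseInt(f[6].trim()));
        }
    }

    // Order-independent key for an IP pair; ports are ignored.
    static String connectionKey(Packet p) {
        return p.srcIp.compareTo(p.destIp) <= 0
                ? p.srcIp + "<->" + p.destIp
                : p.destIp + "<->" + p.srcIp;
    }

    // Last packet per IP connection, assuming rows are in capture order.
    static Map<String, Packet> lastPackets(List<Packet> packets) {
        return lastPackets(packets, null);
    }

    // Same, but when receiverIp is non-null only packets sent to it are considered.
    static Map<String, Packet> lastPackets(List<Packet> packets, String receiverIp) {
        Map<String, Packet> last = new LinkedHashMap<>();
        for (Packet p : packets) {
            if (receiverIp != null && !p.destIp.equals(receiverIp)) {
                continue;
            }
            String key = connectionKey(p);
            last.remove(key); // re-insert so iteration follows the order of last packets
            last.put(key, p);
        }
        return last;
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.err.println("usage: LastPacketPerConnection export.csv [receiverIp]");
            return;
        }
        List<Packet> packets = new ArrayList<>();
        for (String line : Files.readAllLines(Paths.get(args[0]))) {
            if (!line.isEmpty() && Character.isDigit(line.charAt(0))) { // skip header lines
                packets.add(Packet.parse(line));
            }
        }
        String receiver = args.length > 1 ? args[1] : null;
        for (Map.Entry<String, Packet> e : lastPackets(packets, receiver).entrySet()) {
            Packet p = e.getValue();
            System.out.println(e.getKey() + "," + p.serial + "," + p.time + "," + p.size);
        }
    }
}
```

A LinkedHashMap keeps the output ordered by when each connection was last seen, which is convenient when plotting last-packet sizes over time.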

Figure 4. Algorithm of tcpdumpAnalysisConnectionLastPacket class.

Figure 5. Algorithm of tcpdumpAnalysisConnectionSocketPairSizeCountTime class.
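The accumulation performed by the second class can be sketched as below. For every packet that involves the IP address of interest, the code updates a running packet count and byte total for that packet's socket pair (IP address and port on both ends, in either direction). It emits one row per packet, so the totals can be plotted against time. The input layout and all names here (SocketPairTotals, Row, cumulative) are hypothetical and only illustrate the idea. The sketch takes already-parsed packets to stay short.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SocketPairTotals {

    // Minimal packet record: time (s), endpoints, and size in bytes.
    static final class Packet {
        final double time;
        final String srcIp;
        final int srcPort;
        final String destIp;
        final int destPort;
        final int size;

        Packet(double time, String srcIp, int srcPort, String destIp, int destPort, int size) {
            this.time = time;
            this.srcIp = srcIp;
            this.srcPort = srcPort;
            this.destIp = destIp;
            this.destPort = destPort;
            this.size = size;
        }
    }

    // One output row: the running totals of a socket pair after this packet.
    static final class Row {
        final double time;
        final String socketPair;
        final long cumulativeCount;
        final long cumulativeSize;

        Row(double time, String socketPair, long cumulativeCount, long cumulativeSize) {
            this.time = time;
            this.socketPair = socketPair;
            this.cumulativeCount = cumulativeCount;
            this.cumulativeSize = cumulativeSize;
        }
    }

    // Direction-independent key "ip:port<->ip:port".
    static String socketPairKey(Packet p) {
        String a = p.srcIp + ":" + p.srcPort;
        String b = p.destIp + ":" + p.destPort;
        return a.compareTo(b) <= 0 ? a + "<->" + b : b + "<->" + a;
    }

    // Running count and byte total per socket pair, for packets touching ipOfInterest.
    static List<Row> cumulative(List<Packet> packets, String ipOfInterest) {
        Map<String, long[]> totals = new HashMap<>(); // key -> {count, bytes}
        List<Row> rows = new ArrayList<>();
        for (Packet p : packets) {
            if (!p.srcIp.equals(ipOfInterest) && !p.destIp.equals(ipOfInterest)) {
                continue;
            }
            String key = socketPairKey(p);
            long[] t = totals.computeIfAbsent(key, k -> new long[2]);
            t[0] += 1;
            t[1] += p.size;
            rows.add(new Row(p.time, key, t[0], t[1]));
        }
        return rows;
    }

    public static void main(String[] args) {
        // Illustrative packets; 192.168.1.10 stands in for a honeypot address.
        List<Packet> packets = Arrays.asList(
                new Packet(0.0, "10.0.0.5", 1025, "192.168.1.10", 80, 60),
                new Packet(0.1, "192.168.1.10", 80, "10.0.0.5", 1025, 1500),
                new Packet(0.2, "10.0.0.5", 1026, "192.168.1.10", 445, 54));
        for (Row r : cumulative(packets, "192.168.1.10")) {
            System.out.println(r.time + "," + r.socketPair + "," + r.cumulativeCount + "," + r.cumulativeSize);
        }
    }
}
```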

This chapter details the analysis of the collected Tcpdump data. It highlights observed network traffic trends that may be useful for future investigation.

One goal of this investigation was to determine the effectiveness of the fake honeynet in deterring or confusing cyber-attackers. The intent was not to employ it as the sole security mechanism but to include it as part of a comprehensive suite of security mechanisms that enhance a protected asset’s defense-in-depth. Following standard practices for the secure management of information systems, the fake honeynet was patched and hardened. The initial system fingerprints were generated using an MD5sum script, and Osiris was installed to manage system integrity. The guest and host operating systems interfaced with the Internet behind a Belkin router that provided NAT. Standard application firewalls were maintained to prevent hostile traffic from reaching the host operating system. We ran the collected Tcpdump data through Snort 2.4.5 and received no alerts suggesting any successful break-ins to the host or guest operating systems; all alerts received were of Priority 3 (low priority). In addition, review of our network-based evidence showed no break-in behavior, such as the installation of rootkits. Together with the results of our system integrity checks, this lets us assert with some confidence that the host operating system was not compromised during the 28 days of exposure.

Due to the limitations of our experimental design, the good security state of the host operating system offered limited insight into the decision cycle of the cyber-attackers. Figure 6 shows the number of TCP sessions for the fake and real honeynets.

Figure 6. Plot of TCP Session Count for Fake Honeypot Across Weeks.

The traffic on the fake honeynet was significantly lower than the traffic on the real honeynet. For the first week, the fake honeynet was set up at home, using Comcast as the Internet Service Provider (ISP); we attributed its low traffic to the inaccessibility of that IP address. In the second week, we moved the fake honeynet to the same subnet as the real honeynet, and TCP traffic volume increased significantly. The IP address of the real honeynet had been available to hackers for almost a year and had been disseminated online, so its subnet could be deemed a hot zone for TCP traffic.

In the third week, we experimented with advertising both the real and fake honeynets in Web logs (blogs) and hackers’ discussion forums. Advertisement produced a sixfold increase in TCP traffic. In addition, we obtained information from the hackers’ discussion forum pertaining to our system configuration and our physical address. Some comments stated that our fake honeynet was so tightly maintained that it was difficult for an amateur hacker to break into it, which further supported our assertion about the security of the fake honeynet. In the fourth week, we observed a 50% drop in TCP traffic, possibly due to a loss of interest in the honeynet. The results of their reconnaissance might have suggested to the attackers that they were not adequately equipped with tools for further reconnaissance or exploitation. In addition, the fourth week coincided with the Thanksgiving weekend, which may have reduced activity by American hackers. However, the wide distribution of attack times suggested that we were also receiving attacks from all over the world. Either explanation supports the hypothesis that most cyber-attackers visiting our honeynet were amateurs. Professional awareness of anti-honeypot technology began in 2003, yet the amateurs visiting our honeynet still appeared to lack awareness of, or tools for, anti-honeypot technology. Consequently, the fear or deterrent effect could not be observed among these amateur cyber-attackers.

To deceive, we needed to establish the believability of a honeynet from the perspective of the cyber-attackers. We attempted to duplicate the setup of the real honeynet within our constraints. The lightweight, self-contained design aided deployability while maintaining a high level of interaction. The fully functional guest and host operating systems were connected to the Internet through a bridged configuration, in which VMware emulated multiple virtual network interfaces over the single physical network interface of the host system. The Belkin router provided Dynamic Host Configuration Protocol (DHCP) services and assigned each operating system a unique IP address. This would be a typical network configuration for a home or office, especially with the increasing popularity of wireless home and office networks. From the perspective of the cyber-attackers, it should have been difficult to decide whether the downstream machines were physical or virtual, let alone real or fake honeypots.

Figure 7 compares the number of TCP sessions for the real and fake honeynets. The figures for each week varied significantly. As discussed above, the variation was attributed to the established IP address used by the real honeynet. Ignoring week 1, it is interesting to note that session counts rose and fell similarly over the subsequent weeks. Hence, we concluded that the fake honeynet was not significantly different from the real honeynet from the perspective of the cyber-attackers over the network.

Figure 7. Plot of TCP Session Counts Across Weeks.

The session statistics were generated by Wireshark. For each honeynet machine, the ratio of received to sent bytes was plotted against the session number. Sessions were numbered in order of occurrence, and the associated IP address was listed for each session. The plots for Windows 2000 Advanced Server are discussed in this chapter; the others are in Appendix A.

Figures 8, 9, and 10 show the plots for weeks 2, 3, and 4. In week 2, the ratio ranged from 0.76 to 12.93. Based on similar observations, we concluded that ratios above three suggested legitimate sessions between the two machines, for instance, a download of data from the designated IP address. Ratios between one and three indicated session support activities, such as attempts to set up a session or request services. Excluding the first data point, Figure 9 illustrates session support activities with ratios from 1.68 to 2.93, in which the machine was performing domain name system (DNS) queries to our DNS server. The “recursion desired” flag was set to one, and this resulted in many queries to that IP address. Further inspection of the associated raw packets revealed that this behavior was triggered by the Windows 2000 Advanced Server in our honeynet, with an intended destination of update.microsoft.com. We assumed this to be benign traffic, but a similar traffic profile involving a different remote site might have been deemed a port scan and triggered alerts. This suggests the need to turn off automatic updates when setting up a honeypot or honeynet in order to remove these alerts, as they might mask other interesting traffic from cyber-attackers. The administrator can, however, schedule manual updates in a controlled fashion to keep the system current. In fact, other than initial network diagnostics to confirm connectivity, a honeynet should refrain from generating superfluous administrative traffic such as web browsing and downloading of applications or updates. The spike in Figure 10 is the download of updates from Microsoft Corporation, whereas the ensuing traffic could be a suspicious port scan.
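The thresholds stated above can be written down as a small classification rule. The sketch assumes the thresholds exactly as given in the text: a ratio above three indicates data transfer, and a ratio from one to three (inclusive) indicates session support. Ratios below one are not characterized in the text, so they are left unclassified here, as are sessions with no bytes sent. The class and enum names are hypothetical.

```java
class SessionRatioRule {

    enum Category { DATA_TRANSFER, SESSION_SUPPORT, UNCLASSIFIED }

    // Received-to-sent byte ratio; NaN when nothing was sent (ratio undefined).
    static double ratio(long bytesReceived, long bytesSent) {
        return bytesSent == 0 ? Double.NaN : (double) bytesReceived / bytesSent;
    }

    // Thresholds taken from the discussion of Figures 8 to 10.
    static Category classify(double ratio) {
        if (ratio > 3.0) {
            return Category.DATA_TRANSFER;   // e.g., a download from the remote address
        }
        if (ratio >= 1.0) {
            return Category.SESSION_SUPPORT; // e.g., session setup or service requests
        }
        return Category.UNCLASSIFIED;        // below 1, or NaN (all comparisons are false)
    }

    public static void main(String[] args) {
        double[] observed = {12.93, 2.93, 1.68, 0.76}; // ratios quoted in the text
        for (double r : observed) {
            System.out.println(r + " -> " + classify(r));
        }
    }
}
```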

Figure 8. Plot of Ratio of Received to Sent Bytes for Windows Server (Week 2).

Figure 9. Plot of Ratio of Received to Sent Bytes for Windows Server (Week 3).

Figure 10. Plot of Ratio of Received to Sent Bytes for Windows Server (Week 4).

We coded a simple Java program to add two fields to each packet obtained from the Tcpdump data: the cumulative packet count and the cumulative size for the packet's session. We further processed the data to obtain the ratio of actual cumulative size to estimated cumulative size. The estimated cumulative size is the product of the current packet size and the number of packets received so far in the session. Under normal circumstances, the ratio should be close to one, indicating a consistent flow of bytes between the two sockets. However, this was not consistently observed in our data.
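The following minimal sketch computes the actual-to-estimated ratio for the packets of a single session in arrival order. For packet n, it divides the cumulative size of packets 1 to n by n times the size of packet n. The class name is hypothetical. The sketch assumes that packets have already been grouped into sessions (for example, by socket pair).

```java
import java.util.Arrays;

class ActualToEstimatedRatio {

    // sizes: packet sizes (bytes) of one session, in arrival order.
    // Returns cumulativeSize(n) / (size(n) * n) for each packet n = 1..length.
    static double[] ratios(int[] sizes) {
        double[] out = new double[sizes.length];
        long cumulativeSize = 0;
        for (int i = 0; i < sizes.length; i++) {
            if (sizes[i] <= 0) {
                throw new IllegalArgumentException("packet sizes must be positive");
            }
            cumulativeSize += sizes[i];
            long cumulativeCount = i + 1;
            long estimated = (long) sizes[i] * cumulativeCount;
            out[i] = (double) cumulativeSize / estimated;
        }
        return out;
    }

    public static void main(String[] args) {
        // Three full-size packets followed by a short final packet.
        int[] session = {1500, 1500, 1500, 60};
        System.out.println(Arrays.toString(ratios(session))); // prints [1.0, 1.0, 1.0, 19.0]
    }
}
```

The ratio stays at 1.0 while the packet sizes are uniform. The short final packet (4560 / (60 × 4) = 19.0) shrinks the estimate and produces the kind of end-of-session spike the text associates with a legitimate transfer.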

A ratio that remains nearly constant might indicate a standard automated scan, for instance a TCP SYN scan, or it could be a perfectly legitimate session with packets of similar sizes. However, a legitimate session should usually end with a spike in the ratio. This assumes that the most efficient way to transfer bytes across the network is to fill each packet up to the maximum transmission unit (MTU), for instance 1500 bytes for Ethernet. The last packet of such a transfer is typically smaller than 1500 bytes. It therefore shrinks the estimated cumulative size (current packet size times packet count) and produces a spike in the ratio.

Figure 11 shows that the host Linux operating system had high-intensity packet traffic at the beginning and at 360,000 seconds. In addition, there were four clusters of relatively low-intensity traffic. We determined that the four clusters corresponded to daily system network maintenance, whereas the first spike reflected the traffic created during the initial honeynet setup. Since the honeynet setup was performed on a Thursday, the second spike, 100 hours later, corresponded to the service check conducted on the following Monday.

Figure 11. Plot of Ratio of Actual to Estimated Size against Time for Linux Host Operating System (Week 2).

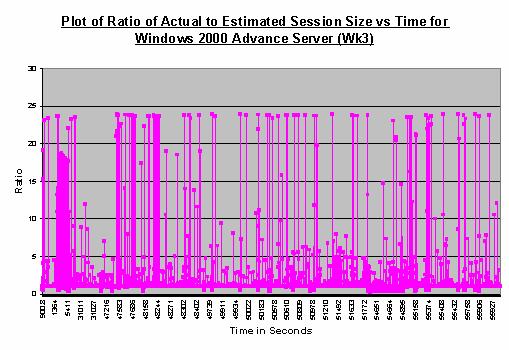

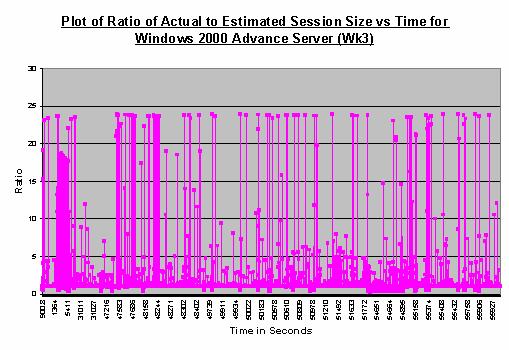

Figure 12, which shows the traffic on the Windows 2000 Advanced Server, is considerably more eventful. It illustrates the effect of advertising our IP address on blogs and hacker discussion forums. We observed high-intensity traffic around a ratio of 1. In addition, the relatively high density of data points in the vertical direction indicated that packet traffic occurred very quickly. Since this was a honeynet machine, traffic of such extent and intensity should not be observed unless there was automated reconnaissance. The second observation was the relatively consistent ceiling at a ratio of 24. Assume that the software made a best effort to maximize use of the 1500-byte MTU. This ceiling then suggests numerous scanning packets of approximately 60 bytes interleaved with other, potentially legitimate, data transfers (at 1500 bytes).
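A short bound, using the actual-to-estimated ratio defined earlier in this chapter, shows why a ceiling near 24 is consistent with this interpretation. Here $s_i$ is the size of the $i$-th packet in a session and $n$ is the number of packets so far. The 1500-byte and 60-byte sizes are the text's own approximations.

$$
r_n = \frac{S_n}{n\,s_n}, \qquad S_n = \sum_{i=1}^{n} s_i
$$

If every earlier packet is at most 1500 bytes and the current packet is a 60-byte scan packet, then

$$
r_n \le \frac{1500\,(n-1) + 60}{60\,n} = 25 - \frac{24}{n} < 25 .
$$

Under these assumptions, the ratio can never exceed 25, and it can reach 24 only after at least 24 packets have been exchanged. A ceiling just below 25 is therefore what one would expect from short scan packets following long runs of full-size packets.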

Figure 12. Plot of Ratio of Actual to Estimated Size against Time for Windows 2000 Advanced Server Operating System (Week 3).

We examined trends in the distribution of the sizes of packets received by the honeynet. Figures 13, 14, and 15 show the frequency of each packet size received by the honeynet in weeks 2, 3, and 4, respectively. The modes for weeks 2 and 3 indicate frequent data transfers in which packet sizes reach the limit of the Ethernet MTU, which is common on any Ethernet network. The mode for week 4 indicates a relatively high frequency of packet sizes between 50 and 100 bytes, which may indicate reconnaissance activity. We investigate these small packets further in the next section.
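Histograms such as those in Figures 13 to 15 can be produced by counting packet sizes into fixed-width bins and reading off the fullest bin. The sketch below assumes 50-byte bins, so the 50 to 100 byte range discussed above is the bin [50, 100). The bin width, the class and method names, and the sample sizes in main are illustrative choices, not necessarily those used for the figures.

```java
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

class PacketSizeHistogram {

    // Counts sizes into bins [k*width, (k+1)*width); keys are bin lower bounds.
    static SortedMap<Integer, Integer> histogram(int[] sizes, int binWidth) {
        if (binWidth <= 0) {
            throw new IllegalArgumentException("binWidth must be positive");
        }
        SortedMap<Integer, Integer> bins = new TreeMap<>();
        for (int size : sizes) {
            int lower = (size / binWidth) * binWidth;
            bins.merge(lower, 1, Integer::sum);
        }
        return bins;
    }

    // Lower bound of the most populated bin (smallest bound wins ties); -1 if empty.
    static int modeBin(SortedMap<Integer, Integer> bins) {
        int best = -1;
        int bestCount = 0;
        for (Map.Entry<Integer, Integer> e : bins.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        int[] sizes = {60, 62, 54, 1514, 1500, 74, 590}; // illustrative, not thesis data
        SortedMap<Integer, Integer> bins = histogram(sizes, 50);
        // prints {50=4, 550=1, 1500=2} mode bin starts at 50
        System.out.println(bins + " mode bin starts at " + modeBin(bins));
    }
}
```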

Figure 13. Histogram of Size of Packets Received by Fake Honeynet (Week 2).

Figure 14. Histogram of Size of Packets Received by Fake Honeynet (Week 3).

Figure 15. Histogram of Size of Packets Received by Fake Honeynet (Week 4).

We developed another Java program to extract information pertaining to the last received packet of each connection between two IP addresses (regardless of ports). A connection between two machines could be made up of several sessions. Since the honeynet was not designed to be a production system, termination of a connection might indicate an attacker's loss of interest in, or actual fear of, the honeynet. The results generated from the Tcpdump data using the Java class were plotted as histograms, shown in Figures 16, 17, and 18. These plots differ from, but bear similarities to, Figures 13, 14, and 15. The histograms revealed three size ranges with relatively high frequency: last-packet sizes of 50-100, 500-600, and 900-950 bytes.

Figure 16. Histogram of Size of Last Received Packet by Fake Honeynet (Week 2).

Figure 17. Histogram of Size of Last Received Packet by Fake Honeynet (Week 3).

Figure 18. Histogram of Size of Last Received Packet by Fake Honeynet (Week 4).

Following up on these observations about small packets, we tabulated in Table 5 the percentage of traffic whose last-packet size was between 50 and 100 bytes. Week 3 was the week in which we solicited traffic through active advertisement, and nearly 80% of that week's connections ended with packets between 50 and 100 bytes. We could not determine whether the cyber-attackers were aware of the existence of our fake honeynet. Nevertheless, this traffic might fit a typical departure signature during reconnaissance.

| Data Set | Percentage of Traffic |
|---|---|
| Week 2 | 44% |
| Week 3 | 79% |
| Week 4 | 10% |

Table 5. Percentage of Traffic with Packet Size Between 50 and 100 Bytes.
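The percentages in Table 5 are shares of connections whose last received packet falls within a size range. The following minimal sketch shows that computation. It assumes inclusive bounds of 50 and 100 bytes, since the text does not say whether the endpoints are included. The class name and the sample sizes in main are hypothetical.

```java
class LastPacketSizeShare {

    // Percentage of connections whose last-packet size lies in [minBytes, maxBytes].
    static double percentInRange(int[] lastPacketSizes, int minBytes, int maxBytes) {
        if (lastPacketSizes.length == 0) {
            return 0.0; // no connections observed
        }
        int hits = 0;
        for (int size : lastPacketSizes) {
            if (size >= minBytes && size <= maxBytes) {
                hits++;
            }
        }
        return 100.0 * hits / lastPacketSizes.length;
    }

    public static void main(String[] args) {
        // Illustrative last-packet sizes for five connections (not thesis data).
        int[] sizes = {60, 54, 1500, 92, 590};
        System.out.printf("%.0f%%%n", percentInRange(sizes, 50, 100)); // prints 60%
    }
}
```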

We further investigated the distribution of protocols and flags in the last received packets, compared with all received packets, for our self-contained virtual honeypots. These last packets formed a very small proportion of all packets received by our honeypots, as shown in Table 6. Tables 7, 8, and 9 show the distribution of protocols and flags for the last packets received by our self-contained virtual honeypot.
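The Total rows of these tables are column sums of 0/1 indicators. The sketch below reproduces that tally using one EnumSet per last packet. The class and enum names are hypothetical. The usage in main rebuilds the Week 2 rows of Table 7: nine UDP packets, one TCP packet with ACK and RST, four with ACK only, and two with RST only.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.List;
import java.util.Map;

class FlagTally {

    // Indicator columns of Tables 7 to 9.
    enum Column { TCP, UDP, ACK, PSH, RST, SYN, FIN, DONT_FRAG, MORE_FRAG }

    // Sums each column over all last packets; every column appears, even if zero.
    static Map<Column, Integer> totals(List<EnumSet<Column>> lastPackets) {
        Map<Column, Integer> sums = new EnumMap<>(Column.class);
        for (Column c : Column.values()) {
            sums.put(c, 0);
        }
        for (EnumSet<Column> marks : lastPackets) {
            for (Column c : marks) {
                sums.merge(c, 1, Integer::sum);
            }
        }
        return sums;
    }

    public static void main(String[] args) {
        List<EnumSet<Column>> week2 = new ArrayList<>();
        week2.addAll(Collections.nCopies(9, EnumSet.of(Column.UDP)));
        week2.add(EnumSet.of(Column.TCP, Column.ACK, Column.RST));
        week2.addAll(Collections.nCopies(4, EnumSet.of(Column.TCP, Column.ACK)));
        week2.addAll(Collections.nCopies(2, EnumSet.of(Column.TCP, Column.RST)));
        // Prints TCP=7, UDP=9, ACK=5, RST=3 and zero for every other column.
        System.out.println(totals(week2));
    }
}
```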

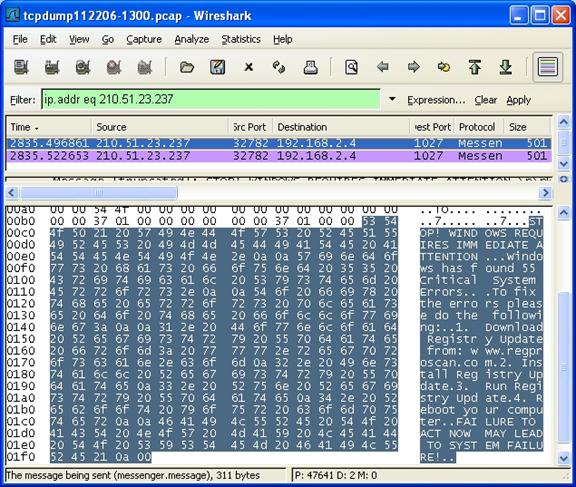

| Received in | Last Packets | All Packets | Proportion |
|---|---|---|---|
| Week 2 | 16 | 28114 | 0.06% |
| Week 3 | 24 | 45575 | 0.05% |
| Week 4 | 48 | 22556 | 0.21% |

Table 6. Proportion of Last Packets to All Packets Received by Honeypots.
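Each proportion in Table 6 is the number of last packets divided by the number of all packets received that week, expressed as a percentage:

$$
\frac{16}{28114}\times 100\% \approx 0.057\%, \qquad
\frac{24}{45575}\times 100\% \approx 0.053\%, \qquad
\frac{48}{22556}\times 100\% \approx 0.213\%
$$

These values round to the 0.06%, 0.05%, and 0.21% shown in the table.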

| Source IP | Protocol | TCP | UDP | ACK | PSH | RST | SYN | FIN | DON'T FRAG | MORE FRAG |
|---|---|---|---|---|---|---|---|---|---|---|
| 221.208.208.92 | Messenger | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 62.213.130.127 | Messenger | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 221.208.208.83 | Messenger | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 202.97.238.196 | Messenger | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 202.97.238.203 | Messenger | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 216.239.53.9 | TCP | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 |
| 216.239.37.104 | TCP | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 216.231.63.58 | TCP | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 66.102.7.99 | TCP | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 66.230.200.100 | TCP | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 216.228.2.120 | DNS | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 66.102.7.99 | TCP | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 216.228.2.120 | DNS | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 66.102.7.99 | TCP | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 216.228.2.120 | DNS | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 216.228.2.120 | DNS | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Total | | 7 | 9 | 5 | 0 | 3 | 0 | 0 | 0 | 0 |

Table 7. Distribution of Protocol and Flags for Last Packets (Week 2).

|

Source IP

|

Protocol

|

TCP

|

UDP

|

ACK

|

PSH

|

RST

|

SYN

|

FIN

|

DON'T FRAG

|

MORE FRAG

|

|

66.102.7.99

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

63.245.209.31

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

66.35.214.30

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.216.56

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.216.62

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

128.241.21.146

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

216.73.86.52

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.73.86.91

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.130.100

|

NTP

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.209.126

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

65.55.192.29

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

207.46.212.62

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

131.107.115.28

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

207.46.13.30

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

207.46.221.222

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

207.46.13.28

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

66.77.84.82

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

66.77.84.82

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

207.46.212.62

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

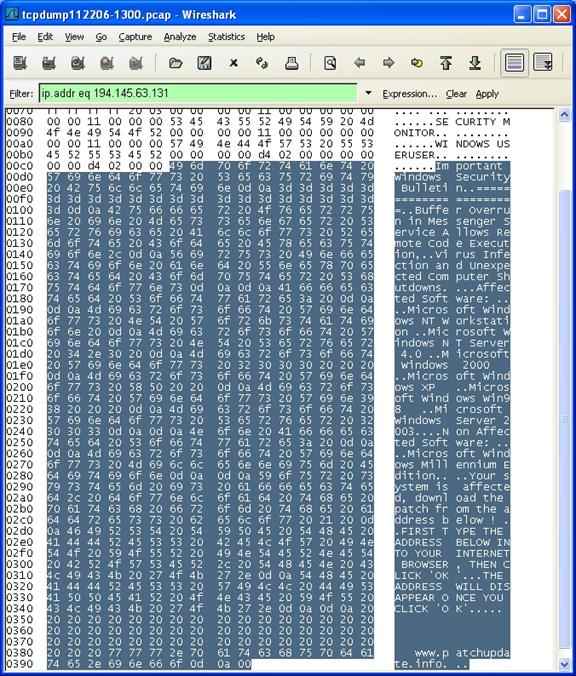

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

Total

|

18

|

6

|

18

|

0

|

11

|

0

|

0

|

0

|

0

|

Table 8.

Distribution of Protocol and Flags for Last Packets (Week 3).

|

Source IP

|

Protocol

|

TCP

|

UDP

|

ACK

|

PSH

|

RST

|

SYN

|

FIN

|

DON'T FRAG

|

MORE FRAG

|

|

66.102.7.99

|

TCP

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

216.228.2.120

|

DNS

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

210.51.23.237

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

204.16.208.80

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

195.27.116.145

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

194.174.170.115

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

204.16.208.52

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

210.51.21.136

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.130.100

|

NTP

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

202.97.238.196

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

202.97.238.195

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

220.164.140.249

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

218.10.137.140

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|

207.46.209.126

|

TCP

|

1

|

0

|

1

|

0

|

1

|

0

|

0

|

0

|

0

|

|

194.145.63.131

|

Messenger

|

0

|

1

|

0

|

0

|

0

|

0

|

0

|

0

|

0

|

|