Abstract—Client-side cyberattacks are becoming more common relative to server-side cyberattacks. This work tested the ability of the honeyclient software Thug to detect malicious or compromised servers that secretly download malicious files to clients, and tested its ability to classify these exploits. We tested Thug's analysis of delivered exploits in different configurations. Results on randomly generated Internet addresses found a low rate of maliciousness of 5.6%, and results on a blacklist of 83,667 suspicious Web sites found 163 unique malware files. Thug demonstrates the usefulness of client-side honeypots in analyzing harmful data presented by malicious Web sites.[1]

Keywords—honeypots, client-side, cyberattacks, signatures, malware

This paper appeared in the Proc. International Conference on Computational Science and Computational Intelligence, Research Track on Cyber Warfare, Cyber Defense, and Cyber Security, December 2022.

I. Introduction

Client-side honeypots ([3], [6]) are tools for detecting and analyzing malicious Internet sites. They connect to a site, record all the files it transmits, and analyze those files for malicious signatures. Client honeypots are usually Web crawlers that visit a list of sites to find and characterize malicious ones. Unlike traditional honeypots, which passively wait to be attacked, client-side honeypots are active: they initiate the connections and collect the data. This work studied Thug, an open-source client-side honeypot from the Honeynet Project (www.honeynet.org), an international nonprofit security research organization that investigates attacks and exploits and provides open-source tools to improve cybersecurity.

A. Client-side attacks through drive-by exploits

Client-side Web attacks ("drive-by exploits") occur when Web users visit a webpage that delivers an HTML document containing malicious code [5]. Early work [7] found references to malicious Web sites in many otherwise legitimate Web pages and email messages despite blacklisting services such as SiteAdvisor. The malicious code can exploit vulnerabilities in the Web browser, browser plugins, or operating system to compromise the user's Web browser. The attackers can then download and execute additional malware that further compromises the user's system. All of this can happen without the knowledge of the user, who has simply visited a Web page.

A drive-by exploit is a four-stage process [15]. First, attackers load malicious code into the HTML documents of a website. Second, they lure visitors to it by sending spam email with links to their servers, by using search engine optimization to push their pages up in search results, by publishing their links on social media, or by modifying legitimate Web servers. Third, users visit the malicious or compromised servers and unknowingly retrieve the attackers' malware. Attackers usually target a specific browser or operating system vulnerability, which they detect by sending crafted packets. Finally, the vulnerable software on the client (victim) is exploited, often to gain control of the victim's machine.

Client-side attacks can be detected by examining the unrequested and unnecessary files that a Web browser downloads [1]. These files can be scanned for malware, and suspicious behaviors, such as downloading non-picture files from unrelated sites, can be observed. Client-side malicious activity can be detected more effectively if the client uses deception methods to act convincingly like a normal browser [10].
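The unrelated-site heuristic can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method of [1] or of Thug: the `Download` record and `suspicious_downloads` function are hypothetical, and "unrelated" is approximated by comparing the last two labels of each host name, which misjudges suffixes such as `co.uk` (a real tool would consult the Public Suffix List).

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlsplit


@dataclass
class Download:
    """One file fetched while rendering a page (hypothetical record type)."""
    url: str
    content_type: str


def site_of(url: str) -> str:
    """Naive 'site' of a URL: the last two labels of its host name.
    Assumption: this is wrong for multi-label suffixes such as co.uk."""
    host = (urlsplit(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def suspicious_downloads(page_url: str, downloads: List[Download]) -> List[Download]:
    """Flag non-picture files fetched from sites unrelated to the visited page."""
    page_site = site_of(page_url)
    flagged = []
    for d in downloads:
        unrelated = site_of(d.url) != page_site
        non_picture = not d.content_type.lower().startswith("image/")
        if unrelated and non_picture:
            flagged.append(d)
    return flagged


# Example: only the executable from an unrelated site is flagged.
hits = suspicious_downloads(
    "http://news.example.com/article",
    [
        Download("http://cdn.example.com/app.js", "application/javascript"),
        Download("http://ads.example.net/banner.gif", "image/gif"),
        Download("http://ads.example.net/update.exe", "application/octet-stream"),
    ],
)
print([d.url for d in hits])  # ['http://ads.example.net/update.exe']
```

A same-site script and an off-site image both pass; only the off-site, non-image file is reported. Real drive-by pages often load scripts from third-party domains, so a rule this simple would need allow-lists to keep false positives manageable.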

B. Previous Work

The honeypot client Monkey-Spider analyzed webpages found through search engine seeding [4]. It was a low-interaction honeypot client that separated Web crawling from webpage analysis, using ClamAV to detect malicious code. To obtain starting sites for crawling, the researchers used the Web Services APIs of Google, Yahoo, and MSN Search to collect the first 1,000 results for five search keywords, and added URLs from commercial blacklists (lists of known malicious sites). These sites were the initial visits ("seeds") of their Web crawler. Starting from 20,457 seeds, the crawl downloaded 20,005,756 URLs. They found that 1.0% of the sites they visited showed malicious activity. [5] and [12] were similar projects.
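At its core, seed-based crawling of the kind Monkey-Spider used is a breadth-first traversal from the seed URLs with a visited set. The sketch below is illustrative only and is not Monkey-Spider's code: `crawl` and its `get_links` callback are hypothetical. The callback stands in for downloading a page and extracting its links, so the traversal logic can be shown without network access.

```python
from collections import deque
from typing import Callable, Iterable, List


def crawl(seeds: Iterable[str], get_links: Callable[[str], List[str]],
          max_pages: int = 1000) -> List[str]:
    """Breadth-first crawl from seed URLs; returns pages in visit order.

    get_links(url) stands in for "download the page and extract its links".
    """
    frontier = deque()
    seen = set()
    for url in seeds:
        if url not in seen:
            seen.add(url)
            frontier.append(url)
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)  # a separate analysis stage (e.g., ClamAV) could scan this page later
        for link in get_links(url):
            if link not in seen:  # enqueue each URL at most once
                seen.add(link)
                frontier.append(link)
    return visited


graph = {"a": ["b", "c"], "b": ["d"], "c": ["a", "d"], "d": []}
print(crawl(["a"], lambda url: graph.get(url, [])))  # ['a', 'b', 'c', 'd']
```

Keeping the visited set separate from analysis mirrors the crawl-then-analyze split described above: the crawler only decides what to fetch, and scanning happens afterwards on the stored pages.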

Other previous work compared low-interaction and high-interaction honeypot clients [9]. It tested seven kinds of malware on 20,000 malicious sites and obtained malware-detection rates of 1.8% with the low-interaction honeypot HoneyC, 2.3% with the high-interaction Capture-HPC, and 4.6% when sending files to the SiteAdvisor malware-analysis site. SiteAdvisor appears to use information beyond the results of website visits, such as spam and phishing site reports, to determine maliciousness. A 2007 report from SiteAdvisor identified 4.1 percent of websites as malicious [14]. The lower rates of the client-side honeypots could be due to Web crawlers focusing on extracting URLs rather than downloading their content, so that fewer malicious files were downloaded. Another reason could be duplicate visits by crawlers to popular websites such as YouTube and Amazon that were unlikely to host malware.

Another project studied how well client-side honeypots identify malicious Web servers that use fingerprinting and bot-detection techniques to complicate analysis [8]. It used two honeypot clients and two analysis tools. One client was the low-interaction client Thug, and the other was the high-interaction honeypot client Cuckoo Sandbox. The analysis tools were Lookyloo, which captures webpages and redirection data, and VirusTotal, a common cybersecurity service for identifying malicious code. The project created a custom Web server with the Django Python Web framework that used 21 cloaking methods to prevent analysis by clients. All four tools had difficulty retrieving malicious content from this cloaked Web server. In addition, most tools did not support newer technologies, API links, and browser versions. These results suggest that modern websites can effectively cloak their malicious downloads from honeypot clients and other analysis tools.
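The simplest cloaking methods of the kind studied in [8] inspect request headers and serve the malicious page only to clients that look like the targeted browser. The toy function below illustrates that idea; `choose_payload`, its marker list, and the Internet Explorer 6 target are hypothetical choices for illustration. It also shows why a honeyclient's emulated browser identity matters: an analysis client that announces itself as a bot, or as the wrong browser, sees only the benign page.

```python
# Substrings that this sketch treats as marks of automated clients (assumption).
BOT_MARKERS = ("bot", "crawler", "spider", "headless", "python-requests", "curl")


def choose_payload(user_agent: str, target_marker: str = "MSIE 6.0") -> str:
    """Toy cloaking decision: return 'exploit' only for visitors whose
    User-Agent looks like the targeted browser, otherwise 'benign'."""
    ua = user_agent.lower()
    if any(marker in ua for marker in BOT_MARKERS):
        return "benign"  # self-identified crawlers and headless browsers
    if target_marker.lower() not in ua:
        return "benign"  # not the browser the exploit targets
    return "exploit"


print(choose_payload("Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)"))  # exploit
print(choose_payload("Mozilla/5.0 (compatible; Googlebot/2.1)"))             # benign
```

Real cloaking also uses IP reputation, JavaScript-based fingerprinting, and timing checks, which a User-Agent string alone cannot defeat.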

II. Experimental Setup

A. Thug

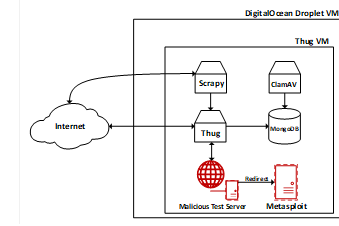

Thug is a Python-based low-interaction honeypot client that performs both static and dynamic analysis to inspect potential malware [2]. Thug uses the Google V8 JavaScript engine, wrapped through STPyV8, to analyze malicious JavaScript code, and uses Libemu, wrapped through Pylibemu, to detect and emulate shellcode. Thug currently emulates 42 different browser types and provides 90 vulnerability plugins for analysis. After analyzing the specified URLs, Thug stores its results in a NoSQL MongoDB database running on the host machine (Fig. 1). We chose this client-side honeypot for its capabilities, open-source license, and active maintenance and support. Zulkurnain et al. [13] analyze the methods and speed of Thug but neither measure its accuracy nor test it in real-world environments.

Fig. 1. Thug operation

B. Supporting Tools Used

Thug stores its data in MongoDB, an open-source document-oriented NoSQL database. MongoDB records data as field-name and field-value pairs, similar to JSON objects, and a document can include other documents, arrays, or arrays of documents. From its interactions with websites, Thug records the URLs analyzed, analysis type, connections made, behaviors observed, shellcode identified, cookies downloaded, connection graphs, locations, and certificates. It stores collected file samples as GridFS chunks within MongoDB.
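GridFS stores a file as one metadata document plus a series of fixed-size chunk documents. The sketch below mimics that layout in plain Python to show how a downloaded sample is split and rebuilt. The field names (`files_id`, `n`, `data`, `length`, `chunkSize`) and the 255 KiB default chunk size follow the GridFS specification. The helper functions and the SHA-256 metadata entry are illustrative assumptions and are not Thug's code. In practice, pymongo's `gridfs` module does this work.

```python
import hashlib
from datetime import datetime, timezone
from typing import Any, Dict, List, Tuple

CHUNK_SIZE = 255 * 1024  # GridFS default chunk size: 255 KiB = 261,120 bytes


def to_gridfs_docs(file_id: Any, filename: str, data: bytes,
                   chunk_size: int = CHUNK_SIZE) -> Tuple[Dict[str, Any], List[Dict[str, Any]]]:
    """Split a file into a GridFS-style 'files' document and ordered 'chunks' documents."""
    chunks = [
        {"files_id": file_id, "n": i, "data": data[offset:offset + chunk_size]}
        for i, offset in enumerate(range(0, len(data), chunk_size))
    ]
    files_doc = {
        "_id": file_id,
        "filename": filename,
        "length": len(data),
        "chunkSize": chunk_size,
        "uploadDate": datetime.now(timezone.utc),
        # Illustrative extra metadata, not required by GridFS or Thug.
        "metadata": {"sha256": hashlib.sha256(data).hexdigest()},
    }
    return files_doc, chunks


def reassemble(files_doc: Dict[str, Any], chunks: List[Dict[str, Any]]) -> bytes:
    """Rebuild the file by concatenating its chunks in order of 'n'."""
    own = sorted((c for c in chunks if c["files_id"] == files_doc["_id"]), key=lambda c: c["n"])
    data = b"".join(c["data"] for c in own)
    if len(data) != files_doc["length"]:
        raise ValueError("missing or truncated chunks")
    return data


sample = bytes(range(256)) * 3000  # 768,000-byte stand-in for a downloaded sample
files_doc, chunks = to_gridfs_docs("sample-1", "payload.bin", sample)
print(len(chunks))  # 3
```

Chunking keeps each document well under MongoDB's per-document size limit, which is why large samples are stored this way rather than as a single binary field.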

Scrapy is an open-source Web-crawling [11] and Web-scraping framework supporting data mining, monitoring, and automated testing. We chose Scrapy for its speed and its suitability for our needs. We used Scrapy to crawl random IP addresses and record responses from Web servers for further analysis with Thug. We generated the random IPv4 addresses with the Python package Faker. The Web-crawling process is shown in Fig. 2. Commercial Web crawlers such as Googlebot and Bingbot scrape page content in addition to crawling. However, we needed only the secondary data sent by Web pages, not the pages themselves, so Scrapy sufficed.

Fig. 2. Web-crawling process
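The random-address step can be approximated with the standard library alone. We used the Faker package; this sketch instead draws uniformly from the 32-bit IPv4 space with `random` and discards non-public addresses using `ipaddress`. The function names are hypothetical, and the check for whether a Web server actually answers at each address (done with Scrapy in our experiments) is left out.

```python
import ipaddress
import random
from typing import List, Optional


def random_public_ipv4(rng: random.Random) -> ipaddress.IPv4Address:
    """Draw random 32-bit addresses until one is publicly routable."""
    while True:
        addr = ipaddress.IPv4Address(rng.getrandbits(32))
        # is_global excludes private, loopback, link-local, and reserved ranges;
        # multicast is rejected explicitly since it cannot host a Web server.
        if addr.is_global and not addr.is_multicast:
            return addr


def seed_urls(count: int, seed: Optional[int] = None) -> List[str]:
    """Build http:// start URLs for a crawler from random public addresses."""
    rng = random.Random(seed)
    return [f"http://{random_public_ipv4(rng)}/" for _ in range(count)]


print(seed_urls(3, seed=42))
```

Passing a fixed `seed` makes a sample reproducible, which helps when a crawl has to be rerun or compared against a blacklist later.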

We also used ClamAV (www.clamav.net), an open-source antivirus toolkit now maintained by Cisco Systems. ClamAV provides a fast, lightweight command-line interface. We used ClamAV to scan the sample files downloaded during Thug's analysis to determine whether they were malicious.
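A sketch of the scanning step runs `clamscan` over the directory of extracted samples and parses its per-file detection lines, which have the form `<path>: <signature> FOUND`. The wrapper functions are hypothetical. `--recursive`, `--infected`, and `--no-summary` are standard `clamscan` options, and the exit-code handling follows clamscan's convention (0 = clean, 1 = detection, 2 = error).

```python
import subprocess
from typing import Dict


def parse_clamscan(output: str) -> Dict[str, str]:
    """Map each flagged file path to its ClamAV signature name.

    clamscan reports detections as '<path>: <signature> FOUND'; rpartition
    keeps paths that themselves contain ': ' intact.
    """
    results = {}
    for line in output.splitlines():
        line = line.strip()
        if not line.endswith(" FOUND"):
            continue
        path, sep, rest = line.rpartition(": ")
        if sep:
            results[path] = rest[: -len(" FOUND")]
    return results


def scan_directory(directory: str) -> Dict[str, str]:
    """Recursively scan extracted samples; requires ClamAV's clamscan on PATH."""
    proc = subprocess.run(
        ["clamscan", "--recursive", "--infected", "--no-summary", directory],
        capture_output=True, text=True,
    )
    # clamscan exit codes: 0 = no virus found, 1 = virus(es) found, 2 = error.
    if proc.returncode == 2:
        raise RuntimeError(proc.stderr.strip() or "clamscan reported an error")
    return parse_clamscan(proc.stdout)


report = ("/samples/a.bin: Win.Trojan.Agent-1 FOUND\n"
          "/samples/b.html: PUA.Html.Trojan.Agent-37074 FOUND\n")
print(parse_clamscan(report))
# {'/samples/a.bin': 'Win.Trojan.Agent-1', '/samples/b.html': 'PUA.Html.Trojan.Agent-37074'}
```

The report text here is a made-up sample used only to exercise the parser.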

C. Functional Testing of Thug

We tested the functions of Thug using publicly available exploits and exploit tools. The Metasploit Framework (www.metasploit.com) is an open-source penetration-testing tool for finding, exploiting, and validating vulnerabilities. Metasploit provides modules for exploiting different operating systems, applications, and platforms. For this research, we used Metasploit's browser-exploit modules to test Thug and assess its ability to identify the browser and browser-plugin exploits used for drive-by downloads. The Exploit Database collects public exploits and the corresponding vulnerable software for penetration testers and vulnerability researchers, and allows searching for exploits by title, CVE number, type, platform, and other parameters. When an exploit module was unavailable in Metasploit, we searched the Exploit Database and tried to add the exploit's source code as an HTML Web page on our malicious test server.

D. Source Sites

Our first experiments used random IP addresses. Further experiments used a sample of source sites that our school obtained from commercial threat-intelligence vendors. These sites had been "blacklisted" by various sources for sending data with known malicious signatures or otherwise showing suspicious behavior. However, they had not necessarily been observed to send drive-by downloads, and some may have been blacklisted merely for happening to host malicious users. Nonetheless, they were a richer source of malicious activity than the random IP addresses we tested first.

E. Test Environment

We ran the Thug client on the DigitalOcean cloud platform, outside our campus network. DigitalOcean offers cloud computing services and virtualized resources and calls its Linux virtual machines "Droplets". Our Droplet ran the Oracle VirtualBox hypervisor to host a Linux virtual machine on which Thug and the supporting tools ran. The Thug virtual machine (VM) contained Thug, Scrapy, MongoDB, ClamAV, the malicious test server, and Metasploit, as shown in Fig. 3. We connected to our servers through SSH and used SSH tunnels and virtual network computing (VNC) for remote configuration and control. DigitalOcean does not implement firewall or intrusion-detection rules that could stop or impede HTTP responses to Thug, and we kept the default firewall settings on our virtual machines. We configured both machines with Ubuntu Server 20.04 LTS. The Droplet had 16 GB of memory and 200 GB of secondary storage.

Fig. 3. Test environment setup

F. Experimentation Plan

We ran three experiments with Thug's default configuration, which emulates Windows XP running Internet Explorer 6.0 with the Adobe Acrobat Reader 9.1.0, JavaPlugin 1.6.0.32, and Shockwave 10.0.64.0 plugins enabled. Experiment 1 was a functional test of Thug's ability to identify and classify drive-by exploits. It analyzed a test Web server under our control, which either invoked Metasploit modules through HTTP redirects or served HTML Web pages containing malicious code from the Exploit Database. The EICAR anti-malware test file (www.eicar.org) served as the malicious payload for all the exploits. We selected exploits corresponding to the vulnerability-identification modules in Thug's source code, plus several exploits without corresponding modules. We assessed Thug's ability to identify which exploit was used in the drive-by download and to retrieve the anti-malware test file.
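A stripped-down stand-in for the malicious test server helps show what Thug was asked to detect. This sketch is hypothetical and uses only Python's `http.server`. The landing page hides a 1x1 "pixel" IFrame that silently fetches a payload, and `/redirect` imitates handing the client off with an HTTP redirect. The payload is placeholder bytes rather than the EICAR file, and no real exploit code is involved.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Landing page that hides a 1x1 "pixel" IFrame pointing at the payload.
LANDING_PAGE = b"""<html><body>
<p>Nothing to see here.</p>
<iframe src="/payload.bin" width="1" height="1" style="border:0"></iframe>
</body></html>"""

# Placeholder bytes; the experiment served the EICAR test file instead.
PAYLOAD = b"PLACEHOLDER-TEST-PAYLOAD"


class DecoySiteHandler(BaseHTTPRequestHandler):
    """Serves the landing page, the payload, and a redirect hop."""

    def do_GET(self):
        if self.path == "/redirect":
            # Imitates handing the client off to another URL (e.g., an exploit module).
            self.send_response(302)
            self.send_header("Location", "/")
            self.end_headers()
            return
        routes = {
            "/": (LANDING_PAGE, "text/html"),
            "/payload.bin": (PAYLOAD, "application/octet-stream"),
        }
        if self.path not in routes:
            self.send_error(404)
            return
        body, content_type = routes[self.path]
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_test_server(host: str = "127.0.0.1", port: int = 0):
    """Start the server on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer((host, port), DecoySiteHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://{host}:{server.server_address[1]}"


def fetch(url: str) -> bytes:
    """Crude stand-in for a client visit; follows redirects, ignores proxy settings."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=5) as resp:
        return resp.read()
```

For example, `server, base = start_test_server()` returns a base URL that a honeyclient can be pointed at, and `server.shutdown()` stops the server. Unlike `fetch`, a honeyclient such as Thug would also render the landing page and follow the hidden IFrame to the payload.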

Our criteria for assessing Thug's performance were based on the amount of information Thug provided for each exploit.

A correct result meant that Thug correctly identified the exploit by name or provided an associated CVE number.

A partially correct result meant that Thug did not identify the vulnerability by name but found evidence of malicious activity, either by identifying suspicious behavior or by retrieving the exploit's malicious shellcode. We then used these clues to identify the exploit from its signatures.

A non-functional result meant we could not configure the exploit to work correctly, because of misconfiguration of the Metasploit software or of the exploit's HTML code, or because of an incompatible environment.

An incorrect result meant that Thug could not identify the exploit or provide evidence of malicious activity.
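The four assessment categories amount to a simple decision procedure. The sketch below encodes it, checking non-functional runs first (our reading of the criteria's precedence). The `ExploitRun` fields are hypothetical summaries of what was recorded for each test, not fields that Thug itself emits.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExploitRun:
    """Observations for one exploit test (hypothetical summary fields)."""
    exploit_worked: bool                # did the exploit deliver its payload at all?
    exploit_name: Optional[str] = None  # exploit name reported by Thug, if any
    cve: Optional[str] = None           # CVE number reported by Thug, if any
    behaviors: List[str] = field(default_factory=list)  # suspicious behaviors logged
    shellcode_extracted: bool = False   # did Thug log the exploit's shellcode?


def grade(run: ExploitRun) -> str:
    """Apply the four assessment categories in order of precedence."""
    if not run.exploit_worked:
        return "non-functional"
    if run.exploit_name or run.cve:
        return "correct"
    if run.behaviors or run.shellcode_extracted:
        return "partially correct"
    return "incorrect"


print(grade(ExploitRun(exploit_worked=True, shellcode_extracted=True)))  # partially correct
```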

Experiments 2 and 3 each followed a four-step process using Thug to analyze IP addresses. The first step found IP addresses or URLs with running Web servers: Experiment 2 used the Scrapy Web crawler to scan random IP addresses and check whether a Web server was running at each one, while Experiment 3 used addresses obtained from a commercial blacklist. Second, both experiments fed the resulting URLs and IP addresses to Thug for analysis. Third, we reviewed the data stored in MongoDB for malicious activity and Thug's analysis. The final step extracted the sample files collected during the analysis and scanned them for malware with ClamAV. We then categorized the malware into groups based on the descriptions provided by ClamAV.
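For the categorization step, ClamAV signature names follow a `Platform.Category.Name` convention, with a `PUA.` prefix for potentially unwanted applications (for example, `PUA.Html.Trojan.Agent-37074`). The sketch below splits names along that convention and tallies the results. The function names are hypothetical, and mapping these fields onto broad report categories still requires judgment that the code does not capture. The platform field also gives a rough view of which operating system a sample targets.

```python
from collections import Counter
from typing import Dict, NamedTuple


class Verdict(NamedTuple):
    is_pua: bool
    platform: str  # e.g. Win, Unix, Html, Doc
    category: str  # e.g. Trojan, Adware, Ransomware


def classify_signature(name: str) -> Verdict:
    """Split a ClamAV signature name into (is_pua, platform, category).
    Names that do not follow the convention map to Unknown/Other."""
    parts = name.split(".")
    is_pua = parts[0] == "PUA"
    if is_pua:
        parts = parts[1:]
    platform = parts[0] if len(parts) >= 2 else "Unknown"
    category = parts[1] if len(parts) >= 3 else "Other"
    return Verdict(is_pua, platform, category)


def tally(detections: Dict[str, str]) -> Counter:
    """Count verdicts over a {file path: signature name} mapping."""
    return Counter(classify_signature(sig) for sig in detections.values())


print(classify_signature("PUA.Html.Trojan.Agent-37074"))
# Verdict(is_pua=True, platform='Html', category='Trojan')
```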

III. Results

Experiment 1 showed that Thug could identify our malicious Web server and its artifacts. Overall, Thug recognized 85 of 99 known exploits as malicious (Table 1 and Table 2). It correctly identified 45 by name or by providing a CVE number. It identified another 40 as malicious from general behavioral observations, such as ActiveX control abuse or malicious pixel IFrames, or by extracting and logging the exploit's malicious shellcode. In 13 other cases, the exploit failed to deposit malicious files on our victim machine because of either misconfiguration of the exploit or an incompatible environment. In only one case did Thug fail to identify the malicious nature of the site at all. Thug appears to have kept up to date with the obfuscation and other deception techniques associated with drive-by downloads.

Experiment 2 directed Thug to test random IP addresses. As expected, most results were uninteresting, since malicious sites are statistically rare, although minor anomalies were occasionally flagged. Overall, Experiment 2 crawled and analyzed 37,415 Web sites. Thug identified 2,054 Web servers with suspicious behavior, all of which involved abuse of the ActiveX control GET and POST methods. During the analysis, 146,768 file samples were downloaded, of which ClamAV identified 18 as infected with malware and another 230 as potentially unwanted applications (PUAs).

Table 1. Cyberattacks tested on our malicious Web server with Thug. The "AA?" column marks exploits that required additional analysis to identify: "SC" means the exploit was identified by analyzing signatures in the extracted shellcode, and "MB" means it was identified from observed malicious behavior.

| Exploit | AA? | Exploit | AA? |
|---|---|---|---|
| AIM goaway | MB | AOL Radio AmpX | |
| Adobe 'Collab.getIcon()' | MB | Adobe 'Doc.media.newPlayer' | MB |
| Adobe Flash Player 'newfunction' | MB | Adobe 'util.printf()' | MB |
| Creative Software AutoUpdate Engine | SC | MS DirectShow 'msvidctl.dll' | SC |
| IBM Lotus Domino DWA Upload Module | | IBM Lotus 'inotes6.dll' | SC |
| EnjoySAP SAP GUI | | Facebook Photo Uploader | |
| Gateway WebLaunch | SC | GOM Player | |
| ICQ Toolbar | | MS14-064 | MB |
| MSXML Memory Corruption | SC | Macrovision Installshield | |
| Macrovision FlexNet | | MS IE XML | |
| NCTAudioFile2 | | RealPlayer 'ierpplug.dll' | SC |
| Apple QuickTime | SC | Shockwave rcsL | SC |
| MS Silverlight | MB | Microsoft Access | |
| SonicWALL NetExtender | | MS OWC Spreadsheet | SC |
| BaoFeng Storm | | Symantec AppStream | |
| Symantec BackupExec | | MS Visual Studio | SC |
| MS Media Encoder | | MS Internet Explorer Unsafe Scripting | |
| MS IE WebViewFolderIcon | | WinZip FileView | |
| Winamp Playlist UNC Path | SC | HP LoadRunner | |
| Yahoo! Messenger | | Zenturi ProgramChecker | |
| Adobe CoolType | MB | Adobe Flash Player | MB |

Table 2. Further cyberattacks tested (columns as in Table 1).

| Exploit | AA? | Exploit | AA? |
|---|---|---|---|
| Adobe Flash Player copyPixels | MB | IBM Lotus Notes Client | SC |
| Java 7 Applet | MB | ADODB.Recordset | |
| AnswerWorks | | Baidu Search Bar | SC |
| BitDefender Online Scanner | | ChinaGames 'CGAgent.dll' | |
| GlobalLink 2.7.0.8 | SC | DivX Player 6.6.0 | SC |
| D-Link MPEG4 SHM Audio Control | | Xunlei Web Thunder | SC |
| Lycos FileUploader | SC | Ourgame 'GLIEDown2.dll' | |
| HP Compaq Notebooks | | Clever Internet | SC |
| Java Deployment Toolkit | | jetAudio 7.x | |
| Move Networks | | MS Rich Textbox | SC |
| MySpace Uploader | | Sejoong Namo | |
| NeoTracePro 3.25 | SC | Nessus Delete File | |
| Nessus Command Execution | | Office Viewer OCX | SC |
| Xunlei XPPlayer | SC | Cisco Linksys PTZ Cam | SC |
| Move Networks Quantum Streaming Player | | Qvod Player 2.1.5 | SC |
| Rediff Bol Downloader | SC | Rising Scanner | SC |
| Sina DLoader Class | | StreamAudio Chaincast | |
| Toshiba Surveillance | | UUSee 'Update' | SC |
| VLC Remote Bad Pointer | | Firefox 'WMP' | SC |
| MS IE Remote Wscript | | Yahoo! JukeBox | |
| Yahoo! Messenger CYFT | SC | Yahoo! Messenger 'YVerinfo.dll' | |

|

The PUAs collected consisted of trojans, adware, and trackers. One PUA that ClamAV identified was "PUA.Html.Trojan.Agent-37074". A VirusTotal scan showed that 24 of 61 antivirus tools flagged this file as malicious. Microsoft and Trend Micro security blogs identify it as Exploit:HTML/Phominer.A and Trojan.HTML.IFRAME.FASGU, respectively [16][17]. Their reports indicate that this PUA is dropped onto victim computers by other malware or is unknowingly downloaded when users visit malicious Web sites. It can steal information from the victim's computer and embed malicious IFrames that redirect users to other malicious sites.

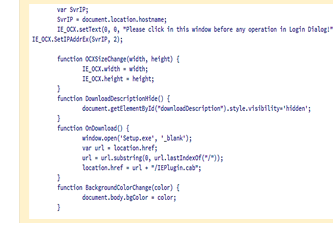

Fig. 4 shows a typical snippet of malicious JavaScript code that Thug collected and that was used to download one malware sample. In this snippet, function calls conceal the malware download and then run its startup executable once the download completes.

Fig. 4. Malicious JavaScript code snippet
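Thug detects behavior like that in Fig. 4 dynamically, by executing the script in V8. A much cruder static complement searches the script text for APIs commonly used by Windows script droppers: `MSXML2.XMLHTTP` to fetch, `ADODB.Stream` to save, and `WScript.Shell` to execute. The indicator list and `scan_script` are illustrative assumptions, not Thug's detection rules, and simple obfuscation defeats them.

```python
import re
from typing import List

# Illustrative indicators of Windows script droppers (assumption, not Thug's rules).
INDICATORS = {
    "creates ActiveX object": re.compile(r"new\s+ActiveXObject\s*\(", re.I),
    "fetches over HTTP via MSXML": re.compile(r"(?:MSXML2|Microsoft)\.XMLHTTP", re.I),
    "writes a file to disk": re.compile(r"ADODB\.Stream|SaveToFile", re.I),
    "runs a program": re.compile(r"WScript\.Shell|ShellExecute|\.Run\s*\(", re.I),
    "decodes hidden code": re.compile(r"\beval\s*\(|\bunescape\s*\(|fromCharCode", re.I),
}


def scan_script(source: str) -> List[str]:
    """Return the names of all indicators whose pattern occurs in the script."""
    return [name for name, pattern in INDICATORS.items() if pattern.search(source)]


dropper = (
    'var x = new ActiveXObject("MSXML2.XMLHTTP"); x.open("GET", url, false); x.send();'
    'var s = new ActiveXObject("ADODB.Stream"); s.Open(); s.Write(x.responseBody);'
    's.SaveToFile(path, 2); new ActiveXObject("WScript.Shell").Run(path);'
)
print(scan_script(dropper))
# ['creates ActiveX object', 'fetches over HTTP via MSXML', 'writes a file to disk', 'runs a program']
```

Static matching is fast and needs no JavaScript engine, but it only sees what is literally in the text. Dynamic analysis of the kind Thug performs is what reveals calls assembled at run time.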

Experiment 3 used Thug to analyze and collect data from a commercial blacklist of IP addresses and domain names. Its results were more interesting, showing fewer malicious behaviors in website interactions (e.g., content delivery by ActiveX) but more kinds of malware. We analyzed 83,667 Web servers, of which Thug identified 953 with malicious activity. As in Experiment 2, all malicious behaviors observed on the blacklisted Web servers involved ActiveX control abuse with GET and POST methods. During the analysis, 602,731 file samples were collected, of which ClamAV identified 2,043 as malware and an additional 869 as PUAs. The lower rate of observed malicious behavior may indicate that the blacklist contains only Web servers that hold malicious payloads, not the exploits used to initiate drive-by downloads. Table 3 shows the results of Experiments 2 and 3. None of the random Web servers scanned in Experiment 2 was on the blacklist used in Experiment 3.

Table 3. Results from Experiments 2 and 3. Percentages are relative to the total number of sites in each sample.

| | Sites | Malicious Behavior | Malware | PUA |
|---|---|---|---|---|
| Blacklist | 83,667 | 953 (1.14%) | 2,043 (2.44%) | 869 (1.03%) |
| Random Web servers | 37,415 | 2,045 (5.47%) | 18 (0.04%) | 230 (0.61%) |

IV. Discussion

Although Thug performed very well in identifying and categorizing different drive-by exploits on our malicious server in Experiment 1, we did not observe the same range of exploits on the Web servers in our random IP address sample or in the blacklist. ActiveX controls are a common way for websites to display information or provide interactivity, but they can also be used maliciously to collect information or install malware. Our results may indicate that drive-by downloads using methods other than ActiveX controls are rare.

While there was little variation in the type of drive-by exploits used to deliver malware to our test system, the collected malware varied considerably. Experiment 2 provided 13 unique malware files, while Experiment 3 provided 163. We classified the malware into 12 broad categories based on the descriptions provided by ClamAV, as shown in Fig. 5. The "Other" categories contained a variety of malware targeting specific applications and formats, such as Microsoft Office document macros, HTML, and Java applications.

Fig. 5. Malware frequency by malware type and sample pool
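Counting unique malware files requires deciding when two downloads are the same file. The method used to determine uniqueness is not stated above. A common approach, assumed in this sketch, groups files by the SHA-256 hash of their content. `unique_samples` is a hypothetical helper.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def unique_samples(detections: Iterable[Tuple[bytes, str]]) -> Dict[str, Set[str]]:
    """Group flagged files by the SHA-256 of their content.

    detections: (file_bytes, clamav_signature) pairs for files ClamAV flagged.
    Returns {sha256 hex digest: set of signature names seen for that content}.
    """
    groups: Dict[str, Set[str]] = defaultdict(set)
    for data, signature in detections:
        groups[hashlib.sha256(data).hexdigest()].add(signature)
    return dict(groups)


detections = [
    (b"sample-A", "Win.Trojan.X-1"),
    (b"sample-A", "Win.Trojan.X-1"),  # same file downloaded from a second site
    (b"sample-B", "Unix.Malware.Y-2"),
]
print(len(unique_samples(detections)))  # 2
```

Exact hashing treats two files that differ by a single byte as distinct, so repacked variants of one family still count separately. Grouping by signature name instead would merge them.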

In Experiment 2, 5.4% of random Web servers showed signs of malicious activity, which is comparable to the results of previous experiments [4][9][14]. However, not all of the 2,054 malicious interactions resulted in the download and execution of malware. This suggests that many malicious sites find it more profitable to collect information on unsuspecting users than to download malware to their machines. This interpretation is supported by the fact that the random Web servers delivered more PUAs than malware files, since PUAs are often spyware, adware, or trackers.

Experiment 3 showed an interesting contrast between the amount of malicious behavior observed and the amount of malware retrieved. This large difference is likely due to the types of servers included in the blacklist, which typically host the malware payload themselves instead of using malicious redirects or pulling content from other malicious servers. Legitimate Web servers, by contrast, may be compromised through malicious advertisements (malvertising) or by attackers embedding malicious redirects. Such servers are less likely to be blacklisted, because the goal of a blacklist is to prevent malware from reaching unsuspecting users without limiting access to legitimate websites. The comparatively lower proportion of PUAs relative to malware may indicate that blacklisted Web servers have more ambitious malicious intentions than most Web servers.

Comparing the malware collected in Experiments 2 and 3 yields interesting results. The only ransomware we saw came from the random Web servers in Experiment 2. Ransomware is particularly damaging to victims, yet the random Web server sample yielded mostly PUAs, with only a few instances of serious ransomware. Another interesting observation was the difference between the two experiments in the operating systems targeted by the malware. In Experiment 2, the malware almost entirely targeted Windows, whereas most malware from the blacklist targeted Unix and Linux systems. Our test environment used a Linux-based operating system to host Thug, but we configured Thug to emulate a Windows XP system. The prevalence of Unix-targeted malware suggests that the blacklisted Web servers may use more sophisticated techniques to identify the host operating system of the victim machine than the malicious Web servers in the random sample do. This observation suggests that Thug's deception capabilities could be improved to fool servers more effectively.

V. Conclusions

We demonstrated methods for finding and collecting malware for analysis using client-side honeypots such as Thug. We tested Thug's functionality, and our experiments confirmed its usefulness in collecting empirical data in a real-world scenario. Its benefit is most apparent when examining the relatively rare malicious sites, since on randomly chosen sites it mostly finds uninteresting anomalies. Further analysis of our experimental data could reveal interesting trends in the types of malware collected or in the client-server interactions that led to malware downloads. Overall, client-side honeypots provide value beyond that of standard anti-malware tools.

Acknowledgment

Opinions expressed are those of the authors and do not represent the U.S. Government.

References

[1] Clementson, C. (2009). Client-side threats and a honeyclient-based defense mechanism, Honeyscout [Linköpings Universitet, Institutionen för systemteknik]. Retrieved June 27, 2022, from http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-20104

[2] Dell'Aera, A. (2022). Thug Documentation (3.14) [Python]. https://thug-honeyclient.readthedocs.io/en/latest/genindex.html.

[6] Joshi, R. C., & Sardana, A. (2011). Honeypot: A new paradigm to information security.

[7] Obied, A., and Alhajj, R. (2009). Fraudulent and malicious sites on the Web. Artificial Intelligence, 30, 223-120.

[8] Pinoy, J., Van Den Broek, F., & Jonker, H. (2021). On the awareness of and preparedness and defenses against cloaking malicious web content delivery. M. S. thesis, Open University of the Netherlands, August 2021. www.open.ou.nl/hjo/supervision/2021-jeroen-pinoy-msc-thesis.pdf.

[9] Qassrawi, M. T., & Zhang, H. (2011). Detecting Malicious Web Servers with Honeyclients. Journal of Networks, 6(1), 145–152. https://doi.org/10.4304/jnw.6.1.145-152

[11] Velkumar, K., & Thendral, P. (2020). Web crawlers and Web crawler algorithms: a perspective. International Journal of Engineering and Advanced Technology (IJEAT), 9(5), 203–205. https://doi.org/10.35940/ijeat.E9362.069520

[12] Wang, Y.-M., Beck, D., Jiang, X., & Roussev, R. (2005). Automated Web Patrol with Strider HoneyMonkeys: Finding Web Sites That Exploit Browser Vulnerabilities. Proc. NDSS Symposium 2006. https://www.ndss-symposium.org/ndss2006/automated-web-patrol-strider-honeymonkeys-finding-web-sites-exploit-browser-vulnerabilities/

[13] Zulkurnain, N., Rebitanim, A., & Malik, N. (2018). Analysis of THUG: A Low-Interaction Client Honeypot to Identify Malicious Websites and Malwares. 2018 7th International Conference on Computer and Communication Engineering (ICCCE), 135–140. https://ieeexplore.ieee.org/servlet/opac?punumber=8510540

[14] Keats, S., Nunes, D., & Greve, P. (2007). Mapping the Mal Web. McAfee SiteAdvisor, 12.

[16] Exploit:HTML/Phominer.A threat description. Microsoft Security Intelligence. (2017). Retrieved October 16, 2022, from https://www.microsoft.com/en-us/wdsi/threats/malware-encyclopedia-description?Name=Exploit%3AHTML%2FPhominer.A