Two Taxonomies of Deception for Attacks on Information Systems

Neil C. Rowe and Hy S. Rothstein

U.S. Naval Postgraduate School

Monterey, CA 93943

ncrowe@nps.edu, hsrothst@nps.edu

Abstract

'Cyberwar' is information warfare directed at the software of information systems. It represents an increasing threat to our militaries and civilian infrastructures. Six principles of military deception are enumerated and applied to cyberwar. Two taxonomies of deception methods for cyberwar are then provided, making both offensive and defensive analogies from deception strategies and tactics in conventional war to this new arena. One taxonomy has been published in the military literature, and the other is based on case theory in linguistics. The application of both taxonomies to cyberwar is new. We then show how to quantify and rank proposed deceptions for planning using 'suitability' numbers associated with the taxonomies. The paper provides planners for cyberwar with a more comprehensive enumeration than any yet published of the tactics and strategies that they and their enemies may use. Some analogies to deception in conventional warfare hold, but many do not, and careful thought and preparation must be applied to any deception effort.

Keywords: Deception, information warfare, information systems, tactics, defense, decoys, honeypots, lying, disinformation.

This paper appeared in the Journal of Information Warfare, 3 (2), July 2004, 27-39.

INTRODUCTION

Today, when our computer networks and information systems are increasingly subject to warfare, it is important to investigate effective strategies and tactics for such warfare. Traditionally our information systems are seen as fortresses that must be fortified against attack. But this is only one of several useful military metaphors. Deception has always been an integral part of warfare. Can analogs of conventional deceptive tactics be used to attack and defend information systems? Used defensively, such tactics could add a quite different dimension to the usual access-control defenses like user authentication and cryptography, and could be part of an increasingly popular idea called 'active network defense'. New tactics are especially needed against the emerging threats of terrorism.

Deception is usually most effective when used by a weaker force against a stronger one. Cyberwar is different from conventional warfare: most of the arguments of von Clausewitz (von Clausewitz, 1993) for the advantage of defense in warfare do not hold because their assumptions are invalid beyond nineteenth-century warfare. (For instance, the advantage of the defenders of a fortress in being able to see the enemy coming does not hold in cyberspace.) Much of the routine business of the developed world, and important portions of its military activities, are easily accessible on the Internet. Since there are so many access points to defend, and few 'fortresses', it is appealing for an enemy to use deception to overwhelm, neutralize, or subvert sites to achieve the enemy's goals. With resources spread thin, deception may also be essential for defenders.

Historically, deception has been quite useful in war (Dunnigan and Nofi, 2001) for four general reasons. First, it increases freedom of action to carry out tasks by diverting the opponent’s attention away from the real action being taken. Second, deception schemes may persuade an opponent to adopt a course of action that is to their disadvantage. Third, deception can help to gain surprise. Fourth, deception can preserve one's resources. Deception does raise ethical concerns, but defensive deception is acceptable in most ethical systems (Bok, 1978).

CRITERIA FOR GOOD DECEPTION

In this discussion, we will focus on 'cyberwar', which we define as attacks by a nation or quasi-national organization on the software and data (as opposed to the people) of an information system. Attacks like this can be several degrees more sophisticated than the amateur attacks ('hacking') frequently reported on systems today. Nonetheless, many of the same attack techniques must be employed.

Fowler and Nesbitt (1995) suggest six general principles for effective tactical deception in warfare based on their knowledge of air-land warfare. They are:

- Deception should reinforce enemy expectations.

- Deception should have realistic timing and duration.

- Deception should be integrated with operations.

- Deception should be coordinated with concealment of true intentions.

- Deception realism should be tailored to needs of the setting.

- Deception should be imaginative and creative.

For instance, in the well-known World War II deception operation 'Operation Mincemeat' (Montagu, 1954), false papers were put in a briefcase and attached to a corpse that was dumped off the coast of Spain. Some of the papers suggested that the Allies would attack places in the Mediterranean other than Sicily, the intended invasion point. This deception was integrated with operations (Principle 3), the invasion of Sicily. Its timing was shortly before the operation (Principle 2) and it was coordinated with tight security on the true invasion plan (Principle 4). It was tailored to the needs of the setting (Principle 5) by not attempting to convince the Germans of much more than necessary. It was creative (Principle 6), since corpses with fake documents are unusual. Also, enemy preconceptions were reinforced by this deception (Principle 1), since Churchill had spoken of attacking the Balkans and both sides knew the coast of Sicily was heavily fortified. Mincemeat did fool Hitler (though not some of his generals) and has been alleged to have caused some diversion of Axis resources.

Now let us apply the principles to cyberwar.

- Principle 1 says we should reinforce an enemy's expectations. Fortunately, attacks on information systems have only a few strategic goals: control the system, prevent normal operations ('denial of service'), collect intelligence about information resources, and propagate the attack to neighboring systems. So deception must focus on these, and because of the limited communications bandwidth between people and computers, deception can be focused on the messages between them.

- Principle 2 says that, however deceptions are accomplished, they must not be too slow or too fast compared to the activities they simulate (Bell and Whaley, 1991). For instance, a deliberate delay in responding to a command should be long enough to make it seem that some work is being done, but not so long that the attacker suspects something unusual. Information systems avoid most of the nonverbal cues that reveal deceptions (Miller and Stiff, 1993), but timing is important.

- Principle 3 says that deceptions unconnected with operations are likely to be counterproductive, because they warn the enemy of the methods of subsequent attack, and surprise is important in cyberwar. It also argues against using 'honeypots' and 'honeynets' (The HoneyNet Project, 2002) as primary defensive deception tools. These are computers and computer networks (sometimes just simulated) that serve no normal users but bait the enemy to collect data about enemy methods.

- Principle 4 suggests that a deception must be comprehensive and consistent. For instance, if someone masquerades as a legitimate user to break into a computer, they should act as much like that user as possible. Similarly, to convince an attacker that they have downloaded a malicious file, we must maintain this pretense in the file-download utility, the directory-listing utility, the file editors, file-backup routines, the Web browser, and the execution monitor. So we need to systematically plan deceptions using such tools as 'software wrappers' (Michael et al., 2002) around all key software components.

- On the other hand, Principle 5 alerts us that we need not always provide very detailed deceptions if we know our attackers. For instance, most methods to seize control of a computer system involve downloading modified operating-system components ('rootkits') and installing them. So it is valuable to make the file-download and directory-listing utilities deceptive, but not the archiving software or the debuggers.

- Principle 6 ('creativity') may be difficult to achieve in computerized responses, but degrees of randomness in an automated attack or defense may simulate it, and methods from the field of artificial intelligence can produce some convincing creative activity.
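Principle 2 can be made concrete with a small sketch. It assumes the defender has some estimate of how long a genuine operation would take (here derived from a hypothetical transfer rate) and returns a delay close to that estimate with random variation, clamped so it is never implausibly fast or slow. The function names and parameter values are illustrative assumptions, not part of any published system.

```python
import random
from typing import Optional


def expected_transfer_time(size_bytes: int, bytes_per_second: float) -> float:
    """Estimate how long a genuine transfer of size_bytes would take.

    bytes_per_second is a hypothetical, planner-supplied rate for this system.
    """
    return size_bytes / bytes_per_second


def plausible_delay(expected_seconds: float, jitter: float = 0.25,
                    max_factor: float = 2.0,
                    rng: Optional[random.Random] = None) -> float:
    """Return a deliberate delay close to the time the simulated work would take.

    jitter: fractional random variation, so repeated delays are not identical.
    max_factor: upper cap relative to the estimate, so the wait never becomes
        long enough to look suspicious; the lower cap is half the estimate,
        so the response is never implausibly fast.
    """
    if rng is None:
        rng = random.Random()
    delay = expected_seconds * (1.0 + rng.uniform(-jitter, jitter))
    return min(max(delay, 0.5 * expected_seconds), max_factor * expected_seconds)
```

A caller would pass the result to `time.sleep` before returning its (possibly fake) response, for example `time.sleep(plausible_delay(expected_transfer_time(2_000_000, 500_000)))` for a simulated download of about four seconds.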

A TAXONOMY OF MILITARY DECEPTION

Given the above principles, specific kinds of deception for information systems under warfare-like attacks can be considered. Several taxonomies of deception in warfare have been proposed. Dunnigan and Nofi (2001) proposed a popular taxonomy with these deception types:

a. Concealment ('hiding your forces from the enemy')

b. Camouflage ('hiding your troops and movements from the enemy by artificial means')

c. False and planted information (disinformation, 'letting the enemy get his hands on information that will hurt him and help you')

d. Lies ('when communicating with the enemy')

e. Displays ('techniques to make the enemy see what isn't there')

f. Ruses ('tricks, such as displays that use enemy equipment and procedures')

g. Demonstrations ('making a move with your forces that implies imminent action, but is not followed through')

h. Feints ('like a demonstration, but you actually make an attack')

i. Insight ('deceive the opponent by outthinking him')

Table 1 summarizes our assessment of the application of this taxonomy to cyberwar offense and defense (with 10 = most appropriate, 0 = inappropriate).

Table 1: Summary of our assessment of deceptive types in information-system attack.

| Deception type | Useful for accomplishing a cyberwar attack? | Useful in defending against a cyberwar attack? |
|---|---|---|
| concealment of resources | 2 | 2 |
| concealment of intentions | 7 | 10 |
| camouflage | 5 | 3 |
| disinformation | 2 | 4 |
| lies | 1 | 10 |
| displays | 1 | 6 |
| ruses | 10 | 1 |
| demonstrations | 3 | 1 |
| feints | 6 | 3 |
| insights | 8 | 10 |

Concealment

Concealment for conventional military operations uses natural terrain features and weather to hide forces and equipment from an enemy. A cyber-attacker can try to conceal their suspicious files and directories in little-visited places in an information system. However, this will not work very well, because automated tools allow rapid search in cyberspace and intrusion-detection systems allow automatic checking for suspicious activity in large volumes of records. 'Steganography', or hiding secrets in innocent-looking information, is only good for data, not programs. Nevertheless, concealment of intentions is important for both attack and defense in cyberspace, especially for defense, where it is unexpected.

Camouflage

Camouflage aims to deceive the senses artificially. Examples are aircraft with muffled engines and devices for dissipating heat signatures, and flying techniques that minimize enemy detection efforts (Latimer, 2001). Attackers of a computer system can camouflage themselves as legitimate users, but this is not very useful because most computer systems do not track user history very deeply. However, camouflaged malicious software has become common in unsolicited ('spam') electronic mail, for instance email saying 'File you asked for' with a virus-infested attachment. Such camouflage could be adapted for offensive cyberwar. Defensive camouflage is not very useful, because moving or renaming resources impedes legitimate users as well as attackers, and it does not protect against many attacks, such as buffer overflows, anyway.

False and planted information

The Mincemeat example used false planted information, and false 'intelligence' could similarly be planted on computer systems or Web sites to divert or confuse attackers or even defenders. Scam Web sites (Mintz, 2002) use a form of disinformation. But most false information about a computer system is easy to check with software: a honeypot is often not hard to recognize from its lack of records of normal activity. (It may fool amateur hackers, but not experienced attackers.) Disinformation must not be easily disprovable, and that is hard to achieve. Attackers are also unlikely to read disinformation during an attack, and a single detected lie can make an enemy mistrustful of all other statements made, just as one mistake can destroy the illusion in stage magic (Tognazzini, 1993).

Lies

The Soviets during the Cold War used disinformation by repeating a lie often until it seemed to be the truth. This was very effective in overrepresenting Soviet military capabilities during the 1970s and 1980s (Dunnigan and Nofi, 2001). Lies might help an attacker a little in cyberwar, though stealth is more effective. However, lies about information systems are often an easy and useful defensive deceptive tactic. Users of an information system assume that everything the system tells them is true. And users of today's complex operating systems like Microsoft Windows are well accustomed to annoying and seemingly random error messages that prevent them from doing what they want. The best things to lie about could be the most basic things that matter to an attacker: the existence of resources and his or her ability to use them. So a computer system could issue false error messages when asked to do something suspicious, or could lie that it cannot download or open a suspicious file when it really can (Michael et al., 2003).
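The false-error idea, combined with the consistency that Principle 4 demands, can be sketched as a single shared layer that several user-facing utilities consult. This is a hypothetical illustration: the class, its methods, and the excuse text are invented here, and a real system would attach such logic to actual file utilities through software wrappers rather than to an in-memory dictionary.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DeceptiveFileLayer:
    """Hypothetical wrapper layer shared by the listing, open and download utilities.

    Files flagged as suspicious remain intact, but every utility gives the
    same false excuse for them, so no command contradicts another.
    """
    real_files: dict[str, bytes]
    suspicious: set[str] = field(default_factory=set)
    excuse: str = "Error: file is corrupted and cannot be read"

    def flag(self, name: str) -> None:
        # Would be called by an intrusion-detection hook (assumed, not shown).
        self.suspicious.add(name)

    def list_dir(self) -> list[str]:
        # We lie about usability, not existence, so flagged files stay listed.
        return sorted(self.real_files)

    def open_file(self, name: str) -> str:
        if name not in self.real_files:
            return "Error: no such file"
        if name in self.suspicious:
            return self.excuse  # false error: the file could really be opened
        return self.real_files[name].decode(errors="replace")

    def download(self, name: str) -> str:
        if name not in self.real_files:
            return "Error: no such file"
        if name in self.suspicious:
            return self.excuse  # same excuse as open_file, for consistency
        return f"Transferred {len(self.real_files[name])} bytes"
```

Because `open_file` and `download` read the same `suspicious` set, an attacker who retries a flagged file with a different utility meets the same story.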

Displays

Displays aim to make the enemy see what is not there. Dummy positions, decoy targets, and battlefield noise fabrication are examples. Stealth is more valuable than displays in attacking an information system. Defenders do not care what an attacker looks like because they know attacks can come from any source.

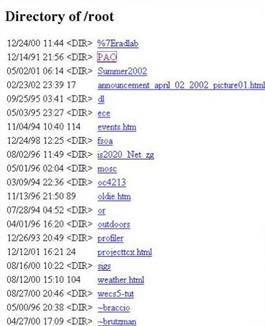

But defensively, displays have several valuable uses for an information system. One use is to make the attacker see imaginary resources, as with a prototype deceptive Web site we created (Figure 1). It looks like a Web portal to a large directory, displaying a list of typical-sounding files and subdirectories, but everything is fake. The user can click on subdirectory names to see plausible subdirectories, and can click on file names to see what appear to be encrypted files in some cases (the bottom of Figure 1) and image-caption combinations in other cases (the top right of Figure 1). A proportion of the file and directory names are chosen at random. The 'encrypted' content is just the output of a random number generator. The image-caption pairs are drawn randomly from a complete index of all images and captions at our school, so they are unrelated to the names used in the directory. For some of the files, the site claims 'You are not authorized to view this page' or gives another error message when asked to open them. The site is a prototype of a way to entice spies to waste time by encouraging them to find surprising connections between concepts.
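The fake-directory display can be approximated in a few lines. This is not the prototype's code: the vocabulary and captions below are invented placeholders, and seeding the generator from the path is our assumption, made so that revisiting a directory shows the same contents (which supports the consistency of Principle 4).

```python
from __future__ import annotations

import random

# Invented placeholders; the prototype drew names and image-caption pairs
# from its own sources, which are not reproduced here.
NAME_PARTS = ["budget", "ops", "plan", "test", "archive", "draft", "review", "2003"]
IMAGES = [("img001.jpg", "Main gate"), ("img002.jpg", "Lab bench"),
          ("img003.jpg", "Field exercise")]
DENIAL = "You are not authorized to view this page"


def fake_listing(path: str, n: int = 8, seed: int = 0) -> list[str]:
    """Return n plausible but fake entry names; names ending in '/' are subdirectories."""
    rng = random.Random(f"{seed}:{path}")  # same path -> same listing
    entries: set[str] = set()
    while len(entries) < n:
        stem = "_".join(rng.sample(NAME_PARTS, 2))
        if rng.random() < 0.3:
            entries.add(stem + "/")
        else:
            entries.add(stem + rng.choice([".doc", ".jpg", ".dat"]))
    return sorted(entries)


def fake_open(path: str, name: str, seed: int = 0) -> str:
    """Return fake contents: an access denial, an unrelated image-caption pair,
    or 'encrypted' text that is really just random bytes shown as hex."""
    rng = random.Random(f"{seed}:{path}/{name}")
    roll = rng.random()
    if roll < 0.2:
        return DENIAL
    if roll < 0.6:
        image, caption = rng.choice(IMAGES)
        return f"[{image}] {caption}"
    return bytes(rng.getrandbits(8) for _ in range(32)).hex()
```

The proportions (20% denials, 40% image-caption pairs, 40% 'encrypted' files) are arbitrary choices for the sketch.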

Another defensive use of displays is to confuse the attacker's damage assessment. Simply delaying a response to an attacker may make them think that they have slowed your system in a 'denial-of-service' attack (Julian et al., 2003). Unusual characters typed by the attacker or attempts to overflow input boxes (classic attack methods for many kinds of software) could initiate pop-up windows that appear to be debugging facilities or other system-administrator tools, as if the user has 'broken through' to the operating system. Computer viruses and worms often have distinctive symptoms that are not hard to simulate, much like faking of damage from bombing a military target.
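The trigger for such a fake administrator tool can be sketched as a simple input check. The length limit, the banner text, and the rule that any non-printable character is suspicious are all assumptions for illustration; a deployed check would be tuned to the actual input fields, and this crude rule would also flag some legitimate non-ASCII input.

```python
import string

FIELD_LIMIT = 256  # hypothetical maximum length of a legitimate entry
# Invented banner imitating a debugging facility the attacker seems to have reached.
FAKE_DEBUG_BANNER = "DEBUG MONITOR (maintenance mode)\n> "


def looks_like_attack(text: str, limit: int = FIELD_LIMIT) -> bool:
    """Flag overlong input (a possible buffer-overflow attempt) or any
    character outside Python's string.printable set."""
    if len(text) > limit:
        return True
    return any(ch not in string.printable for ch in text)


def respond(text: str) -> str:
    """Handle one input field: normal processing, or a fake 'break-through'."""
    if looks_like_attack(text):
        return FAKE_DEBUG_BANNER
    return "OK"  # stand-in for normal processing
```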


Figure 1: Example fake directory and file display from our software.

Ruses

Ruses attempt to make opponents think they are seeing their own troops or equipment instead of the enemy (Bell and Whaley, 1991). Modern ruses can use electronic means like impersonators transmitting orders to enemy troops. Most attacks on computer systems are ruses that amount to variants of the ancient idea of sneaking your men into the enemy's fortress by disguising them, as with the original Trojan Horse. Attackers can pretend to be system administrators, and software with malicious modifications can pretend to be unmodified. Ruses are not much help defensively. For instance, pretending to be a hacker is hard to exploit. If this is done to offer false information to the enemy, the same problems discussed regarding planted information are present. It is also hard to convince an enemy you are an ally unless you actually do something malicious to a third party since there are simple ways to confirm most malicious acts.

Demonstrations

Demonstrations use military power, normally through maneuvering, to distract the enemy. In 1991, General Schwarzkopf used deception to convince Iraq that a main attack would be directly into Kuwait, supported by an amphibious assault (Scales, 1998). Aggressive ground-force patrolling, artillery raids, amphibious feints, ship movements, and air operations were part of the deception. Demonstrations of the strength of an attacker's methods or a defender's protections are likely to be counterproductive for information systems: an adversary gains a greater sense of achievement from subverting a more impressive opponent. However, bragging might encourage attacks on a honeypot and might generate useful data.

Feints

Feints are similar to demonstrations, except that an attack is actually made, to distract the enemy from a main attack elsewhere. Operation Bodyguard, supporting the Allied Normandy invasion in 1944 (Breuer, 1993), was a feint that included visual deception and misdirection, dummy forces and equipment, lighting schemes, and radio deception. Feints by the attacker in cyberwar could involve attacks with less-powerful methods, to encourage the defender to overreact and be less prepared for a subsequent main attack involving a different method. This may be happening now at a strategic level, as methods used frequently by hackers, like buffer overflows and viruses in email attachments, get overreported in the press at the expense of less-used methods like 'backdoors' that are better for offensive cyberwar.

Defensive counterattack feints in cyberwarfare face the problem that finding an attacker is very difficult in cyberspace, since attackers can conceal their identities by coming in through chains of hundreds of sites. However, defensive feints could be used effectively to pretend to succumb to one form of attack in order to conceal a second, less-obvious defense. For instance, buffer-overflow attacks could be denied with a warning message on most 'ports' (access points) of a computer system, but the system could pretend to allow them on a few ports for which the effects of succumbing to the attack (error messages, privileged access, etc.) are simulated. This is a form of the classic tactic of multiple lines of defense.
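The port feint can be sketched as a per-port policy. The port numbers and the fake root prompt are hypothetical; the sketch shows only that the same attack gets an honest refusal on most ports and a simulated success on a few decoy ports, while no real privilege is granted anywhere.

```python
from __future__ import annotations

from dataclasses import dataclass

REFUSAL = "Warning: malformed request rejected"
FAKE_ROOT_PROMPT = "root@server:~# "  # invented; simulates privileged access


@dataclass(frozen=True)
class OverflowFeintPolicy:
    decoy_ports: frozenset[int]  # hypothetical ports where we pretend to succumb

    def handle_overflow_attempt(self, port: int) -> str:
        """Return what the attacker sees; the real system state is never changed."""
        if port in self.decoy_ports:
            return FAKE_ROOT_PROMPT  # simulated effect of a successful overflow
        return REFUSAL
```

For example, `OverflowFeintPolicy(frozenset({8080, 2323}))` refuses the attack on every port except 8080 and 2323, where it shows the fake prompt.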

Insights

A good understanding of the enemy can be valuable in tailoring deceptions for them. For instance, the Egyptian plan for the 1973 Yom Kippur War capitalized on Israeli and Western perceptions of the Arabs, including a perceived inability to keep secrets, military inefficiency, and inability to plan and conduct a coordinated action. The Egyptian deception plan provided plausible incorrect interpretations for a massive build-up along the Suez Canal and the Golan Heights by a series of false alerts (Stein, 1982). Attackers in cyberwar can use insights to figure out the enemy's weaknesses in advance. Hacker bulletin boards support this by reporting exploitable flaws in software. Defensive deception could involve trying to think like the attacker and figuring out the best way to interfere with common attack plans. Methods of artificial intelligence help (Rowe, 2003). 'Counterplanning', systematic analysis with the objective of thwarting or obstructing an opponent's plan, can be used (Carbonell, 1981). Counterplanning is analogous to placing obstacles along expected enemy routes in conventional warfare.

A good counterplan should not try to foil an attack by every possible means: we can be far more effective by choosing a few related 'ploys' and presenting them well. Consider an attempt by an attacker to gain control of a computer system by installing their own 'rootkit', a gimmicked copy of the operating system. This almost always involves finding vulnerable systems by exploration, gaining access to those systems at vulnerable ports, getting administrator privileges on those systems, using those privileges to download gimmicked software, installing the software, testing the software, and using the system to attack others. If this plan can be formulated precisely, each opportunity for causing trouble for the attacker can be evaluated. Often it is best to foil the later steps in a plan, because recovering from a late failure costs the attacker more work. For instance, we could delete a downloaded rootkit after it has been copied. So good deception in cyberwar needs a carefully designed plan, just as in conventional warfare.
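One way to evaluate the opportunities in such a plan is sketched below. The effort and feasibility numbers are invented for illustration, and scoring a ploy by the attacker effort invested up to that step, weighted by how feasible it is for the defender to interfere there, is our simplifying assumption: a crude stand-in for full counterplanning.

```python
# Rootkit-installation plan from the text. The effort units and the
# defender's feasibility of foiling each step are hypothetical numbers.
PLAN = [
    ("find vulnerable systems", 3, 0.5),
    ("gain access at a vulnerable port", 2, 0.6),
    ("get administrator privileges", 4, 0.7),
    ("download rootkit", 1, 1.0),
    ("install rootkit", 2, 0.9),
    ("test rootkit", 1, 0.8),
    ("attack other systems", 2, 0.2),
]


def rank_ploys(plan, k=3):
    """Return the k steps where foiling the attacker is estimated to cost them most.

    Score = attacker effort invested up to and including the step, times the
    defender's feasibility of interfering at that step.
    """
    scored = []
    invested = 0
    for step, effort, feasibility in plan:
        invested += effort
        scored.append((invested * feasibility, step))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [step for _, step in scored[:k]]
```

With these numbers the top three ploys are the install, test, and download steps. If all feasibility weights were equal, the cumulative-effort score would simply favor the latest steps, as the text suggests; the hypothetical weights are what keep the final, hard-to-foil step from dominating.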

A DEEPER THEORY OF DECEPTION IN INFORMATION SYSTEMS

An alternative and deeper theory of deception can be developed from the theory of semantic cases in computational linguistics (Fillmore, 1968). This theory says that every action can be associated with a set of objects and methods necessary to accomplish it; these are semantic cases, a generalization of syntactic cases in language such as the direct object of a verb. Various additions to Fillmore's basic nine-case framework have been proposed, of which that of Copeck et al. (1992) is the most comprehensive. To Copeck's 28 cases we add four more, the upward type-supertype and part-whole links and two speech-act conditions (Austin, 1975), to get 32 altogether:

- participant
  - agent (the person who initiates the action), e.g. 'Joe' in 'Joe sent the email'
  - beneficiary (the person who benefits), e.g. 'Tom' in 'Joe got a password for Tom'
  - experiences (a psychological feature associated with the action), e.g. 'angry' in 'Joe became angry'
  - instrument (something that helps accomplish the action), e.g. 'software' in 'Joe read the email with Outlook software'
  - object (what the action is done to), e.g. 'attachment' in 'Joe opened the email attachment'
  - recipient (the person who receives the action), e.g. 'Tom' in 'Joe sent Tom the attachment'

- space
  - direction (of the action), e.g. 'forward' in 'Joe sent the email forward'
  - location-at, e.g. 'computer' in 'Joe’s email on his home computer'
  - location-from, e.g. 'Joe sent the email from his home computer'
  - location-to, e.g. 'Joe sent email to Tom’s computer'
  - location-through, e.g. 'firewall' in 'Joe sent email through the firewall'
  - orientation (in some metric space), e.g. 'reply' in 'Joe’s email reply'

- time
  - frequency (of occurrence), e.g. 'daily' in 'Joe’s daily email'
  - time-at, e.g. 'noon' in 'Joe’s email at noon'
  - time-from, e.g. 'Joe’s emails sent from noon onwards'
  - time-to, e.g. 'Joe’s email process ending at noon'
  - time-through, e.g. 'Joe’s email sent during the noon break'

- causality
  - cause, e.g. 'bug' in 'Joe’s email got lost due to a bug'
  - contradiction (what this action contradicts, if anything), e.g. 'crash' in 'Joe's email got through despite the crash'
  - effect, e.g. 'crash' in 'Joe’s email virus caused a crash'
  - purpose, e.g. 'reputation' in 'Joe’s email was crafted for his reputation'

- quality
  - accompaniment (additional object associated with the action), e.g. 'virus' in 'Joe’s email included a virus'
  - content (type of the action object), e.g. 'graphics' in 'Joe’s email of graphics'
  - manner (the way in which the action is done), e.g. 'stupid' in 'Joe’s stupid email'
  - material (the atomic units out of which the action is composed), e.g. 'bits' in 'Joe’s email in bits'
  - measure (the measurement associated with the action), e.g. '10K byte' in 'Joe’s 10K byte email'
  - order (of events), e.g. 'last' in 'Joe’s last email'
  - value (the data transmitted by the action), e.g. '37' in 'Joe emailed the password 37'

- essence
  - supertype (generalization of the action type), e.g. 'email' in 'Joe sent the offer email'
  - whole (of which the action is a part), e.g. 'document' in 'Joe sent the offer document'

- speech-act theory
  - precondition (on the action), e.g. 'crash' in 'The computer crash prevented Joe's email'
  - ability (of the agent to perform the action), e.g. 'know' in 'Joe didn't know how to send email'

Our claim is that deception operates on an action to change its perceived associated case values. For instance, the original Trojan Horse modified the purpose of a gift-giving action (it was an attack, not a peace offering), its accompaniment (the gift had hidden soldiers), and its 'time-to' (the war was not over). Similarly, an attacker masquerading as the administrator of a computer system is modifying the agent and purpose cases associated with their actions on that system. The fake-directory prototype shown in Figure 1 uses deceptions in object (it isn't a real directory interface), 'time-through' (some responses are deliberately delayed), cause (it lies about files being too big to load), precondition (it lies about necessary authorization), and effect (it lies about the existence of files and directories).
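The claim that deception changes perceived case values can be expressed as a small data structure. This is a sketch under our own modeling assumptions: an action is a verb plus a mapping from case names to values, and a deception replaces some of those values in the version the victim perceives. The value strings for the Trojan Horse example are our paraphrases.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    verb: str
    cases: dict[str, str]  # semantic case name -> value


def perceived(action: Action, false_values: dict[str, str]) -> Action:
    """The action as the deceived party sees it: selected case values are replaced."""
    return Action(action.verb, {**action.cases, **false_values})


def deceived_cases(real: Action, seen: Action) -> list[str]:
    """Cases whose perceived value differs from the real one."""
    return sorted(c for c, v in real.cases.items() if seen.cases.get(c) != v)


# Trojan Horse example from the text; the value strings are our own paraphrases.
trojan = Action("give", {
    "agent": "Greeks", "recipient": "Trojans", "object": "wooden horse",
    "purpose": "attack", "accompaniment": "hidden soldiers", "time-to": "war continues",
})
trojan_seen = perceived(trojan, {
    "purpose": "peace offering", "accompaniment": "nothing", "time-to": "war over",
})
```

Here `deceived_cases(trojan, trojan_seen)` recovers exactly the three cases named in the text: accompaniment, purpose, and 'time-to'.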

A deception can involve more than one case simultaneously, so there are many combinations. However, not all of the above cases make sense in cyberspace or for our particular concern, the interaction between an attacker and the software of a computer system. The following can be ruled out:

· beneficiary (the attacker is the assumed beneficiary of all activity)

· experiences (deception in associated psychological states doesn't matter in giving commands and obeying them)

· recipient (the only agents that matter are the attacker and the system they are attacking)

· 'location-at' (you can't 'inhabit' cyberspace)

· orientation (there is no coordinate system in cyberspace)

· 'time-from' (attacks happen anytime)

· 'time-to' (attacks happen anytime)

· contradiction (commands do not include comparisons)

· manner (commands execute in only one way, apart from their duration)

· material (everything is bits and bytes in cyberspace)

· order (the order of commands or files rarely can be varied and cannot deceive either an attacker or defender)
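The count of remaining cases can be checked mechanically. The sketch below simply transcribes the 32 cases listed earlier and the 11 ruled out above, and recovers the 21 deception methods in the order in which they appear in Table 2.

```python
CASES = {
    "participant": ["agent", "beneficiary", "experiences", "instrument", "object", "recipient"],
    "space": ["direction", "location-at", "location-from", "location-to",
              "location-through", "orientation"],
    "time": ["frequency", "time-at", "time-from", "time-to", "time-through"],
    "causality": ["cause", "contradiction", "effect", "purpose"],
    "quality": ["accompaniment", "content", "manner", "material", "measure", "order", "value"],
    "essence": ["supertype", "whole"],
    "speech-act theory": ["precondition", "ability"],
}
RULED_OUT = {"beneficiary", "experiences", "recipient", "location-at", "orientation",
             "time-from", "time-to", "contradiction", "manner", "material", "order"}

ALL_CASES = [case for group in CASES.values() for case in group]
REMAINING = [case for case in ALL_CASES if case not in RULED_OUT]
print(len(ALL_CASES), len(REMAINING))  # 32 21
```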

In general, offensive opportunities for deception are as frequent as defensive opportunities, in cyberspace as well as in conventional warfare, but the appropriate methods differ. For instance, the instrument case is associated with offensive deceptions in cyberspace, since the attacker can choose among instruments such as email attachments, an insecure port, a backdoor, or regular access with a stolen password, while the defender has little control since they must use the targeted system and its data. That is different from conventional warfare where, say, the attacker can choose the weapons used in an aerial attack but the defender can choose tactics like concealment, decoys, and jamming. In contrast, 'time-through' is primarily associated with defensive deceptions in cyberspace, since the defending computer system controls the time it takes to process and respond to a command and is little affected by the time it takes an attacker to issue commands to it. However, 'object' can be associated with both cyberspace offense and defense, because attackers can choose to attack little-defended targets like unused ports, while defenders can substitute low-value targets like honeypots for high-value targets that the attacker thinks they are compromising. Table 2 summarizes the suitability of the remaining 21 deception methods, judged on general principles: 10 indicates the most suitable and 0 indicates unsuitable. These numbers could be refined by surveys of users or by deception experiments. This table provides a wide-ranging menu of choices for deception planners.

Table 2: Evaluation of deception methods in cyberspace.

| Deception method | Suitability for offense in information systems, with general example | Suitability for defense in information systems, with general example |
|---|---|---|
| agent | 4 (pretend attacker is legitimate user or is standard software) | 0 |
| instrument | 7 (attack with a surprising tool) | 0 |
| object | 8 (attack unexpected software or feature of a system) | 5 (camouflage key targets or make them look unimportant, or disguise software as different software) |
| direction | 2 (attack backward to site of a user) | 4 (transfer Trojan horses back to attacker) |
| location-from | 5 (attack from a surprise site) | 2 (try to frighten attacker with false messages from authorities) |
| location-to | 3 (attack an unexpected site or port if there are any) | 6 (transfer control to a safer machine, as on a honeynet) |
| location-through | 3 (attack through another site) | 0 |
| frequency | 10 (swamp a resource with tasks) | 8 (swamp attacker with messages or requests) |
| time-at | 5 (put false times in event records) | 2 (associate false times with files) |
| time-through | 1 (delay during attack to make it look as if attack was aborted) | 8 (delay in processing commands) |
| cause | 1 (doesn't matter much) | 9 (lie that you can't do something, or do something not asked for) |
| effect | 3 (lie as to what a command does) | 10 (lie as to what a command did) |
| purpose | 3 (lie about reasons for needing information) | 7 (lie about reasons for asking for authorization data) |
| accompaniment | 9 (a Trojan horse installed on a system) | 6 (software with a Trojan horse that is sent to attacker) |
| content | 6 (redefine executables; give false file-type information) | 7 (redefine executables; give false file-type information) |
| measure | 5 (send data too large to easily handle) | 7 (send data too large or requests too hard to attacker) |
| value | 3 (give arguments to commands that have unexpected consequences) | 9 (systematically misunderstand attacker commands) |
| supertype | 6 (pretend attack is something else) | 0 |
| whole | 8 (conceal attack in some other activity) | 0 |
| precondition | 5 (give impossible commands) | 8 (give false excuses for being unable to do something) |
| ability | 2 (pretend to be an inept attacker or have inept attack tools) | 5 (pretend to be an inept defender or have easy-to-subvert software) |

RANKING DECEPTIONS

The ideas presented so far can be brought together in a simple quantitative model for ranking deceptions. A deception planner must also take into account the suitability of the deception for the situation. This can also be rated on a scale of 0 to 10 and multiplied by the deception suitability from Table 2 to get a situational suitability. Multiplication is appropriate because the factors are independent and their suitabilities behave like probabilities. For instance, consider a defensive deception with regard to preconditions that involves pretending the network is down; this works best for attempts to connect across the network, less well for displaying network data that may have been cached from the last use of the network, and not at all for opening local files. Suitability of a deception should include its consistency with other deceptions. For instance, once we begin pretending to misunderstand commands due to faulty communications settings, we must do so every time, for every text command. Consistency is easier to maintain with a set of 'generic excuses' for deception, such as the defensive excuses 'the file system is broken' and 'the system is being debugged'.
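The situational factor and the 'generic excuse' idea can be sketched together. T2 = 8 is the Table 2 defensive suitability of a precondition deception; the situational values S below are hypothetical numbers chosen only to follow the ordering the text describes (best for network connections, worse for cached data, useless for local files). The excuse tracker is likewise an illustrative assumption about how consistency might be enforced.

```python
from __future__ import annotations

T2_PRECONDITION_DEFENSE = 8  # from Table 2

# Hypothetical situational suitabilities S (0-10) for "the network is down".
S_NETWORK_DOWN = {
    "connect across the network": 10,
    "display possibly cached network data": 4,
    "open a local file": 0,
}


def situational_suitability(t2: int, s: int) -> int:
    """Product of the Table 2 suitability and the situational suitability."""
    if not (0 <= t2 <= 10 and 0 <= s <= 10):
        raise ValueError("suitabilities must lie between 0 and 10")
    return t2 * s


class ExcuseTracker:
    """Once a session has been given an excuse, keep giving it the same one."""

    def __init__(self) -> None:
        self._chosen: dict[str, str] = {}

    def excuse_for(self, session: str, proposed: str) -> str:
        return self._chosen.setdefault(session, proposed)


for activity, s in S_NETWORK_DOWN.items():
    print(f"{activity}: {situational_suitability(T2_PRECONDITION_DEFENSE, s)}")
```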

Another mostly independent factor is the suitability of the deception type from our first taxonomy (Table 1). For instance, pretending the network is down is a form of lying. We can multiply this by the product of the above two factors to get an overall suitability ranging from 0 to 1000. This factor is not fully independent, because not every item of the first taxonomy can be used with every item of the second, but multiplication of this kind is used in the classic technique of 'Naive Bayes' analysis (Witten and Frank, 2000), which works well for many problems.

Finally, we must include a factor for the possibility that the suspicious user is not in fact an attacker but a legitimate user doing something atypical. This will help us decide when to start deceiving. Suppose the expected benefit of the deception if the user is an attacker is a positive number B (e.g. the change in the probability that the attacker will leave times the expected benefit of their leaving), and the expected cost of the deception to a legitimate user is a positive number C. Suppose the probability that the user is an attacker is P, a value which could be obtained from an intrusion-detection system (Lunt, 1993). Then the expected net benefit of the deception is (P*B)-((1-P)*C). This must be positive for any deception to be worthwhile, which holds exactly when P > C/(B+C). A more detailed cost model could also include the cost of the attacker discovering that they have been deceived, but this is hard to model.
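The decision rule above follows from solving P*B - (1-P)*C > 0 for P, which gives P*(B+C) > C. The short sketch below (function names are hypothetical; B and C are assumed positive, as in the text) computes the expected net benefit and the break-even probability C/(B+C).

```python
def expected_net_benefit(p: float, b: float, c: float) -> float:
    """P*B - (1-P)*C: gain if the user is an attacker minus cost if legitimate."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if b <= 0 or c <= 0:
        raise ValueError("b and c must be positive")
    return p * b - (1.0 - p) * c


def break_even_probability(b: float, c: float) -> float:
    """Attacker probability at which the expected net benefit is exactly zero."""
    return c / (b + c)


def should_deceive(p: float, b: float, c: float) -> bool:
    # Equivalent to expected_net_benefit(p, b, c) > 0, since
    # P*B - (1-P)*C > 0  <=>  P*(B+C) > C  <=>  P > C/(B+C).
    return p > break_even_probability(b, c)
```

With B = 10 and C = 1, the break-even probability is 1/11, roughly 0.09, so under this model even a fairly weak suspicion justifies a deception that costs legitimate users little.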

Putting this all together, deception opportunities can be ranked by the formula T1*T2*S*((P*B)-((1-P)*C)) where:

· T1 is the suitability of the deception from the first (military) taxonomy;

· T2 is the suitability of the deception from the second (case) taxonomy;

· S is the intrinsic suitability of the deception for the occasion to which it is to be applied;

· P is the probability that the user to whom the deception is applied is malicious;

· B is the expected benefit to us of our deception if the user is malicious; and

· C is the expected cost to us (a positive number, subtracted in the formula) of our deception if the user is nonmalicious.
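The ranking formula can be sketched as below. The `Candidate` dataclass and its field names are hypothetical; only the first candidate's numbers come from the file-transfer example in the text, and the others are invented for illustration. Candidates whose score is not positive are dropped, since a zero situation score or a negative net-benefit term means the deception is not worth attempting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    t1: float  # first (military) taxonomy suitability, 0-10
    t2: float  # second (case) taxonomy suitability, 0-10
    s: float   # suitability for this occasion, 0-10
    p: float   # probability the user is malicious
    b: float   # benefit if malicious (positive)
    c: float   # cost if nonmalicious (positive)

    def score(self) -> float:
        """T1*T2*S*((P*B) - ((1-P)*C))."""
        return self.t1 * self.t2 * self.s * (self.p * self.b - (1 - self.p) * self.c)


def rank(candidates: list[Candidate]) -> list[tuple[str, float]]:
    """Worthwhile candidates (score > 0), highest score first."""
    scored = [(cand.name, cand.score()) for cand in candidates]
    return sorted((x for x in scored if x[1] > 0), key=lambda x: x[1], reverse=True)


if __name__ == "__main__":
    options = [
        Candidate("claim network down during file transfer", 10, 8, 10, 0.2, 10, 1),
        # Hypothetical alternatives for comparison:
        Candidate("claim network down while opening local file", 10, 8, 0, 0.2, 10, 1),
        Candidate("pretend to be an inept defender", 6, 5, 7, 0.2, 8, 1),
    ]
    for name, value in rank(options):
        print(f"{value:8.1f}  {name}")
```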

For instance, if we lie that the system is down when a user tries to transfer a file from the network, and we estimate a 0.2 probability that the user is malicious, a benefit of 10 if the user is malicious, and a cost of 1 if the user is not, the formula gives 10*8*10*((0.2*10)-(0.8*1)) = 960, which can be compared with the scores of other proposed deception opportunities.
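Written out term by term, reading T1 = 10, T2 = 8, and S = 10 from the order of the factors in the formula, the example computes as follows; the last line checks it against the break-even condition P > C/(B+C) stated earlier.

```latex
\begin{aligned}
T_1 T_2 S \bigl(PB - (1-P)C\bigr) &= 10 \cdot 8 \cdot 10 \cdot \bigl(0.2 \cdot 10 - 0.8 \cdot 1\bigr) \\
&= 800 \cdot (2 - 0.8) = 800 \cdot 1.2 = 960, \\
\frac{C}{B+C} &= \frac{1}{10+1} \approx 0.091 < 0.2 = P .
\end{aligned}
```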

CONCLUSION

It is simplistic to think of cyberwar as just another kind of warfare. With our analysis of six fundamental principles of deception, we have seen several special requirements for deception in cyberwar. With our first taxonomy of deception methods, we have seen that many ideas from conventional warfare apply, but not all, and often those that apply do so in surprising ways. With our second taxonomy, we have seen that deception in cyberwar can arise in 21 ways from concepts associated with an action. We also see from both taxonomies that defensive and offensive purposes require different considerations, and that a simple numeric formula can guide selection of a good deception. As in conventional warfare, careful planning will be necessary for effective deception in cyberwar, and a basis for it has been provided here.

REFERENCES

Austin, J. L. (1975) How To Do Things With Words, 2nd edition, ed. Urmson, J., & Sbisà, M., Oxford University Press, Oxford.

Bell, J. B., & Whaley, B. (1991) Cheating and Deception, Transaction Publishers, New Brunswick, New Jersey.

Bok, S. (1978) Lying: Moral Choice in Public and Private Life, Pantheon, New York.

Breuer, W. B. (1993) Hoodwinking Hitler: The Normandy Deception, Praeger, London.

Carbonell, J. (1981). Counterplanning: A strategy-based model of adversary planning in real-world situations. Artificial Intelligence, 16, pp. 295-329.

von Clausewitz, K. (1993) On War, trans. Howard, M., & Paret, P., Everyman's Library, New York.

Copeck, T., Delisle, S., & Szpakowicz, S. (1992). Parsing and case interpretation in TANKA. Conference on Computational Linguistics, Nantes, France, pp. 1008-1023.

Dunnigan, J. F., & Nofi, A. A. (2001) Victory and Deceit, 2nd edition: Deception and Trickery in War, Writers Press Books, San Jose, California.

Fillmore, C. (1968). The case for case. In Universals in Linguistic Theory, ed. Bach, E., & Harms, R., Holt, Rinehart, & Winston, New York.

Fowler, C. A., & Nesbit, R. F. (1995). Tactical deception in air-land warfare. Journal of Electronic Defense, 18(6) (June), pp. 37-44 & 76-79.

The Honeynet Project (2002) Know Your Enemy, Addison-Wesley, Boston, Massachusetts.

Julian, D., Rowe, N., & Michael, J. B. (2003). Experiments with deceptive software responses to buffer-based attacks. IEEE-SMC Workshop on Information Assurance, West Point, New York, June, pp. 43-44.

Latimer, J. (2001) Deception in War, The Overlook Press, New York.

Lunt, T. F. (1993). A survey of intrusion detection techniques. Computers and Security, 12(4) (June), pp. 405-418.

Michael, J. B., Auguston, M., Rowe, N., & Riehle, R. (2002). Software decoys: intrusion detection and countermeasures. IEEE-SMC Workshop on Information Assurance, West Point, New York, June, pp. 130-138.

Michael, J. B., Fragkos, G., & Auguston, M. (2003) An experiment in software decoy design: Intrusion detection and countermeasures via system call instrumentation. Proc. IFIP 18th International Information Security Conference, Athens, Greece, May, pp. 253-264.

Miller, G. R., & Stiff, J. B. (1993) Deceptive Communications, Sage Publications, Newbury Park, California.

Mintz, A. P. (ed.) (2002) Web of Deception: Misinformation on the Internet, CyberAge Books, New York.

Montagu, E. (1954) The Man Who Never Was, Lippincott, Philadelphia, Pennsylvania.

Rowe, N. C. (2003). Counterplanning deceptions to foil cyber-attack plans. IEEE-SMC Workshop on Information Assurance, West Point, New York, June, pp. 203-211.

Scales, R. (1998) Certain Victory: The US Army in the Gulf War, Brassey's, New York.

Stein, J. G. (1982). Military deception, strategic surprise, and conventional deterrence: a political analysis of Egypt and Israel, 1971-73. In Military Deception and Strategic Surprise, ed. Gooch, J., & Perlmutter, A., Frank Cass, London, pp. 94-121.

Tognazzini, B. (1993). Principles, techniques, and ethics of stage magic and their application to human interface design. Conference on Human Factors and Computing Systems, Amsterdam, April, pp. 355-362.

Witten, I., & Frank, E. (2000) Data mining: Practical machine learning with Java implementations, Morgan Kaufmann, San Francisco, California.