Abstract - One of the best ways to defend a computer system is to make attackers think it is not worth attacking. Deception or inconsistency during attacker reconnaissance can be an effective way to encourage this. We provide some theory of its advantages and present some data from a honeypot that suggests ways it could be fruitfully employed. We then report on experiments that manipulated packets of attackers of a honeypot using Snort Inline. Results show that attackers definitely responded to deceptive manipulations, although not all the responses helped defenders. We conclude with some preliminary results on analysis of "last packets" of a session which indicate more precisely what clues turn attackers away.

Index terms - deception, computers, reconnaissance, honeypot, packets, cost, networks

This paper appeared in the Proceedings of the 8th IEEE Workshop on Information Assurance, West Point, NY, June 2007.

I. Introduction

Deception can be an effective tool for defending computer systems as a third line of defense when access controls and intrusion-prevention systems fail. Deception is an unexpected defense and can catch an attacker unaware. Deceptions provide a large variety of specific tactics that can confuse, scare away, or tie up an attacker depending on the circumstances and the methods. Examples of useful deceptions in cyberspace include false error messages, fake files and directories, and exaggerated delays [1].

Our previous research has investigated several useful deceptions for cyberspace defense [2]. However, deception is most effective if done early to reduce risk to defenders and better exploit unfamiliarity of the attacker with the defender. So deception during attacker reconnaissance could be especially helpful to defenders. It could convince attackers to go away because the system appears invulnerable to the exploits that they know; it could make the system look very unreliable by deliberate delays and error messages; or it could make the system look like a honeypot since attackers do not like honeypots. We can try out reconnaissance-phase deceptions in honeypots and see how attackers respond as a kind of "experimental information assurance".

II. Background

Deception is frequently used in cyber-attacks, most commonly as identity deception and concealment. Defensive deception most commonly appears in cyberspace in honeypots, machines deliberately designed to be attack targets [3]. While honeypots do not necessarily use deception, they are more effective if they do because attackers know honeypots collect their attack methods and are unlikely to be fully exploitable [4]. Honeypots can try to disguise their monitoring activities by concealing their monitoring software and monitoring messages. The Sebek tool of the Honeynet Project (www.honeynet.org) is a good example, a kind of "defensive rootkit". It conceals its monitoring process by rewriting the process-listing utility of the operating system to omit it, and conceals its monitoring code by modifying the operating-system directory-listing utility.

Deception can be used effectively for defense in other ways too. It can delay or even halt suspicious activity when implemented as false error messages, demands on the attackers, or outright stalling. Attackers depend on consistency of their attack targets, so any inconsistency can help defense. We explore this idea in this paper.

Deception is one of a spectrum of "active response" methods [5] that feature in intrusion-prevention systems, extensions of intrusion-detection systems that respond to suspicious behavior by stopping it in one of several ways, most commonly by blocking connections from particular external addresses. Deception is a more "proactive" active response than these that provides more flexibility to defenders.

Deception can induce counterdeception by the attacker [6]. [7] for instance reports on how the Sebek tool can be detected despite measures to conceal it. However, this is far from being a disadvantage of deception [2], as needing to do additional checks on a machine will make the attacker's job more difficult.

III. The advantages of reconnaissance

Attackers use the reconnaissance phase of their attack to apply various tests to a target machine, to determine such things as what version of an operating system it is running and what ports are open. However, testing requires time and knowledge, and not all attackers want to do it. We see many "brute force" exploits on our honeypots that do not. So we analyze here when a test is useful for an attacker. Assume:

· The attacker perceives an average cost (perhaps mostly in time) to find a potential victim system.

· The attacker perceives an average cost to subsequently compromise a randomly chosen system.

· Testing the victim machine to determine its properties has a cost.

· There is a prior probability that a random machine is confirmed as a honeypot by the test.

· The test is perfectly accurate.

· The attacker does the test at the first opportunity since there is no advantage to delay.

· If the test confirms a honeypot, the attacker will find another machine at random, continuing until they find a machine that passes the test.

· If the test does not confirm a honeypot, the attacker proceeds normally with the attack.

· The attacker must compromise a number of random machines, on average the reciprocal of the probability that a machine is not a honeypot, to get the benefit of compromising one non-honeypot.

Then the average cost per compromised machine when not testing will be greater than the average cost with testing if an inequality among these quantities holds. This involves an infinite geometric series, and the inequality can be simplified to an upper bound on the cost of testing.

This inequality holds for a large range of typical attack scenarios. For example, if the probability of test failure is 0.8, the cost of compromise is 5 minutes, and the cost of finding the machine is one minute, the test must take less than 3.2 minutes to be cost-effective. Test failure will be common since most tests check whether an unpatched operating system is installed, and the cost of compromise is generally much larger than the cost of both finding the machine and testing. So it will almost always be desirable to test and go away if the test fails, since there are plenty of vulnerable unpatched machines on the Internet. Note that this result applies to the design of automated attacks as well.

IV. Deception opportunities inferred from honeypot data

Networks display plenty of attack traffic without solicitation as part of the "background radiation" of the Internet [8]. New statistical techniques are emerging for studying it, and we can take advantage of them [9, 10, 11].

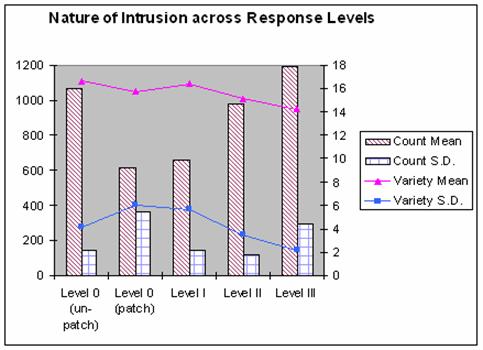

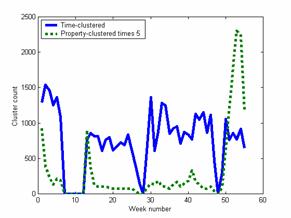

We have been running a honeypot for over a year through a digital subscriber line to an Internet service provider with no association with our school. The honeypot is kept up-to-date with operating-system patches so we see few break-ins but many reconnaissances. Figure 1 shows weekly totals of Snort alert data for the first 55 weeks as clustered two different ways: counting as 1 all alerts involving the same remote IP address within a 10 minute period, and counting the number of clusters obtained from the K-Means algorithm on 12 features of the alerts. The first method estimates the volume of the attacks, and the second estimates the variety of the attacks.
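The first clustering method can be sketched in a few lines. This is a minimal illustration rather than the code used for Figure 1: it assumes alerts are available as (timestamp in seconds, remote IP) pairs, and it assumes a cluster stays open for 10 minutes after its first alert, which is one of several reasonable readings of "within a 10 minute period". The function name is hypothetical.

```python
WINDOW_SECONDS = 10 * 60  # the 10-minute period described in the text


def count_alert_clusters(alerts, window=WINDOW_SECONDS):
    """Count alert clusters per the volume measure.

    `alerts` is an iterable of (timestamp_seconds, remote_ip) pairs.
    Assumption: alerts from one remote IP that arrive within `window`
    seconds of the first alert of its open cluster count as one event.
    """
    cluster_start = {}  # remote_ip -> start time of its currently open cluster
    clusters = 0
    for timestamp, remote_ip in sorted(alerts):
        start = cluster_start.get(remote_ip)
        if start is None or timestamp - start > window:
            # First alert from this address, or the window has expired.
            cluster_start[remote_ip] = timestamp
            clusters += 1
    return clusters
```

Summing this count over the alerts of each week would give a weekly volume series of the kind plotted in Figure 1.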

It can be seen that the volume of attacks increased when the system went down and came back up; in week 31 this effect persisted for several weeks. Deliberate manipulations of packets in week 41 and more aggressively in week 51, as we will describe in the next section, increased the variety of attacks. This suggests what a honeypot can do to encourage attacks. On the other hand, it suggests that keeping an existing long-used IP address and responding normally to packets may entail reduced numbers of attacks.

Figure 1: Attack volume and variety on a honeypot over 55 weeks.

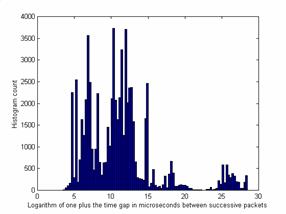

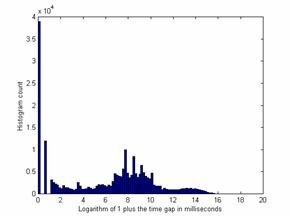

Our data showed frequent automated attacks, as can be seen in the histogram of interarrival times of alerts from the same remote IP address (Figure 2). The sharp peaks likely represent programmed timings in multistep automated attacks, because manual attacks over such a long period would show a smoother, more Gaussian distribution. Going from left to right, the peaks correspond to delays of 0 milliseconds, 1 millisecond, 9.2 milliseconds, 40.4 milliseconds, 2.4 seconds, 4.4 seconds, 8.1 seconds, and 27 seconds. A delay of 24 hours corresponds to a logarithm of 18.2, but we saw no peak there. The interarrival time of unrelated alerts appears as a normal distribution centered at 13.5 (corresponding to a delay of 20 minutes) and resembles a Poisson process. This suggests that many attacks can be foiled by an unexpected response from the victim, since automated attacks are usually implemented without conditionals and thus are "brittle".
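A histogram of this kind can be computed roughly as follows. The sketch assumes alerts arrive as (timestamp in milliseconds, remote IP) pairs and that the horizontal axis is the natural logarithm of the gap in milliseconds, which is consistent with 24 hours mapping to a value near 18.2. Using log(1 + gap) so that zero-millisecond gaps can be plotted is our assumption, as are the bin width and the function names.

```python
import math
from collections import Counter, defaultdict


def log_gap(gap_ms):
    """Natural log of (1 + gap in ms); the +1 keeps 0 ms gaps representable."""
    return math.log1p(gap_ms)


def log_interarrival_histogram(alerts, bin_width=0.1):
    """Histogram of log gaps between successive alerts from the same IP.

    `alerts` is an iterable of (timestamp_ms, remote_ip) pairs.
    Returns a Counter mapping bin index -> count, where a bin index b
    covers log gaps in [b * bin_width, (b + 1) * bin_width).
    """
    times_by_ip = defaultdict(list)
    for timestamp_ms, remote_ip in alerts:
        times_by_ip[remote_ip].append(timestamp_ms)

    histogram = Counter()
    for times in times_by_ip.values():
        times.sort()
        # Pair each alert with the next one from the same address.
        for earlier, later in zip(times, times[1:]):
            bin_index = math.floor(log_gap(later - earlier) / bin_width)
            histogram[bin_index] += 1
    return histogram
```

Sharp, narrow peaks in the resulting counts would correspond to fixed delays programmed into attack tools, while a broad hump would be expected from unrelated arrivals.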

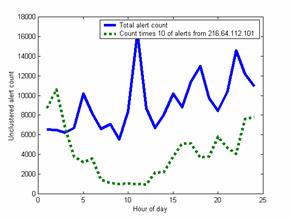

Figure 3 shows the distribution of the unclustered count of attacks as a function of hour of the day in Pacific Standard Time with 0 being midnight. It appears that most of the attackers are on a North American West-Coast timeline, judging by the low counts in the night and high counts in the morning and later evening. Some data for particular external addresses showed clearer trends, such as that for 216.64.112.101 whose event times suggest eastern Asia although it is assigned to an Internet service provider in North Carolina. We also observed a 15% decrease in alerts on Fridays, Saturdays, and Sundays. These results suggest particular days and times we should have a computer online to either attract or discourage attacks depending on our goals.

Figure 2: Inter-alert duration histogram for a honeypot, for gaps measured in milliseconds and for 55 weeks of observation, and for alerts caused by the same external IP address.

Figure 3: Count of unclustered alerts per time of day in 55 weeks.

V. Live-attacker experiments

Based on the data, we modified our honeypot to accomplish deception using the Snort Inline product to drop and modify packets. Precedents are the work of [12] and [13] with "low-interaction" honeypots that simulate the external appearance of normal machines but do not permit logins. However, more realistic attacker responses should be elicited from honeypots like ours that permit full interaction, since low-interaction honeypots are easy to detect due to the limited capabilities of their protocols.

A. Setup

Most of the setup was done in our earlier work [2]. The testbed uses virtualization software VMWare to run two honeypots on one physical machine while the gateway and remote alert/log servers are hosted in two other machines. The virtual machines are a Windows 2000 Server and a Windows XP machine with the latest patches. The router machine serves as the gateway and channels traffic from the Internet to the honeynet and vice versa. Data capture is done on the router machine by running the Snort intrusion-detection system. Alerts are logged locally and also sent to a remote Postgres database that is inaccessible from the Internet.

We took precautions that our honeypots not be used by the intruder as a launch pad to spread further attacks by regularly monitoring the states and processes on the honeypots. We also blocked traffic by our honeypots that seemed to be probing other networks. These were network messages destined for external addresses that varied only in the last octet and occurred within a second.
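The probing pattern described above can be recognized with a simple sliding window. The following is a sketch under stated assumptions, not the filter we deployed: packets are given as (timestamp in seconds, destination IPv4 string) pairs, and the threshold of three distinct targets is a hypothetical parameter chosen only for illustration.

```python
from collections import defaultdict


def find_probe_prefixes(packets, window=1.0, min_targets=3):
    """Return the three-octet prefixes that look like outbound scanning.

    `packets` is an iterable of (timestamp_seconds, dst_ip) pairs for
    traffic leaving the honeypots. A prefix is flagged when at least
    `min_targets` destinations differing only in the last octet are
    contacted within `window` seconds.
    """
    hits_by_prefix = defaultdict(list)
    for timestamp, dst_ip in packets:
        prefix, _, last_octet = dst_ip.rpartition(".")
        hits_by_prefix[prefix].append((timestamp, last_octet))

    flagged = set()
    for prefix, hits in hits_by_prefix.items():
        hits.sort()
        start = 0
        for end in range(len(hits)):
            # Shrink the window until it spans at most `window` seconds.
            while hits[end][0] - hits[start][0] > window:
                start += 1
            targets = {octet for _, octet in hits[start:end + 1]}
            if len(targets) >= min_targets:
                flagged.add(prefix)
                break
    return flagged
```

Flagged prefixes could then be turned into blocking rules for outbound traffic on the gateway.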

B. Deception rule development

Snort Inline runs a simple intrusion-prevention system instead of the regular Snort intrusion-detection mode. Inline mode requires Iptables, a fast prefilter for Snort much like a firewall, and Libnet, a set of high-level APIs that allow Snort to construct and inject network packets. We also needed to write our own Snort rules for packet manipulations.

As an example, say we want to intercept all inbound ICMP traffic through Iptables so that we can drop these packets. We would need a rule in the default filter table in Iptables as:

iptables -I INPUT -p icmp -j QUEUE

This inserts the rule at the top of the rule set in the INPUT rule chain, giving it the highest priority on that chain. The rule intercepts incoming ICMP packets and passes them (jumps) to a Snort-accessible object called the QUEUE. The corresponding Snort rule would be:

drop icmp $EXTERNAL_NET any -> $HOME_NET any (…)

To set up the configuration to block outgoing ICMP packets, the rules for Iptables and Snort would be:

iptables -I OUTPUT -p icmp -j QUEUE

drop icmp $HOME_NET any -> $EXTERNAL_NET any (…)

Note that we now apply the rule on the OUTPUT rule chain in Iptables and the direction is reversed in the Snort rule.

Iptables also has a Nat table for network address translation and a Mangle table for low-level packet modification such as that of the Type of Service field. For our purposes, we only needed to configure the filter table for traffic filtering.

Due to the peculiarity of our testbed, traffic that is routed to our honeynet will not pass through the INPUT or OUTPUT chains but only through the FORWARD chain. So if we want to drop outbound probes for port 135 on external IP addresses, the respective rules for Iptables and Snort would be:

iptables -I FORWARD -p tcp --dport 135 -s X.X.X.X/24 -j QUEUE

drop tcp $HOME_NET any -> $EXTERNAL_NET 135 (…)

Our Snort rules also use actions other than "drop", as in this example of an "alert" rule with the "replace" option:

alert tcp $HOME_NET 21 -> $EXTERNAL_NET any (content:"530 "; replace:"331 "; isdataat:1, relative; content:"cannot log in"; replace:"logged in.   ";)

This rule acts on FTP (File Transfer Protocol) responses from our honeynet to an FTP client on the Internet. The first "replace" changes the FTP return code 530, which accompanies the message "User X cannot log in", to 331, the code for "User name okay, need password". The second "replace" changes the message text "cannot log in" to "logged in.", the text associated with code 230. The trailing spaces in the second "replace" are needed because Snort requires the replacement to have exactly the same number of characters as the content it substitutes. The option "isdataat" takes care of the "User X" string that appears after the return code but before the string "cannot log in". So there are two deceptions here, one with the FTP return code and one with the return message. The two deceptions do not need to be synchronized, and we leave them inconsistent in the hope of confusing the intruder.
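The same-length constraint is easy to get wrong when writing such rules, so it can help to check a rule's substitutions offline first. The sketch below is only a simplified stand-in for what Snort Inline does: it ignores "isdataat" and Snort's content-matching order, and the function name, the substitution list, and the sample response are hypothetical. The replacement text is padded with trailing spaces to the 13 characters of "cannot log in".

```python
def apply_replacements(payload, substitutions):
    """Apply (content, replacement) byte pairs in order to a payload.

    Mirrors the constraint that a replacement must be exactly as long
    as the content it overwrites, so the packet length never changes.
    """
    for content, replacement in substitutions:
        if len(content) != len(replacement):
            raise ValueError(
                f"replacement {replacement!r} must be {len(content)} bytes long"
            )
        # Rewrite only the first occurrence, as one rule option would.
        payload = payload.replace(content, replacement, 1)
    return payload


# Hypothetical encoding of the two deceptions discussed in the text.
FTP_DECEPTION = [
    (b"530 ", b"331 "),
    (b"cannot log in", b"logged in.   "),
]

sample = b"530 User anonymous cannot log in.\r\n"
deceived = apply_replacements(sample, FTP_DECEPTION)
```

Here `deceived` has the same length as `sample`, begins with the return code 331, and carries a message claiming the user is logged in.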

We also ran a separate Snort process in intrusion-detection mode on our router to monitor what happened with packet manipulations. We also set up a separate database to receive alerts from just Snort Inline. For more complete forensic analysis, we also ran a full packet capture using Tcpdump on all the network traffic arriving at the router machine.

C. Experiments

As we had a single honeynet, we successively used it for each experiment, restoring the initial state each time (starting in week 50 of Figure 1).

· For Experiment 1 (the control experiment), we set up the virtual honeypot containing the Windows 2000 Advanced Server without any service packs and patches. We collected 36 hours of intrusion data in each of several runs.

· For Experiment 2, we did the same runs using Windows with the most recent service packs and patches.

· For Experiment 3, we let the setup stabilize over a period of one week after it was first put online, and then measured traffic.

· For Experiment 4, we dropped ICMP traffic going to and coming from our honeynet.

· For Experiment 5, we used the rules from Experiment 4 but added rules to replace certain keywords in protocol messages with deceptive ones as described above. As a subexperiment, we also examined the effects of deceptive responses on file-transfer attacks.

We measured Snort alert counts separately for each experiment. We omitted "Destination Unreachable", "Port Unreachable", and "ICMP redirect" alerts because they concerned reconnaissance alone; we omitted login-failure alerts because they had high numbers whose counts were rather arbitrary, apparently due to automated password-guessing tools. The omitted intrusion types accounted for 98.7% of the raw counts for one run in Experiment 1 where the honeypot was compromised and used by the successful intruder to probe other networks.

Our results show that intruders are definitely affected by our unexpected responses to their exploits (Figure 4). We plot intrusion counts (left scale) and variety, or number of distinct alerts (right scale), across the experiments ("Level 0 (unpatched)" is Experiment 1, "Level 0 (patched)" is Experiment 2, etc.). Experiment 3 does the best job of keeping the intrusion counts stable and low. In Experiments 4 and 5 the intrusion count rises, although not as much in Experiment 5 due to a higher standard deviation. At the same time, the intrusion variety decreases but at a slower rate. This suggests that packet manipulations can deal with intrusion types that patched systems cannot handle.

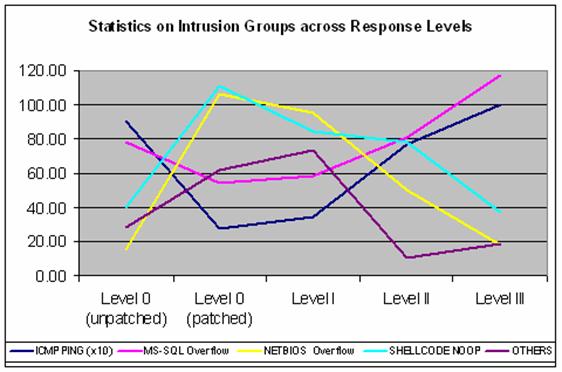

Figure 5 shows how the type of attacks changed over the experiments. Attacks involving NETBIOS overflows and SHELLCODE NOPs decreased, but ICMP PING and MS-SQL attempted overflows increased. It appears we are forcing attackers to use less-effective alternate methods, though they are still trying.

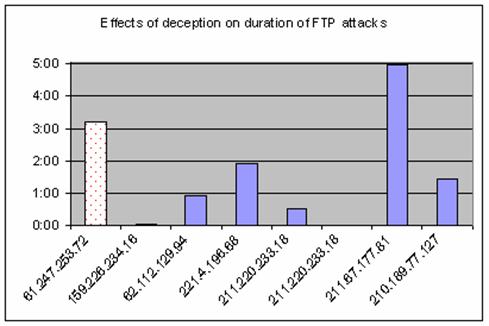

We also examined the effects of deception in the file-transfer utility FTP on the duration of login attack attempts. We charted the durations of FTP intrusions by eight intruder IP addresses (Figure 6). The first, shown with the dotted pattern fill, indicates a typical FTP intrusion on an Experiment 3 system; the remaining seven occurred on an Experiment 5 system. Six of these seven intrusions ended within two hours; the one where our deception did not dissuade the intruder ended after just under five hours. This latter attack suggests an intruder who uses automated tools to scan and conduct attacks; they probably let the tool run without much monitoring and returned some five hours later to check on their yield. In none of these attempts did the intruder learn anything about the password. For instance, the first FTP intrusion unsuccessfully tried about three hours of password combinations.

VI. Last-packet analysis

Currently we are investigating further what clues attackers are responding to by analyzing the last few packets sent during a session with an attacker. The idea is that what the attacker saw just before they went away could be something that encouraged them to go away, and thus a good basis for a deception. This approach is similar to that of early detection of worms from small clues [14] and inference of attack intent from initial clues [15].
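As a rough illustration of the data preparation this analysis needs, the last few packets of each session can be collected with bounded queues. This is a sketch only: the tuple layout, the session key (for example a remote address and port pair), and the default of three packets are assumptions made for the example, not details of our procedure.

```python
from collections import defaultdict, deque


def last_packets(packets, k=3):
    """Return the final `k` packet summaries of every session.

    `packets` is an iterable of (timestamp, session_key, summary) tuples,
    where `session_key` is any hashable identifier for a session.
    """
    # Each deque silently discards older entries once it holds k items.
    tails = defaultdict(lambda: deque(maxlen=k))
    for _, session_key, summary in sorted(packets, key=lambda p: p[0]):
        tails[session_key].append(summary)
    return {key: list(tail) for key, tail in tails.items()}
```

Tallying which summaries occur most often among these tails would then point to candidate clues that precede an attacker's departure.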