Multi-Agent Simulation of Human Behavior in Naval Air Defense

Lt. Sharif H. Calfee, U.S. Navy, and Neil C. Rowe

Institute for Modeling, Virtual Environments, and Simulation (MOVES)

and Computer Science Department

U.S. Naval Postgraduate School

833 Dyer Road, Monterey, CA 93943

shcalfee@nps.edu, ncrowe@nps.edu

ABSTRACT

The AEGIS Cruiser Air-Defense Simulation is a program that models the operations of a Combat Information Center team performing air defense for a U.S. Navy battle group. It uses multi-agent system technology and is implemented in Java. Conceived primarily to assist personnel in air-defense training and doctrine formulation, the simulation provides insight into the factors (skills, experience, fatigue, aircraft numbers, weather, etc.) that influence performance, especially under intense or stressful situations, and into the task bottlenecks. It simulates air contacts (aircraft and missiles) as well as the actions and mental processes of the watchstanders. All simulated events are logged, to permit performance analysis and reconstruction for post-scenario training. Validation of the simulation was done with the help of expert practitioners of air defense at the AEGIS Training & Readiness Center (ATRC), San Diego, California, USA.

This paper appeared in the Naval Engineers' Journal, 116 (4), Fall 2004, 53-64.

Introduction

Air warfare is the most rapid, intense, and devastating type of warfare that the U.S. Navy trains for, and is a major focus of the operations of ships (Mairorano, Carr, and Bender 1996). Due to the fast pace, uncertainty, and dangers of air warfare, the air-defense team of "watchstanders" must train extensively in the many skills needed for these operations. The needed skills involve search, detection, and classification to determine and maintain identification of all aircraft and surface vessels within the operational area of the ship's "battle group" (or coordinated group of ships). However, continued advances in speed, maneuverability, and accuracy of anti-ship missiles have reached the point where, despite defensive countermeasures, human watchstanders can be unable to communicate, coordinate, and react quickly and correctly. Two incidents in the 1980s highlighted the need for improvements: The USS Stark was attacked by two Exocet anti-ship missiles and was nearly sunk, and the USS Vincennes mistakenly shot down a civilian Iranian airliner during a surface battle with Iranian naval forces. Air defense does require human judgment because of widely varying geographical, environmental, and tactical conditions, and thus would be dangerous to fully automate despite interesting attempts (Noh and Gmytrasiewicz 1998).

This paper reports on the AEGIS Cruiser Air-Defense Commander (ADC) Simulation, built to model the performance of U.S. Navy watchstanders engaged in air defense (Calfee 2003). In a naval battle group, which consists of the aircraft carrier and six to eight supporting warships, the Air-Defense Commander is responsible for the coordination of the force's resources (ships, aircraft) and efforts to conduct surveillance, detection, identification, intercept, and engagement of aircraft within the operational area with the primary objective of defending the aircraft carrier (or other high-value unit). While other work has modeled the threats in air defense (Barcio et al 1995; Bloeman and Witberg 2000; Choi and Wijesekera 2000; Delaney 2001), the ADC Simulation appears to be the first to carefully model the watchstanders as well. On Navy ships, air defense is done in the Combat Information Center (CIC), which contains consoles for activating weapon systems, configuring sensor systems, displaying contact tracks, and communicating with other ships and aircraft. Methodology for modeling such teams has become increasingly sophisticated in recent years (Weaver et al 1995). Figure 1 gives the organization of the 11-person CIC air-defense team on the AEGIS cruiser serving as the Air-Defense Commander for the battle group forces.

Figure 1: CIC air-defense team organization.

The air-defense watchstanders are:

• The Force Tactical Action Officer (FORCE TAO) who controls air defense for the battle group and is responsible for major decisions such as contact classifications and weapons releases. There is only one FORCE TAO and they are located on the battle group ship. The FORCE TAO works directly for the ADC who in turn reports to the Battle Group Commander.

• The Force Anti-Air Warfare Coordinator (FORCE AAWC) who coordinates the movement and assignment of friendly aircraft and orders the weapons employment by ships in defense of the battle group. The FORCE AAWC works directly for the FORCE TAO.

• The Ship Anti-Air Warfare Coordinator (SHIP AAWC) who directs aircraft detection and classification for a single ship in support of the air-defense effort within its airspace and manages the identification process. The SHIP AAWC coordinates directly with the FORCE AAWC.

• The Ship Tactical Action Officer (SHIP TAO) who leads the CIC watch-team air-defense effort for a single ship and coordinates directly with the FORCE TAO.

• The Missile Systems Supervisor (MSS) who fires (under orders) the ship's surface-to-air missiles and the self-defense Close-In Weapon System.

• The Red Crown watchstander (RC) who monitors friendly aircraft for the battle group. The RC coordinates directly with the FORCE TAO and FORCE AAWC.

• The Electronic Warfare Control Officer (EWCO or EWS) who is responsible for the operation of the electronic emissions detection equipment used to detect and classify aircraft.

• The Identification Supervisor (IDS) who does Identification Friend or Foe (IFF) challenges on unknown aircraft and, when directed, initiates query or warning procedures for contacts.

• The Radar Systems Controller (RSC) who operates the SPY-1A/B radar systems, the primary means by which aircraft are detected and tracked.

• The Tactical Information Coordinator (TIC) who operates the Tactical Digital Information Links which communicate tactical data among the ships and aircraft in the battle group.

• The Combat Systems Coordinator (CSC) who monitors the status of the combat systems that support the CIC and repairs them as necessary.

Related Work

The Tactical Decision-Making Under Stress study explored the causes of the USS Vincennes incident (Morrison et al 1996). Some problems were identified with short-term memory limitations, such as forgetting and confusing track numbers, forgetting and confusing kinematic data, and confusing tracks of contacts. Other problems were related to decision bias, such as carrying initial threat assessment throughout the scenario regardless of new information, and assessing a contact from past experiences. This work also suggested how to improve command center display consoles. For realism, the ADC Simulation approximates some of these cognitive errors.

Other work examined the cognitive aspects of the threat-assessment process used by naval air-defense officers during battle group operations (Liebhaber and Smith 2000). This indicated that watchstanders had possible-track templates, derived from a set of twenty-two identifying factors, which they used to classify contacts and calculate threat assessments. Some of the most promising factors were electromagnetic emissions, course, speed, altitude, point of origin, flight profile, intelligence information, and Identification Friend or Foe (IFF) mode. This research was very helpful in development of the ADC Simulation.

Situational awareness was also identified as a primary concern during task analysis for the Joint Maritime Command Information System (Eddy, Kribs, and Cohen 1999). It was affected by (1) capabilities, (2) training and experience, (3) preconceptions and objectives, and (4) ongoing task workload. "As task workload and stress increase, decision-makers will often lose a 'Big Picture' awareness and focus on smaller elements." This was also incorporated into the ADC Simulation.

Computer games have simulated naval combat. Strike Fleet in 1987 was an early video game that simulated naval battle group operations. Fifth Fleet was introduced in 1994 and immediately set a standard for the accurate depiction of naval operations and realistic game play. But the Harpoon series games (Strategic Simulations, Inc.) have been the most popular naval games, and they have spanned nearly fourteen years from Harpoon 1 published in 1989 to Harpoon 3 in 2003. The game engines were based on a realistic war-gaming and operational analysis model designed by the creator, Larry Bond, a former naval analyst and author. They featured very accurate representations of platforms, weather phenomena, weapon systems, geography, friendly and opponent tactics, as well as believable scenarios and campaigns based on current and future political and/or actual conflicts. Some military and military-affiliated organizations have used the game as part of their training, including the United States Air Force Command and Staff College, U.S. Naval Institute, Australian Department of Defense, and the Brazilian Naval War College. However, these games have not been used much by the military because their primary purpose is entertainment. They lack key features needed such as psychological models of personnel and comprehensive logging of events to help formulate lessons learned.

Several systems have been developed to aid air-defense personnel in their duties. The Area Air-Defense Commander Battle Management System was developed for more effective coordination of air-defense planning and execution for multi-service (i.e. Army, Air Force, Navy, and Marines) and international operations (Delaney 2001). It develops and executes a theater-wide air-defense plan providing an integrated view of the battlespace. Its focus is threats, not the personnel responding to them.

The Multi-Modal Watch Station (MMWS) program developed specialized watchstation consoles with improved human-computer interface designs to help watch teams during battle-group air defense and land-attack operations (Osga et al 2001). The MMWS consoles were designed to increase usability and learnability and decrease the potential for information overload and errors. The researchers conducted extensive interviews and console evaluations with air-defense subject-matter experts. The MMWS consoles corrected interface problems in the current AEGIS CIC consoles which caused errors, information overload, and loss of situational awareness. These consoles reduced the size of the air-defense team by two or three people while increasing performance.

The Battle Force Tactical Trainer (BFTT) System was designed for the fleet-wide training of naval units by providing each ship with a system using the existing CIC console architecture (Federation of American Scientists 2003). High-fidelity scenarios can inject actual signal information into the ship combat systems to simulate reality. The BFTT system can simulate an entire fleet of ships and their staffs and support war-gaming exercises.

Although the ADC Simulation builds on previous research, it has several unique features:

• It focuses on the decision-making and other mental processes of the watchstanders.

• It explores the influence that a watchstander's proficiency has on the performance of the air-defense team.

• It allows the user to configure a wide range of attributes to determine their effects on performance.

• It allows the user to examine the assumptions of the simulated watchstanders and compare them to the truth.

• It provides views of the simulation on a variety of time scales.

• Its multi-agent system that simulates the watchstanders provides for a realistic reproduction of human behaviors.

• It logs several kinds of data.

• It represents a key piece for a naval wargaming simulation.

Design of the Simulation

Design of the ADC Simulation was done in six steps. First, using the Human-Computer Interface User-Centered Design (UCD) Process, comprehensive interviews were conducted with five experienced air-defense subject-matter experts from the AEGIS Training and Readiness Center Detachment in San Diego, California, and the Fleet Technical Support Center Pacific, to collect data about battle-group air defense. These personnel possessed five to fifteen years of naval air-defense experience, and were considered experts by their peers and the U.S. Navy. Second, the materials of the simulation were determined from this data: agents, objects, and attributes. Third, the relationships between agents and between agents and objects were explicitly defined. Fourth, the tasks and actions for each agent and object were defined. Fifth, the simulation was built from this information, including the necessary control actions (see Table 1). Sixth, the simulation was debugged and some minor adjustments were made.

Table 1: ADC Simulation user actions.

| TASK # | TASK NAME |
| --- | --- |
| 1 | Open scenario menu |
| 2 | Open watchstander attributes menu |
| 3 | Open CIC equipment setup menu |
| 4 | Open scenario doctrine setup menu |
| 5 | Open scenario external attributes menu |
| 6 | Open simulation logs menu |
| 7 | Change the maximum time it takes a watchstander to complete a task |
| 8 | Display data about a contact |
| 9 | Display data about the FORCE TAO watchstander |
| 10 | Open a contact's pop-up options window |
| 11 | Open the FORCE TAO pop-up options window |
| 12 | Increase the time compression |
| 13 | Pause the simulation |
| 14 | Set the situation-assessment skill level to Expert for the FORCE TAO |
| 15 | Set the fatigue level to Exhausted for the RSC |
| 16 | Set the SPY-1B radar equipment readiness level to Non-Operational |
| 17 | Set the ADC doctrine query range to 30 nm and warning range to 20 nm |
| 18 | Set the scenario threat level to Red |
| 19 | Open the scenario event log |
| 20 | Open the SLQ-32 system status log |
| 21 | Set the watchstander fatigue levels to (0.5, 0.7, 0.9) |
| 22 | Change the maximum time for the FORCE TAO watchstander to complete a task |
| 23 | Change the speed of the hostile air contact to 500 kts |
| 24 | Change the FORCE AAWC experience attribute to Expert |
| 25 | Change the link equipment status to Partially Degraded |

The ADC Simulation Program

The Simulation Interface

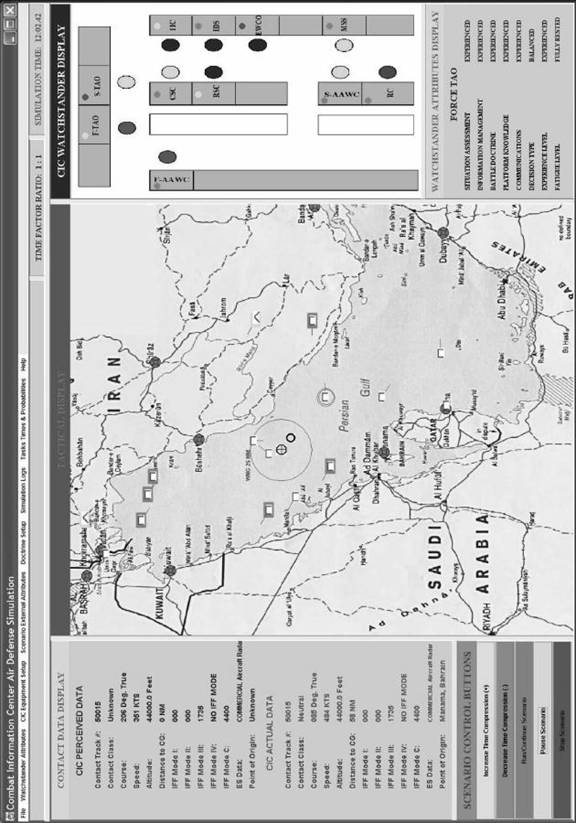

The ADC Simulation is implemented in Java. Figure 2 shows an example view of the graphical user interface. The center of the screen shows the locations and statuses of the aircraft and missile contacts. The left side shows detailed information about the contact currently in focus; the right side gives information about the watchstanders. The top of the screen and the lower left provide controls for the simulation.

The left side of the screen shows two sets of data about the currently selected contact: the actual data and the data perceived by the simulated CIC team. Buttons provide shortcuts for starting, stopping, pausing, or continuing a scenario. They also control the time compression, the ratio of simulation time to standard time. The right side of the screen gives a picture of the CIC team. Watchstander icons (the circles) can be selected to display associated attributes. Some watchstanders collect and assess sensor information; others make decisions; and others carry out defensive and offensive actions. Each watchstander has a Mental Activity Indicator that rates activity on the current task as high, medium, or low (indicated with colors), giving an indication of watchstander stress.

Figure 2: Example view of user interface to ADC Simulation.

Contacts

The aircraft or missile contact is the fundamental object in the simulation. It has a track number, point of origin, course, speed, altitude, radar cross-section, electronic signal emissions (based on radar type), and IFF (Identification Friend or Foe) mode. It also has analytic attributes: whether it has been detected by the CIC, whether it has been evaluated, the last time it was evaluated, whether it is approaching, and its threat status.

Contacts are created by the simulation as it runs. Friendly aircraft are generated by orders of the FORCE TAO or FORCE AAWC agents. Other aircraft are generated at random based on the attributes of Contact Density and Hostile Contact Level. Surface-to-air missile and anti-ship missile contacts are created by orders from either the CIC or hostile aircraft. In the simulation, contacts fly piecewise linear paths with landing and descent behavior at the ends. They may respond to queries and warnings from the CIC, and may retreat or alter their courses accordingly. They may also experience loss of their radar or IFF systems with a certain probability.
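The piecewise linear flight paths can be illustrated with a short sketch. It is not taken from the simulation: the class and method names are hypothetical, positions are assumed to be flat x/y coordinates in nautical miles, speed is assumed constant, and the landing and descent behavior is left out.

```java
/** Hypothetical sketch of a contact following a piecewise linear path; not the simulation's code. */
class PiecewiseLinearPath {

    /**
     * Returns {x, y} in nautical miles after flying for the given number of hours
     * at a constant speed (knots) along the waypoints, taken in order.
     * The waypoint array must contain at least one point.
     */
    static double[] positionAt(double[][] waypoints, double speedKnots, double hours) {
        // Distance still to travel, in nautical miles (never negative).
        double remaining = Math.max(0.0, speedKnots * hours);
        for (int i = 0; i + 1 < waypoints.length; i++) {
            double dx = waypoints[i + 1][0] - waypoints[i][0];
            double dy = waypoints[i + 1][1] - waypoints[i][1];
            double legLength = Math.hypot(dx, dy);
            if (legLength > 0 && remaining <= legLength) {
                // The contact is somewhere on this leg: interpolate linearly.
                double fraction = remaining / legLength;
                return new double[] { waypoints[i][0] + fraction * dx,
                                      waypoints[i][1] + fraction * dy };
            }
            remaining -= legLength;
        }
        // Past the final waypoint: the contact stays at the end of its path.
        double[] last = waypoints[waypoints.length - 1];
        return new double[] { last[0], last[1] };
    }

    public static void main(String[] args) {
        double[][] route = { {0, 0}, {30, 0}, {30, 40} };
        double[] p = positionAt(route, 300.0, 0.05); // 15 nm along the first leg
        System.out.println(p[0] + ", " + p[1]);
    }
}
```

A course change in response to a query or warning could then be modeled by replacing the remaining waypoints, though that is only one possible design.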

The Neutral aircraft fly directly between two points. The Friendly aircraft are fighters or support directly controlled by the CIC team. They depart and return from Friendly bases and carriers, and conduct visual intercept, identification, and possible engagement of other aircraft. The Hostile aircraft start in either Iran or Iraq, and have varied flight profiles including reconnaissance, low-altitude behavior, and approach and attack profiles.

Watchstander Agents

The simulated watchstanders were implemented as a multi-agent system where each is an agent (Ferber 1999) with a personality and attributes. These agents interact with the contacts and communicate with one another to coordinate tasks. This allows users to design and run scenarios representing real CIC personnel and the possible problems they could encounter.

Each action has a probability of success based on the skill attribute of the watchstander: Basic (zero to six months of experience), Experienced (six months to a year), and Expert (more than a year). The experts interviewed argued that a distinction should be made between skill and experience: skill affected the probability of success of an action, and experience affected both the time to complete it and the degree of confidence the agent had in its results. The experience levels were categorized as Newly Qualified, Experienced (10% faster), and Expert (20% faster). For watchstanders that evaluated contacts, an Evaluation Confidence attribute is increased for each contact. The initial value is 30 and is increased at a different rate for Newly Qualified, Experienced, and Expert levels (by 2, 4, and 6 respectively), to a maximum of 85 for Newly Qualified, 90 for Experienced, and 95 for Expert.

The fatigue attribute controls the readiness of the watchstander. Based on the interviews, three levels were used: Fully Rested (having had a minimum of five hours of rest without having performed heavy physical labor or stood any watch), Tired (a minimum of three hours of sleep or at most six hours without rest in a fairly demanding environment), and Exhausted (less than three hours of sleep, or having performed heavy physical labor, or having performed duties over six hours in a demanding environment). Fatigue decreased the success probability for an action and increased the length of time to do it. Many of the interviewed experts also argued for a "decision-maker" attribute to reflect the differences among the watchstanders in how long they took to reach a decision. The values proposed were Cautious (for a maximum of 30 seconds), Balanced (20 seconds), and Aggressive (10 seconds), with a uniform distribution of times up to these maxima.
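The experience and decision-maker attributes lend themselves to a small illustration. The sketch below is not the simulation's code: all names are hypothetical, "10% faster" is assumed to mean multiplying a baseline task time by 0.90, and decision times are assumed to be drawn uniformly from zero up to the stated maximum. Fatigue is omitted because the paper gives no numeric effect for it.

```java
import java.util.Random;

/** Hypothetical sketch of the timing attributes of a watchstander agent. */
class WatchstanderTiming {

    /** Experience levels; "10% faster" is assumed to mean a 0.90 multiplier on task time. */
    enum Experience {
        NEWLY_QUALIFIED(1.00), EXPERIENCED(0.90), EXPERT(0.80);

        final double timeFactor;
        Experience(double timeFactor) { this.timeFactor = timeFactor; }
    }

    /** Decision-maker types with the maximum decision times (seconds) proposed by the experts. */
    enum DecisionMaker {
        CAUTIOUS(30.0), BALANCED(20.0), AGGRESSIVE(10.0);

        final double maxSeconds;
        DecisionMaker(double maxSeconds) { this.maxSeconds = maxSeconds; }
    }

    /** Scales a baseline task duration by the experience factor. */
    static double taskSeconds(double baselineSeconds, Experience experience) {
        return baselineSeconds * experience.timeFactor;
    }

    /** Draws a decision time uniformly from [0, maxSeconds) for the given type. */
    static double decisionSeconds(DecisionMaker type, Random rng) {
        return rng.nextDouble() * type.maxSeconds;
    }

    public static void main(String[] args) {
        Random rng = new Random(42); // fixed seed so that runs are repeatable
        System.out.println(taskSeconds(60.0, Experience.EXPERT));
        System.out.println(decisionSeconds(DecisionMaker.CAUTIOUS, rng));
    }
}
```

Passing the random generator in as a parameter keeps scenario runs reproducible, which matters when the same scenario is repeated to compare attribute settings.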

Equipment

The equipment used by the watchstanders was modeled as separate software objects. The performance of seven key items of equipment was simplified (to avoid the need to use classified information) while maintaining realistic qualitative performance. Equipment has four readiness levels with associated probabilities of successful operation: Fully Operational (1.0), Partially Degraded (0.75), Highly Degraded (0.50), and Non-Operational (0.0). In addition, if the user has activated the Scenario Equipment Failure Option, any of the systems could randomly fail during the scenario, requiring the watchstander agents to troubleshoot them until successful.
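As an illustration of the readiness levels, the following sketch treats each use of a piece of equipment as a single random trial with the stated success probability. The class and method names are hypothetical, and the random-failure option is not modeled.

```java
import java.util.Random;

/** Hypothetical sketch of the four equipment readiness levels; not the simulation's code. */
class EquipmentReadiness {

    /** Readiness levels with the success probabilities given in the text. */
    enum Readiness {
        FULLY_OPERATIONAL(1.0), PARTIALLY_DEGRADED(0.75), HIGHLY_DEGRADED(0.50), NON_OPERATIONAL(0.0);

        final double successProbability;
        Readiness(double successProbability) { this.successProbability = successProbability; }
    }

    /** Returns true if one use of the equipment succeeds (a single Bernoulli trial). */
    static boolean operate(Readiness readiness, Random rng) {
        // nextDouble() lies in [0, 1), so probability 1.0 always succeeds and 0.0 never does.
        return rng.nextDouble() < readiness.successProbability;
    }

    public static void main(String[] args) {
        Random rng = new Random(7);
        int trials = 10_000;
        int successes = 0;
        for (int i = 0; i < trials; i++) {
            if (operate(Readiness.PARTIALLY_DEGRADED, rng)) {
                successes++;
            }
        }
        System.out.println("Observed success rate: " + (double) successes / trials);
    }
}
```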

To model the SPY-1B radar system, receiver operating characteristics from Swerling II statistics were used (Alvarez-Vaquero 1996). Data was obtained from the AEGIS SPY-1B Radar Sphere Calibration Test Procedure of the Naval Sea Systems Command. This gave formulas for the carrier-to-noise ratio as a function of the size of radar cross-section of a contact and for the probability of detection as a function of the carrier-to-noise ratio.

The SLQ-32 system detects electronic signals emitted by aircraft and shipboard radar systems. The simulation uses it to distinguish Iraqi fighter and patrol aircraft, Iranian fighter and patrol aircraft, commercial aircraft, friendly fighters, friendly support aircraft, friendly missiles, and hostile fire-control radar (indicating either a hostile aircraft or hostile missile). The Identification Friend or Foe (IFF) System recognizes friendly and neutral aircraft in five categories or modes. A simplified IFF model was created to achieve qualitatively realistic performance.

Link 11 and Link 16, also known as Tactical Digital Information Link (TADIL) A and TADIL J respectively, are the main way that U.S. and Allied military forces rapidly disseminate information about aircraft and ship contacts in the operational area. These data links create a Common Tactical/Operational Picture, which enhances the military's ability to maintain a continuous situational awareness about the battlespace. Their operations in the simulation were simplified so that only contacts more than seventy nautical miles away would be evaluated. This capability modeled standard radio communications.

In the simulation, surface-to-air missiles were simulated with a 0.70 probability of intercepting their target and a range of eighty nautical miles. To maintain realism, only two missiles can be launched against a target at a time; if they fail, two additional missiles can be fired. The Close-In Weapon System (Phalanx) is a twenty-millimeter shipboard self-defense system that contains its own radar and fire control system. In the simulation, it has a range of one nautical mile and a 0.50 probability of hitting its target.
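To give a feel for what these numbers imply, the sketch below computes the chance that at least one missile in a salvo intercepts its target. It assumes that each missile intercepts independently of the others, which the paper does not state; the class and method names are hypothetical.

```java
/** Hypothetical sketch; assumes each missile intercepts independently of the others. */
class EngagementOdds {

    static final double SAM_INTERCEPT_PROBABILITY = 0.70;
    static final int MISSILES_PER_SALVO = 2;

    /** Probability that at least one of the given number of independent shots hits. */
    static double atLeastOneHit(double perShotProbability, int shots) {
        return 1.0 - Math.pow(1.0 - perShotProbability, shots);
    }

    public static void main(String[] args) {
        double firstSalvo = atLeastOneHit(SAM_INTERCEPT_PROBABILITY, MISSILES_PER_SALVO);
        double bothSalvos = atLeastOneHit(SAM_INTERCEPT_PROBABILITY, 2 * MISSILES_PER_SALVO);
        System.out.printf("First salvo of two: %.4f%n", firstSalvo);
        System.out.printf("Both salvos (four missiles): %.4f%n", bothSalvos);
    }
}
```

Under the independence assumption, a two-missile salvo intercepts with probability 1 - 0.3^2 = 0.91, and firing the second salvo after a miss raises the overall figure to 1 - 0.3^4 = 0.9919.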

Environment and Doctrine Attributes

The simulation handles three options for the Environment attribute: clear weather, heavy rain, and heavy clutter. Their primary effect is on detection and communications systems; for instance, heavy clutter reduces the probability of detection by 10%. The Contact Density attribute controls the number of contacts (low, medium, or high). The Scenario Threat Level attribute (white, yellow, or red) affects classification of aircraft contacts: The higher the threat level, the more likely the team is to classify aircraft as Suspect or Hostile, and the more common and aggressive are the hostile contacts.

An AEGIS Doctrine defines additional procedures and situational parameters for the CIC. The simulation implemented just the Auto-Special Doctrine, a weapons doctrine used to reduce reaction time and human errors when a fast-moving, anti-ship cruise missile contact is detected in very close proximity to the ship and poses an imminent danger. With Auto-Special Doctrine, once a detected contact meets the human-provided specifications, the ship's combat systems will automatically engage the hostile missile with surface-to-air missiles. Additional types of weapons and identification doctrine aid the watchstanders in the performance of their duties.

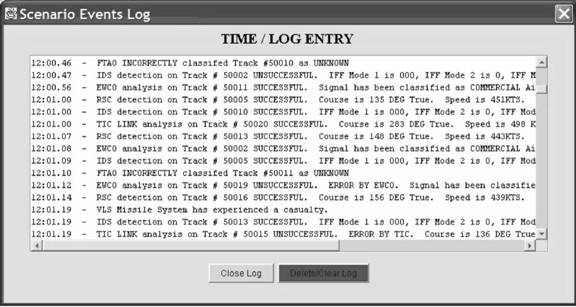

Simulation Logs

The simulation records every event that occurs within a scenario so that it can be later reviewed and analyzed. Five record logs are maintained: the Scenario Events Log, the Watchstander Decision History Log, the CIC Equipment Status Log, the Watchstander Performance Log, and the Parse/Analyzer Log. The Scenario Events Log maintains a high-level record of all events; an example is shown in Figure 3.

Figure 3: Example event log for the simulation.

From the logs, values are calculated for average initial detection time of aircraft, average initial classification time of aircraft, and average correct classification time of aircraft. For the individual watchstanders, values are calculated for the number of errors, number of total actions attempted, percentage of errors in attempted actions, average action durations, and average communications time.
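Two of the per-watchstander values can be sketched as follows. The record layout and all names here are assumptions made for illustration; the paper does not describe the actual log format.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of per-watchstander metrics; the record layout is an assumption. */
class PerformanceSummary {

    /** One attempted action taken from a performance log. */
    static final class ActionRecord {
        final double durationSeconds;
        final boolean error;

        ActionRecord(double durationSeconds, boolean error) {
            this.durationSeconds = durationSeconds;
            this.error = error;
        }
    }

    /** Percentage of attempted actions that ended in an error (0 if nothing was attempted). */
    static double errorPercentage(List<ActionRecord> actions) {
        if (actions.isEmpty()) {
            return 0.0;
        }
        int errors = 0;
        for (ActionRecord action : actions) {
            if (action.error) {
                errors++;
            }
        }
        return 100.0 * errors / actions.size();
    }

    /** Mean duration of the attempted actions in seconds (0 if nothing was attempted). */
    static double averageDurationSeconds(List<ActionRecord> actions) {
        if (actions.isEmpty()) {
            return 0.0;
        }
        double total = 0.0;
        for (ActionRecord action : actions) {
            total += action.durationSeconds;
        }
        return total / actions.size();
    }

    public static void main(String[] args) {
        List<ActionRecord> log = Arrays.asList(
                new ActionRecord(10.0, false), new ActionRecord(20.0, true),
                new ActionRecord(30.0, false), new ActionRecord(40.0, false));
        System.out.println(errorPercentage(log) + "% errors, "
                + averageDurationSeconds(log) + " s average");
    }
}
```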

Watchstander Procedures

Air defense can be divided into three phases: (1) contact detection and reporting (Red Crown, EWCO, IDS, RSC, and TIC watchstanders); (2) contact classification (FORCE TAO, FORCE AAWC, Ship TAO, and Ship AAWC); and (3) action response (with the FORCE TAO and/or FORCE AAWC giving orders to the Ship TAO, Ship AAWC, CSC, MSS, and IDS). The ADC Simulation agents follow this plan of action with defined paths of orders. The flow of information is shown in Figure 4 and the message handling is implemented as in Figure 5. There are input-message reception queues, message-priority processors, priority queues, action processors, and output-message transmission queues. Watchstander agents place order/request messages into another watchstander's input-message queue for processing. Fifteen kinds of reports and six kinds of orders are handled in the implementation.

Figure 4: Communications between the watchstander agents.

Figure 5: Message handling for watchstander agents.
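The queue stages named above (input queue, priority processor, priority queue, action processor, output queue) can be sketched for a single agent as follows. This is an illustration under stated assumptions, not the simulation's code: the class, the numeric priorities, the first-in first-out tie-breaking, and the acknowledgement text are all hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Comparator;
import java.util.PriorityQueue;
import java.util.Queue;

/** Hypothetical sketch of one agent's message pipeline; names and priorities are illustrative. */
class WatchstanderInbox {

    /** An order, request, or report. A lower priority number means more urgent (assumed convention). */
    static final class Message {
        final String sender;
        final String text;
        final int priority;
        final long sequence; // arrival order, used to break priority ties first-in, first-out

        Message(String sender, String text, int priority, long sequence) {
            this.sender = sender;
            this.text = text;
            this.priority = priority;
            this.sequence = sequence;
        }
    }

    private final Queue<Message> inputQueue = new ArrayDeque<>();
    private final PriorityQueue<Message> priorityQueue = new PriorityQueue<>(
            Comparator.comparingInt((Message m) -> m.priority)
                      .thenComparingLong(m -> m.sequence));
    private final Queue<String> outputQueue = new ArrayDeque<>();
    private long nextSequence = 0;

    /** Called by another agent to place a message in this agent's input queue. */
    void receive(String sender, String text, int priority) {
        inputQueue.add(new Message(sender, text, priority, nextSequence++));
    }

    /** Message-priority processor: moves everything received so far into the priority queue. */
    void prioritize() {
        while (!inputQueue.isEmpty()) {
            priorityQueue.add(inputQueue.remove());
        }
    }

    /** Action processor: handles the most urgent message and queues a reply; null if none is waiting. */
    String actOnNext() {
        Message next = priorityQueue.poll();
        if (next == null) {
            return null;
        }
        String reply = "Acknowledged " + next.sender + ": " + next.text;
        outputQueue.add(reply);
        return reply;
    }

    /** Removes and returns the oldest reply awaiting transmission, or null if there is none. */
    String transmitNext() {
        return outputQueue.poll();
    }

    public static void main(String[] args) {
        WatchstanderInbox forceTao = new WatchstanderInbox();
        forceTao.receive("RSC", "new air contact detected", 2);
        forceTao.receive("EWCO", "hostile fire-control radar detected", 1);
        forceTao.prioritize();
        System.out.println(forceTao.actOnNext()); // the EWCO report is handled first
        System.out.println(forceTao.actOnNext());
    }
}
```

Keeping the input queue separate from the priority queue means that senders never wait on the receiver's sorting step, which is one plausible reason for the staged design.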

Watchstanders must also prioritize contacts. Their criteria are newness of the contact, closeness to the ship, whether it is approaching, and duration since the last examination. But not all watchstander agents in the simulation rate these criteria the same way. Furthermore, watchstanders must periodically reevaluate the same contacts. To model the cognitive and decision-making aspects of contact classification, linear models were used that take a weighted sum of numeric factors: closing course, speed, altitude, signal, origin, and mode. Different weights are used for each Scenario Threat Level (White, Yellow, and Red). Four thresholds are used to distinguish the conclusions Hostile, Suspect, Neutral, Unknown, and Friendly based on the advice of the experts as well as their actual usage by operating naval forces (see Table 2). Initial contacts are usually Unknown because only partial information is available; as more data becomes available, the classification changes. The most difficult and infrequent classifications are Hostile and Friendly.

Table 2: Default classification thresholds.

| Contact Classification | Threat Level White Thresholds | Threat Level Yellow Thresholds | Threat Level Red Thresholds |
| --- | --- | --- | --- |
| Hostile | ≥ 600 | ≥ 500 | ≥ 450 |
| Suspect | 500 to 599 | 450 to 499 | 400 to 449 |
| Neutral | 400 to 499 | 300 to 449 | 200 to 399 |
| Unknown | -399 to 399 | -399 to 299 | -399 to 199 |
| Friendly | ≤ -400 | ≤ -400 | ≤ -400 |
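The linear classification model can be sketched in a few lines. The threshold values below are the lower bounds for Hostile, Suspect, and Neutral and the upper bound for Friendly from Table 2; everything else is an assumption for illustration, including the class and method names, and the caller must supply the weights because the paper does not list them.

```java
/** Hypothetical sketch of threshold-based contact classification; not the simulation's code. */
class ContactClassifier {

    enum Classification { HOSTILE, SUSPECT, NEUTRAL, UNKNOWN, FRIENDLY }

    /** The four thresholds per Scenario Threat Level, taken from Table 2. */
    enum ThreatLevel {
        WHITE(600, 500, 400, -400), YELLOW(500, 450, 300, -400), RED(450, 400, 200, -400);

        final double hostileMin;
        final double suspectMin;
        final double neutralMin;
        final double friendlyMax;

        ThreatLevel(double hostileMin, double suspectMin, double neutralMin, double friendlyMax) {
            this.hostileMin = hostileMin;
            this.suspectMin = suspectMin;
            this.neutralMin = neutralMin;
            this.friendlyMax = friendlyMax;
        }
    }

    /**
     * Weighted sum of numeric factor scores. Both arrays are assumed to use the same order:
     * closing course, speed, altitude, signal, origin, mode.
     */
    static double score(double[] weights, double[] factors) {
        if (weights.length != factors.length) {
            throw new IllegalArgumentException("weights and factors must have the same length");
        }
        double sum = 0.0;
        for (int i = 0; i < weights.length; i++) {
            sum += weights[i] * factors[i];
        }
        return sum;
    }

    /** Maps a score to a classification using the thresholds for the given threat level. */
    static Classification classify(double score, ThreatLevel level) {
        if (score >= level.hostileMin) return Classification.HOSTILE;
        if (score >= level.suspectMin) return Classification.SUSPECT;
        if (score >= level.neutralMin) return Classification.NEUTRAL;
        if (score <= level.friendlyMax) return Classification.FRIENDLY;
        return Classification.UNKNOWN;
    }

    public static void main(String[] args) {
        // The same score leads to a harsher conclusion as the threat level rises.
        System.out.println(classify(520, ThreatLevel.WHITE));
        System.out.println(classify(520, ThreatLevel.YELLOW));
    }
}
```

With these thresholds, a score of 520 is Suspect at threat level White but Hostile at Yellow, which matches the stated intent that higher threat levels make Suspect or Hostile classifications more likely.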

Evaluation of the Simulation

Five sets of questions were selected as the focus of testing of the simulation. These explored the influence of important factors on the performance of the agents for the RSC, EWCO, and FORCE TAO individually and in the CIC team. Team skill, experience, fatigue, and the operational status of the SPY-1B radar were selected as the factors to explore. For each factor value, ten scenario runs were conducted, for 170 individual tests. The experts suggested that the most useful performance metrics were the duration of the actions of the watchstanders and their error rate. Unless otherwise indicated, tests assumed the watchstanders were Experienced in both the skill and experience factors, were fully rested, were balanced decision-makers, and had fully functional equipment. It was assumed that the external environment had medium contact density and threat level White; the hostile contact number was low; and it was clear weather. To simplify comparisons between tests, kinematic attributes of the contacts were constant, starting locations were constant, destination points were the same for the same starting point, no new contacts were created, defensive measures were disallowed, and IFF was always present for Neutrals. Table 3 shows the average percentage change in performance when varying the four test parameters from one extreme to the other; the error rate is significantly more affected than the other metrics.

Table 3: Average percentage change in task time, communications time, and error rate of three watchstanders, when varying key parameters over their range.

| Watchstander | Skill | Experience | Fatigue | SPY Radar Status |
| --- | --- | --- | --- | --- |
| RSC (Radar Systems Controller) | 3, 27, 47 | 5, 6, 14 | 5, 6, 14 | 7, 16, 11 |
| EWCO (Electronic Warfare Control Officer) | 5, 2, 35 | 12, 9, 34 | 5, 4, 41 | 2, 10, 15 |
| FORCE TAO (Force Tactical Action Officer) | 2, 11, 63 | 2, 2, 23 | 4, 5, 67 | 7, 17, 15 |

Another test compared a scenario where the FORCE TAO's skill and experience were Expert while the fatigue attribute was Exhausted (Trial #1) with a scenario in which the FORCE TAO's skill and experience were Basic and Newly Qualified respectively while the fatigue attribute was Fully Rested (Trial #2). For the other watchstanders in Trial #1, the skill and experience attributes were Basic and Newly Qualified respectively, while their fatigue attributes were Fully Rested; in Trial #2, their skill and experience attributes were Expert while their fatigue attributes were Exhausted. Trial #1 showed better performance than Trial #2 except in the initial radar detection time, suggesting that the status of the team is more important than the status of their commander.

Testing also included survey questions administered to nine air-defense experts at the ATRC Detachment in San Diego. Table 4 shows ratings for the simulation interface, where 1 meant "strongly disagree" and 5 meant "strongly agree". The experts were reasonably satisfied.

Table 4: Mean results for survey questions on the interface.

| Property | Survey result mean | Property | Survey result mean |
| --- | --- | --- | --- |
| Agent pop-up menu | 4.2 | Contact pop-up menu | 4.2 |
| Simulation logs menu | 4.0 | Doctrine setup menu | 4.0 |
| Scenario external attribute menu | 4.2 | Equipment setup menu | 4.0 |
| Watchstander attribute menu | 4.0 | File menu | 4.4 |
| Submenu items logically organized | 3.8 | Menus logically located by functional area | 3.8 |
| Menus easy to understand | 3.8 | Pop-up menus arranged logically | 4.2 |
| Menus arranged logically | 4.0 | Menus are intuitive | 3.8 |
| Methods for tasks are reasonable | 3.8 | Tasks are understandable | 4.0 |

The experts were also queried as to the realism of the simulation's variation in performance with key parameters (Table 5). For instance, one question (the upper left of the table) asked whether it was realistic for the Radar Systems Controller that performance time improved and the number of errors decreased when the Experience level increased. For these answers the scale was 1 (Strongly Disagree) to 7 (Strongly Agree). These results were also encouraging.

Table 5: Mean results for survey questions on the simulation realism.

| Issue as to realism | Survey result mean | Issue as to realism | Survey result mean |
|---|---|---|---|
| RSC skill change | 6.11 | RSC experience change | 6.00 |
| RSC fatigue change | 5.33 | RSC SPY radar change | 5.00 |
| Team interaction with RSC performance | 5.00 | EWCO skill change | 6.22 |
| EWCO experience change | 5.89 | EWCO fatigue change | 5.44 |
| EWCO SLQ-32 change | 5.44 | Team interaction with EWCO performance | 5.44 |
| FORCE TAO skill change | 6.00 | FORCE TAO experience change | 5.78 |
| FORCE TAO fatigue change | 5.56 | FORCE TAO decision-maker change | 4.33 |
| Team interaction with FORCE TAO performance | 5.22 | Team interaction with FORCE TAO decision-maker type | 4.44 |
| Realism of Trial #1 | 5.00 | Realism of Trial #2 | 4.67 |
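
Because Table 4 uses a 5-point scale and Table 5 a 7-point scale, their means cannot be compared directly. As a minimal sketch (not part of the ADC Simulation or of the original analysis), the following Java fragment rescales a reported mean to its relative position on its own scale, with 0 at the lowest rating and 1 at the highest. The class name `LikertScale` and the method `normalize` are hypothetical names introduced here for illustration; the input values are the means reported in the two tables.

```java
public final class LikertScale {
    private LikertScale() {}

    /**
     * Maps a mean rating on the scale [min, max] to its relative
     * position in [0, 1]. Assumes a simple linear rescaling, which is
     * an illustrative choice and not a method taken from the paper.
     */
    public static double normalize(double mean, double min, double max) {
        if (max <= min || mean < min || mean > max) {
            throw new IllegalArgumentException("rating outside scale");
        }
        return (mean - min) / (max - min);
    }

    public static void main(String[] args) {
        // Highest mean in Table 4 (interface, 1-5 scale).
        double fileMenu = normalize(4.4, 1, 5);
        // Highest and lowest means in Table 5 (realism, 1-7 scale).
        double ewcoSkill = normalize(6.22, 1, 7);
        double taoDecisionMaker = normalize(4.33, 1, 7);

        System.out.printf("File menu:                %.3f%n", fileMenu);
        System.out.printf("EWCO skill change:        %.3f%n", ewcoSkill);
        System.out.printf("FORCE TAO decision-maker: %.3f%n", taoDecisionMaker);
    }
}
```

Under this rescaling the best-rated interface item and the best-rated realism item land at about 0.85 and 0.87 of their respective scales, while the lowest realism item (FORCE TAO decision-maker change) sits only slightly above the midpoint.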

Conclusions

The Air Defense Commander (ADC) Simulation successfully simulates the mental processes, decision-making, cognitive attributes, and communications of an eleven-member CIC air-defense team performing their duties, including under stressful conditions. The ADC Simulation should help air-defense trainers gain insight into the degree to which watchstander skill, experience, fatigue, type of decision-maker, and environmental attributes influence the performance of the individual as well as the CIC watch team. The simulation offers enough flexibility and options for a user to study the effect of a variety of factors on performance. Future research directions include: a networked simulation; more detailed human models; aircraft contacts as agents; more detailed log parsing using XML; implementation of additional doctrines; alternate scenario locations; more detailed treatment of radar systems; a more detailed study of metrics for watchstander performance; a capability to replay previous scenarios and portions of them; and a capability to build scenarios. The simulation can also be adapted for wargaming, and provides a useful set of tools for building other simulations of human teamwork.

References

Alvarez-Vaquero, F., "Signal Processing Algorithms by Permutation Test in Radar Applications," SPIE Proceedings, Vol. 2841, Advanced Signal Processing Algorithms, Architectures, and Implementations VI, Denver, Colorado, August 1996, pp. 134-140.

Barcio, B. T., S. Ramaswamy, R. MacFadzean, and K. S. Barber, "Object-Oriented Analysis, Modeling, and Simulation of a Notional Air Defense System," IEEE International Conference on Systems for the 21st Century, Vancouver, BC, Canada, October 1995, Vol. 5, pp. 22-25.

Bloeman, A. F., and R. R. Witberg, "Anti-Air Warfare Research for Naval Forces," Naval Forces, Vol. 21, No. 5, pp. 20-24, 2000.

Burr, R. G., L. A. Palinkas, and G. R. Banta, "Psychological Effects of Sustained Shipboard Operations on U.S. Navy Personnel," Current Psychology: Developmental, Learning, Personality, Social, Vol. 12, 1993, pp. 113-129.

Calfee, S. H., "Autonomous Agent-Based Simulation of an Aegis Cruiser Combat Information Center Performing Battle Group Air-Defense Commander Operations," M. S. Thesis, Computer Science Department, U.S. Naval Postgraduate School, March 2003, available at www.cs.nps.navy.mil/people/faculty/'rowe/oldstudents/calfee-thesis.htm.

Choi, S. Y., and D. Wijesekera, "The DADSim Air Defense Simulation Environment," Proceedings of the Fifth IEEE International Symposium on High Assurance Systems Engineering, 2000, pp. 75-82.

Delaney, M. A., "AADC (Area Air Defense Commander): The Essential Link," Sea Power, Vol. 44, No. 3, pp. 30-34, March 2001.

Eddy, M. F., H. D. Kribs, and M. B. Cohen, "Cognitive and Behavioral Task Implications for Three Dimensional Displays Used in Combat Information/Direction Centers," Technical Report 1792, SPAWAR, San Diego, March 1999.

Federation of American Scientists, "AN/USQ-T46(V) Battle Force Tactical Training System," retrieved from www.fas.org/man/dod/-101/sys/ship/weaps/an-usq-t46.htm, May 2003.

Ferber, Jacques, Multi-Agent Systems: An Introduction to Distributed Artificial Intelligence, Addison-Wesley, 1999, p. 11.

Liebhaber, M. J., and C. A. P. Smith, "Naval Air Defense Threat Assessment: Cognitive Factors and Model," Command and Control Research and Technology Symposium, Monterey, CA, June 2000.

Maiorano, A. G., N. P. Carr, and T. J. Bender, "Primer on Naval Theater Air Defense," Joint Force Quarterly, No. 11, Spring 1996, pp. 22-28.

Morrison, J. G., R. T. Kelly, R. A. Moore, and S. G. Hutchins, "Implications of Decision-Making Research for Decision Support and Displays," in Cannon-Bowers, J.A., and E. Salas (eds.), Making Decisions under Stress: Implications for Training and Simulation, Washington DC: APA Press, 1998, pp. 375-406.

Noh, S., and P. J. Gmytrasiewicz, "Rational Communicative Behavior in Anti-Air Defense," Proceedings of International Conference on Multi Agent Systems, Paris, France, July 1998, pp. 214-221.

Osga, G., K. F. Van Orden, N. Campbell, D. Kellmeyer, and D. Lulue, "Design and Evaluation of Warfighter Task Support Methods in a Multi-Modal Watch Station," Technical Report 1864, Space and Naval Warfare Systems Center, San Diego, June 2001.

Weaver, J. L., C. A. Bowers, E. Salas, and J. Cannon-Bowers, "Networked Simulations: New Paradigms for Team Performance Research," Behavior Research Methods, Instruments, and Computers, Vol. 27, No. 1, 1995, pp. 12-24.

ACKNOWLEDGMENTS

We thank John Hiles, Donald Gaver, Patricia Jacobs, and Robert Harney of the Naval Postgraduate School; Lcdr. J. Lundquist, Lt. Brian Deters, OSCS Mackie, OSC Couch, and OSC Coleman of the AEGIS Training & Readiness Center, Detachment San Diego, California; FCC Timothy Simmons of the Fleet Technical Support Center, Pacific; and Glenn Osga of the Space and Naval Warfare Systems Center, San Diego, California. The latter organization funded Lt. Calfee's research through the SPAWAR Research Fellowship Program.